At a Glance: Ray vs. Spark

What is Ray?

Python-Native Distributed Framework

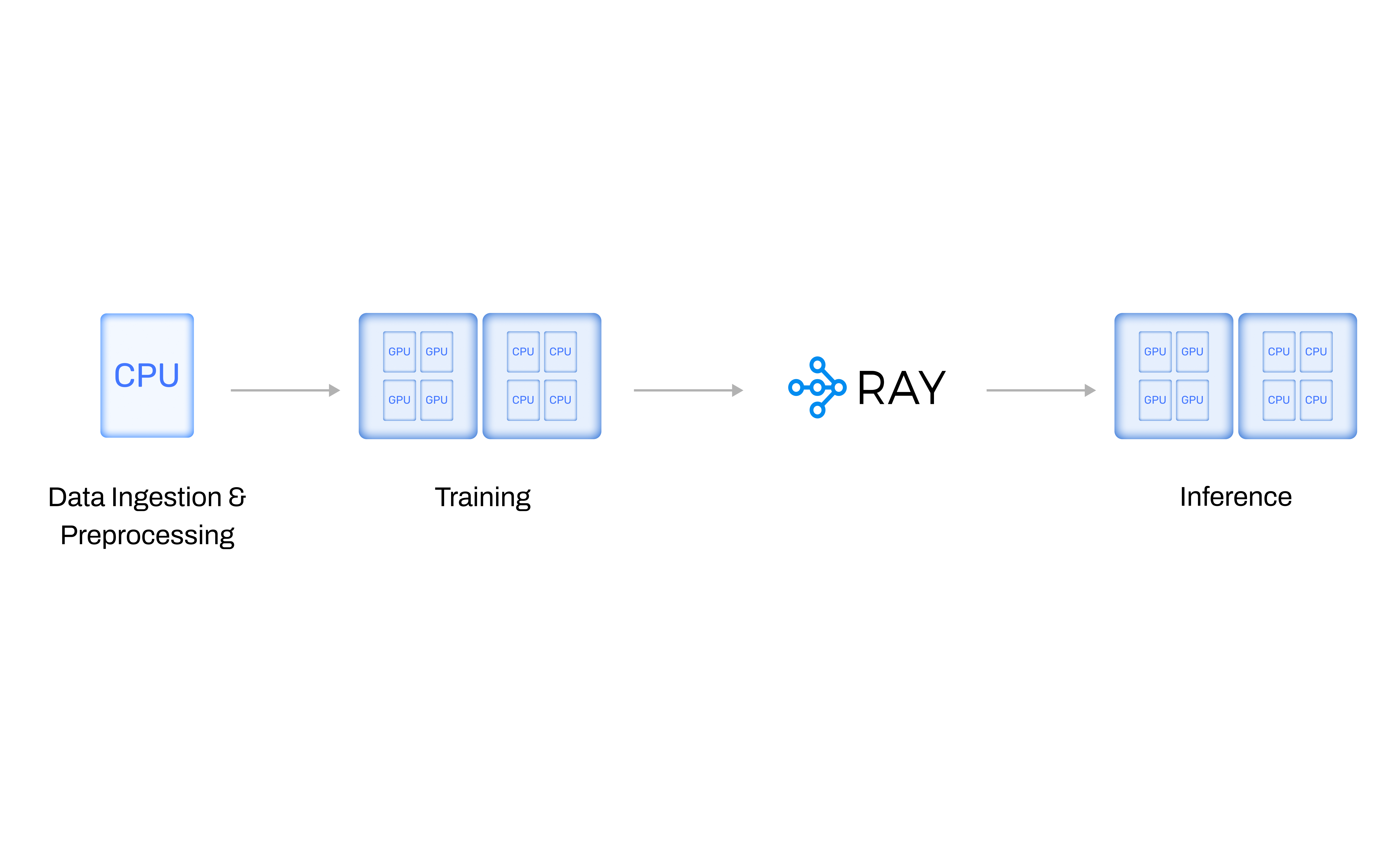

Parallelize and scale your machine learning applications from data processing to model training to model serving and beyond.

Native GPU Capabilities

Ray works with any cloud and any accelerator, allowing you to scale across your private, secure cloud resources.

Cutting-Edge AI, Data, and ML Libraries

Ray offers five libraries built on top of Ray Core (Ray Data, Ray Train, Ray Tune, Ray Serve, and RLlib) for ML data processing, model training, tuning, serving, and reinforcement learning.

Future-Proof Technology

Ray’s cloud-native infrastructure and first-class cluster management make it the go-to choice for GPU and CPU computing.

4 Fundamental Differences Between Ray and Spark

Core API Differences

Ray Core's flexible and general API makes it ideal for scaling a wide variety of AI workloads (including ML, deep learning, and GenAI) as well as generic Python apps. In contrast, Spark's wider core API is supplemented by feature-rich libraries like Spark SQL that make it effective at scaling general data processing and classic ML.

AI Compute Engine for Any Workload

Ray is Python-native, cloud-first, and future-proof, making it the best choice for modern and future distributed computing challenges.

Supported Workloads and Use Cases

Unstructured Data Processing

Model Training

Model Serving

Gen AI Workloads

Structured Data Processing

Ray’s flexibility supports Spark on Ray for structured data processing.


Scaling Arbitrary Python Programs

|  | |

|---|---|---|

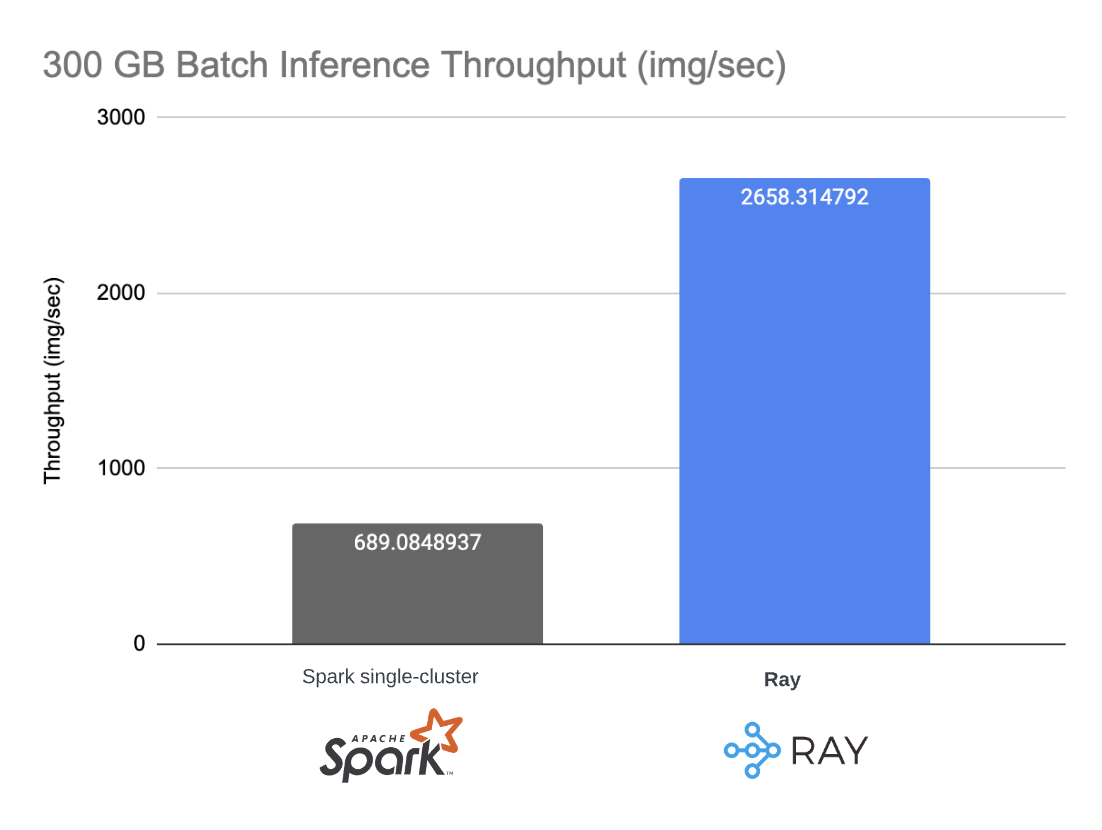

Unstructured Data Processing |  Ray was built to support modern AI/ML workloads, and it excels at processing unstructured data, such as video, images, and text. Ray’s ability to orchestrate heterogeneous compute resources makes it ideal for processing multimodal data across GPUs and CPUs, significantly improving efficiency and reducing cost. |  While Spark does offer some support for unstructured data processing, its SQL and Dataframes APIs are optimized for structured and semi-structured data. Spark is less efficient at processing unstructured data, including multimodal data, especially when requiring heterogeneous compute resources. |

Model Training |  Ray is not just a framework for processing unstructured data. Once you’ve processed your data, Ray Data integrates seamlessly with the other Ray libraries including Ray Train for training deep-neural networks (DNNs), large language models (LLMs), as well as classic ML models. |  Spark is primarily a data processing platform. While it supports classic ML workloads, it is less effective at training LLMs and other deep neural networks, which require hardware-accelerator support. |

Model Serving |  Ray Data connects seamlessly to Ray Serve, making it possible to serve any AI model after pre-processing your data. |  Spark focusses on data processing, and has little support for LLM/DNN serving, especially in online settings. |

Gen AI Workloads |  Ray’s ability to orchestrate heterogeneous compute across GPUs and CPUs, as well as its Pythonic API, make it the leading choice for scaling AI and GenAI workloads, no matter how sophisticated these workloads are. |  While Spark is effective for classic ML workloads, many modern AI and GenAI workloads require support for hardware accelerators and more flexible, low-level APIs. |

Structured Data Processing |  Ray’s flexibility supports Spark on Ray for structured data processing. Ray was built for modern and future AI/ML workloads, where engineers are largely manipulating unstructured data. Ray isn’t optimized for most structured data processing, though it is effective at last-mile preprocessing. Ray’s flexibility supports Spark on Ray for structured data processing. |  Spark is optimized for high-performance processing of structured and semi-structured relational data through its SQL and Dataframe APIs. While effective for classic ML workloads, many modern AI and GenAI workloads include large amounts of unstructured, multimodal data. |

Scaling Arbitrary Python Programs |  With its general, Python-native API, Ray supports arbitrary Python applications. Developers can take their existing applications and scale them by just adding a few lines a code. No need to rewrite them! |  Spark is implementing a data parallel computation model. While a good fit for scaling data workloads, it is not flexible enough to scale arbitrary workloads. |

Already Using Spark? Try Spark on Ray

Use your data in meaningful ways across a variety of AI and GenAI workloads with Spark on Ray.

One Unified Cluster

Easily run on-demand Spark jobs via the Ray cluster launcher to enable advanced Ray functionality like autoscaling.

Seamless Migration

Effortlessly migrate from Spark to Spark on Ray, and use your Spark code as-is.

Simple Integration

Convert a Spark DataFrame to a Ray Dataset with simple, powerful APIs, and run data processing and training pipelines on a single cluster.


The Best Option for Data Processing At Scale

Get up to 60% cost reduction on unstructured data processing with Anyscale, the smartest place to run Ray.