Summary

Apache Spark was built for a CPU-centric world that relied on structured and semi-structured data. Ray is better suited to the future of AI/ML workloads because it enables heterogeneous compute (compute across GPUs and CPUs) and offers best-in-class unstructured data processing.

Overview

With so many tools available in the AI and ML space, it can be hard to see where each one stands apart and what the differences are. One of the most common questions we get at Anyscale is how Ray differs from Apache Spark.

Apache Spark, created in 2009, was designed for a world where most computation happened on CPUs and where structured data processing and Business Intelligence (BI) were critical. But as technology has evolved, and as we expand into AI, GenAI, and complex use cases, more flexible and general-purpose tools like Ray, which can parallelize compute heterogeneously across CPUs and GPUs, are better positioned to support current and future workloads.

Similarities Between Ray and Spark

The question “What is the difference between Ray and Spark?” is surprisingly complex, because Ray and Spark share many commonalities:

- They are both popular open source projects that aim to make it easy for users to leverage cluster computation.
- Architecturally, they both feature language-integrated APIs, a centralized control plane, and lineage-based fault tolerance.
- They were both created in the same lab at UC Berkeley.
- At their core, they both offer support for distributing functions across a dataset.
- Both Ray and Spark can be used with ML libraries.
- They both offer integrations with common file formats, like Parquet or CSV.

However, due to inherent design differences, each system is uniquely suited for different use cases. Understanding these fundamental domain-driven differences is critical when choosing which tool to use.

Use Case Comparison

For a quick glance at how Ray differs from Spark, refer to our table below. Or, read on for a full breakdown of the technical differences between Spark and Ray.

| Application Type | Spark | Ray |
|---|---|---|
| Structured Data Processing | ✅ | ✅ |
| Unstructured Data Processing | Limited | ✅ |
| Data Loading | ✅ | ✅ |
| Change Data Capture | ✅ | ✅ |
| Batch Processing | ✅ | ✅ |
| Streaming Workloads | ✅ | Coming Soon |
| Batch Inference | Limited | ✅ |
| SQL Support | ✅ | ❌ |
| Hyperparameter Tuning | Limited | ✅ |
| High Performance Computing (HPC) | Limited | ✅ |
| Distributed Model Training | Limited | ✅ |
| Traditional ML | ✅ | ✅ |
| Reinforcement Learning | ❌ | ✅ |
| GenAI | Limited | ✅ |

Technical Differences Between Ray and Spark

Difference 1: Original Framework Purpose

To understand the difference between Spark and Ray, and the benefits of each, you must first understand the problem each framework was built to solve. Spark, as the older tool, was built for structured data processing. By contrast, Ray, which was developed in 2017, was created to handle modern and complex AI workloads. It was originally made because tools like Spark couldn’t easily distribute tasks like complex and custom model training algorithms. As a Python-native tool, Ray is more general purpose for ML workloads. Additionally, Ray libraries offer added functionality for specific workloads like data processing and model training.

The modern AI-enabled organization is making use of multimodal data, which emphasizes the need for effective unstructured data processing. Let’s take a look at how data types, hardware demands, and community standards have changed over time:

| | “Traditional” data processing | Multimodal & unstructured data processing, AI model training and serving |
|---|---|---|
| Data Types | Focus on high-performance processing of structured or semi-structured relational data | Work with structured data and a variety of unstructured data types such as images, video, text, and audio |
| Hardware Demands | Large homogeneous CPU clusters | Heterogeneous CPU & GPU clusters, AI accelerators |
| Community Standards | SQL/DataFrame (e.g., ANSI SQL) and Java | PyTorch/TensorFlow and Python |

Technical Difference 1: Core API Configuration

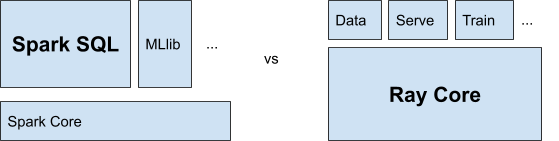

Supporting ML frameworks leads to a broader, more flexible API in Ray Core and thinner supplemental libraries. In contrast, Spark features a thin, narrow core API and featureful libraries.

Figure 1. Compare and contrast Ray vs Spark libraries. Spark features heavyweight, featureful libraries, whereas Ray libraries are thinner parallelism wrappers around existing frameworks.

The main tradeoff of having a broader API is learning speed vs. capability. Spark Core is easier to get started with, but because Ray Core has a broader and more flexible API, it supports additional frameworks (like Spark and SQL libraries) with minimal performance impact, whereas running frameworks like Pandas or Ray on top of Spark usually comes with a significant performance impact.

Consequently, Spark SQL inherently needs to implement optimizations from the literature (e.g., code generation, vectorized execution). On the other hand, Ray's libraries are oriented more around reducing the performance overhead and operational complexity of compositions of workloads (e.g., graphs of model deployments, ingest for distributed training, or preprocessing for batch inference), enabling existing single-node optimized code to leverage cluster compute.

Technical Difference 2: Exposure to Primitives

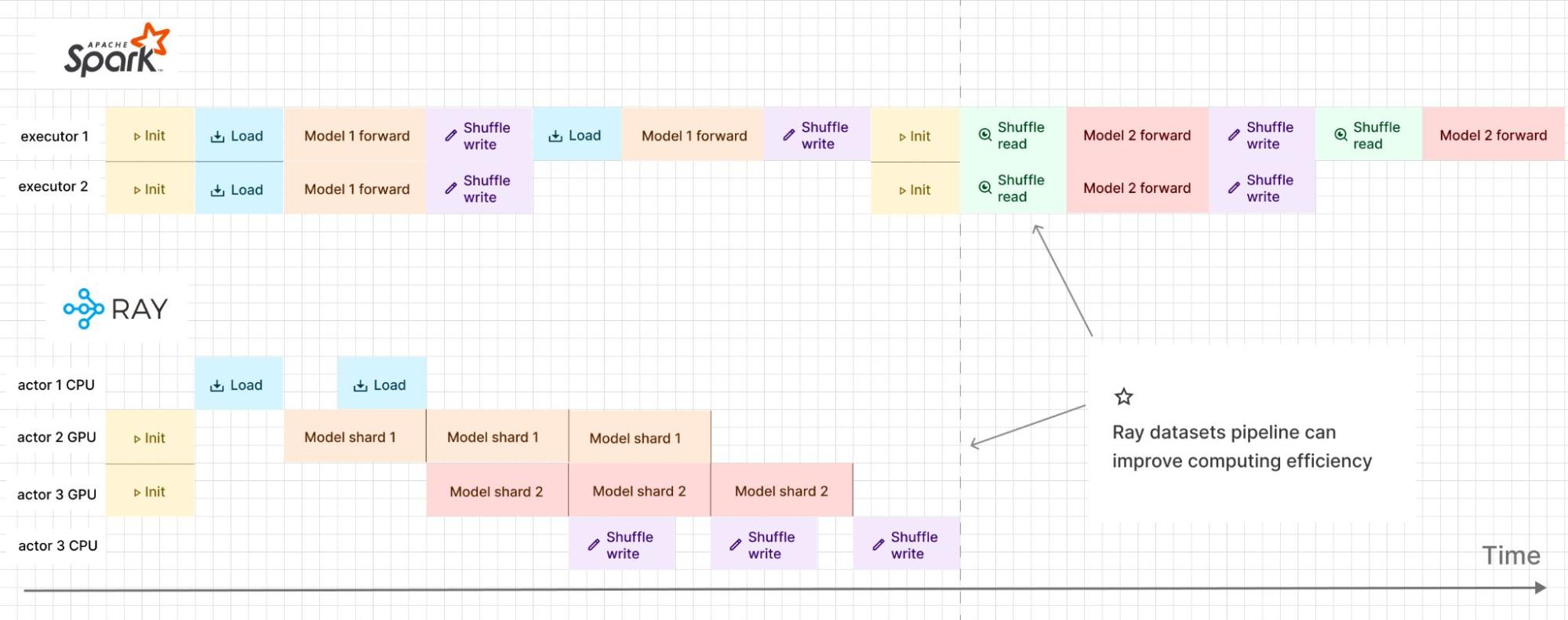

Because they had different goals in mind, Ray and Spark expose primitives at different levels.

Spark is primarily a high-level engine, where you tell it the “what” and it takes care of the “how.” Ray Core is a low-level engine with fundamental Python primitives where you have complete control over the “how,” with high-level libraries built on top, such as Ray Train, Tune, and Data, which provide those high-level primitives.

Spark SQL implements a relational query processing model, which means the system hides parallelism from the user. This is possible because the data community has standardized on SQL. As a result, the Spark Core API exposes a smaller set of primitives (e.g., RDDs, broadcast variables) that are optimized for bulk execution, which in turn makes pipeline parallelism very difficult to implement in Spark.

Ray exposes parallelism because the community baseline is ML frameworks (e.g., TensorFlow, PyTorch) and Python. This means that Ray needs to expose parallelism to the user in order to enable these frameworks to run on top of Ray (e.g., setting up torch worker groups using Ray actors).

What this means is that Ray exposes a number of low-level APIs that Spark hides, including:

- The ability to work with individual actors (comparable to executors in Spark)
- The ability to dispatch individual tasks (vs. jobs in Spark)
- A first-class distributed object API (private in Spark)
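To make these three primitives concrete, here is a minimal sketch that uses only Python's standard library. It is a toy model and not Ray's API: `square`, `total`, and `ToyActor` are hypothetical names, a `Future` stands in for a reference to a distributed object, and a thread pool stands in for a cluster.

```python
from concurrent.futures import Future, ThreadPoolExecutor

# A thread pool stands in for a cluster (assumption: toy model only).
pool = ThreadPoolExecutor(max_workers=4)


def square(x: int) -> int:
    """An individual task: one function call dispatched on its own."""
    return x * x


def total(futures: list[Future]) -> int:
    """A downstream task that consumes the outputs of upstream tasks."""
    return sum(f.result() for f in futures)


class ToyActor:
    """A stateful worker: method calls run one at a time on a dedicated thread."""

    def __init__(self) -> None:
        self._mailbox = ThreadPoolExecutor(max_workers=1)
        self._count = 0

    def _add(self, n: int) -> int:
        self._count += n
        return self._count

    def add(self, n: int) -> Future:
        # Calls are queued and executed in submission order, so state stays consistent.
        return self._mailbox.submit(self._add, n)


# Dispatch individual tasks; each returns a handle to a not-yet-computed object.
handles = [pool.submit(square, i) for i in range(4)]

# Pass the handles to another task without first collecting the values.
sum_of_squares = pool.submit(total, handles).result()

# Talk to one specific long-lived worker and read its accumulated state.
counter = ToyActor()
for n in (1, 2, 3):
    last = counter.add(n)
running_total = last.result()
```

The point of the sketch is the shape of the programming model: the caller decides which function runs when, which worker holds state, and which handles flow between tasks, rather than describing a query and letting an engine plan it.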

Figure 2. Example of Apache Spark dataset pipeline and Ray Core dataset pipeline.

Difference 2: Data Types

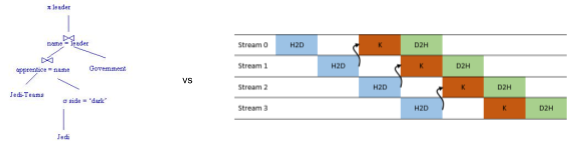

The most obvious difference between Ray and Spark is around data processing support.

Spark includes a mature relational data processing engine, which means it can flexibly and performantly accelerate ETL and analytics workloads, including joins and complex transformations, which are features of the Spark SQL library.

In contrast, Ray was designed to support ML workloads, which require simpler, more "brute-force" custom data transformations, typically along the lines of loading data, applying UDF transforms, and then feeding the data into GPUs as fast as possible. This reduces the need for advanced query planning or expression support, instead placing emphasis on efficiently leveraging cluster memory and network resources. It gives a more Pythonic API for ease of migration from single-node Python to scalable data pipelines instead of focusing on SQL operations.

Accordingly, the Ray Data library does not optimize for ETL or analytics operations, but instead focuses on high-throughput streaming for unstructured data for ML inference and training or processing data that doesn’t work well with standard dataframe operations.
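The "load, apply a UDF, feed the accelerator" pattern can be sketched with plain Python generators. This is an illustration under stated assumptions, not Ray Data's implementation: `load_records`, `apply_in_batches`, and `normalize` are hypothetical helpers, and integers stand in for images or audio clips.

```python
from typing import Callable, Iterable, Iterator


def load_records(n: int) -> Iterator[int]:
    """Stand-in for reading files lazily; yields one record at a time."""
    for i in range(n):
        yield i


def apply_in_batches(
    records: Iterable[int],
    udf: Callable[[list[int]], list[int]],
    batch_size: int,
) -> Iterator[list[int]]:
    """Group records into batches and apply a user-defined function to each."""
    batch: list[int] = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield udf(batch)
            batch = []
    if batch:  # flush the final partial batch
        yield udf(batch)


def normalize(batch: list[int]) -> list[int]:
    """Hypothetical preprocessing UDF."""
    return [x * 2 for x in batch]


consumed = []
for batch in apply_in_batches(load_records(10), normalize, batch_size=4):
    # In a real pipeline this is where a batch would be handed to the GPU.
    consumed.append(batch)
```

Because every stage is lazy, only one batch is in flight at a time, so memory use depends on the batch size and not on the size of the dataset. No query planner is involved: the transformation is an opaque Python function.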

Figure 3. Spark SQL focuses on relational query planning (left), compared to Ray Data, which optimizes for streaming preprocessing and loading into GPUs (right).

Spark: Traditional Data Processing

Historically, most data processing happened on structured or semi-structured data: data that is formatted and organized, typically in a database.

Processing structured data usually implies ETL and analytics workloads, including joins and complex transformations. Once this data is processed, it is then “handed off” to a downstream workload, for example to an ML team.

Therefore, the “traditional” AI pipeline looked as follows:

- "Data" typically originates from some upstream source (e.g., request logs)
- The data is then processed for internal use (e.g., via data warehouses, ETL)
- Data is consumed by downstream teams (e.g., for machine learning training)
- Finally, the data is used to either build or improve a product or application

Figure 4. In the “traditional” AI pipeline (in other words, the processing of structured data): Data processing (ETL/Analytics) is typically an upstream workload of ML infra (Serving, Tuning, Training, Inference, LLMs). Both have needs for distributed computation.

However, this pattern applies primarily to traditional AI pipeline setups. Many modern data pipelines now need AI to process the data, which breaks this pattern: instead of only preparing data for training at the end, AI models are needed to process data in the early steps. As an example, when processing audio files, the recordings often need to be transcribed into text by an AI model during the first few steps. With the rise of deep learning, GenAI, and multimodal models, it is vital to be able to use these models at any stage of a data pipeline.

Ray: Modern AI/ML (Unstructured and Structured Data + AI model training and serving)

The things that make Spark good for traditional AI data processing are the same things that hold Spark back from being truly effective at unstructured data processing. As AI computing moves forward, there’s a reduced need for advanced query planning or expression support, and a greater emphasis on efficiently leveraging cluster memory and network resources.

When processing unstructured data, the modern AI data pipeline looks slightly different from above:

- "Data" comes in new and varied formats, including text, image, video, audio, etc.
- To process data in a cost-effective way, we typically want to incorporate pipeline parallelism and/or heterogeneous compute. The important thing is being able to achieve high hardware utilization (and therefore cost efficiency) while controlling spend with regard to the amount of resources committed to a given workload.
- The trained model is then improved (typically with additional unstructured data)
- Finally, the data is used to either build or improve a product or application
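Pipeline parallelism across two kinds of hardware can be illustrated with two threads and a bounded queue. This is a sketch under assumptions: `cpu_preprocess` and `gpu_infer` are hypothetical stand-ins (no real GPU is used), and strings stand in for media files.

```python
import queue
import threading

SENTINEL = None  # marks the end of the stream


def cpu_preprocess(items, out_q):
    """CPU stage stand-in (e.g., decode or resize). Blocks when the queue is full."""
    for item in items:
        out_q.put(item.upper())
    out_q.put(SENTINEL)


def gpu_infer(in_q, results):
    """Accelerator stage stand-in: consumes items as soon as they are ready."""
    while True:
        item = in_q.get()
        if item is SENTINEL:
            break
        results.append(f"label:{item}")


# A small bound keeps memory flat and applies backpressure to the CPU stage.
handoff = queue.Queue(maxsize=2)
results = []
stages = [
    threading.Thread(target=cpu_preprocess, args=(["a", "b", "c", "d"], handoff)),
    threading.Thread(target=gpu_infer, args=(handoff, results)),
]
for t in stages:
    t.start()
for t in stages:
    t.join()
```

Both stages run at the same time, so the expensive stage does not sit idle while the cheap one finishes the whole dataset. In a bulk execution model, the first stage would have to complete for all items before the second could start.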

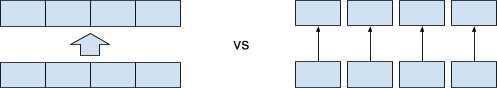

Technical Difference: Coarse vs. Fine-Grained Lineages

Ray's API requires tracking the system state and lineage of individual tasks, actors, and objects. This is in contrast to Spark, which tracks the lineage of coarser-grained job stages.

Figure 5. Spark provides coarse-grained lineage for fault tolerance (left), compared to Ray's fine-grained lineage (right).

The fine-grained execution model of Ray Core enables it to effectively parallelize ML frameworks, which are often harder to shoehorn into Spark's bulk execution model. Fine-grained lineage also enables fully pipelined execution in Ray Data (compared to bulk execution in Spark), with the same efficient lineage-based fault tolerance. On the other hand, Spark's scheduling model allows it to more efficiently implement optimizations for large-scale data processing (i.e., DAG scheduling).
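As a rough illustration of what per-object lineage buys you, the toy store below records which function and which inputs produced each object, then rebuilds a single lost object on demand. `LineageStore` and its methods are hypothetical and do not reflect how Ray or Spark implement recovery internally.

```python
from typing import Callable


class LineageStore:
    """Toy object store that remembers how each object was produced."""

    def __init__(self) -> None:
        self._values: dict[str, int] = {}
        # object id -> (function, ids of the input objects)
        self._lineage: dict[str, tuple[Callable[..., int], tuple[str, ...]]] = {}
        self.recomputed: list[str] = []

    def run_task(self, out_id: str, fn: Callable[..., int], *input_ids: str) -> str:
        """Run one task, store its output, and record its lineage."""
        self._lineage[out_id] = (fn, input_ids)
        self._values[out_id] = fn(*(self.get(i) for i in input_ids))
        return out_id

    def lose(self, obj_id: str) -> None:
        """Simulate a node failure that drops one object from memory."""
        self._values.pop(obj_id, None)

    def get(self, obj_id: str) -> int:
        """Return an object, re-running only the tasks needed to rebuild it."""
        if obj_id not in self._values:
            fn, input_ids = self._lineage[obj_id]
            self._values[obj_id] = fn(*(self.get(i) for i in input_ids))
            self.recomputed.append(obj_id)
        return self._values[obj_id]


store = LineageStore()
store.run_task("a", lambda: 2)
store.run_task("b", lambda: 3)
store.run_task("c", lambda a, b: a + b, "a", "b")
store.run_task("d", lambda b: b * 10, "b")

store.lose("c")         # only object "c" is lost
value = store.get("c")  # rebuilt from "a" and "b", which are still in memory
```

Only the task that produced `"c"` runs again; the unrelated object `"d"` and the surviving inputs are untouched. With stage-level lineage, the unit of recomputation would be a larger group of tasks.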

Difference 3: Accelerator Compatibility

Traditionally, data workflows ran on CPUs, which are better at executing a sequence of work quickly. However, as AI and ML have evolved, so too have our accelerator needs. The majority of AI workloads now use parallel processing in some capacity, whether because the data doesn’t fit on one chip or because it’s faster to parallelize work across many chips.

GPUs are particularly good at parallel processing. The problem is, GPUs are expensive—more so than CPUs. So modern AI workloads need to be able to access GPU processing power when necessary, but also offset GPU costs with cheaper CPU computing when possible.

The need for GPUs in ML infra creates two distinct scheduling requirements not typically needed for “traditional” AI and structured data processing:

- Gang-scheduling: Large models (e.g., LLMs) often require multiple GPUs or multiple GPU nodes to be scheduled at once.
- Heterogeneous compute: Mixed CPU-GPU clusters can save costs when there is the need for CPU-intensive data preprocessing prior to GPU compute for inference and training workloads.
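The all-or-nothing nature of gang scheduling can be sketched in a few lines. This is a simplified model under assumptions: `gang_schedule` is a hypothetical function, the node names and GPU counts are made up, and the greedy first-fit heuristic can reject a group that a smarter packer would place.

```python
from typing import Optional


def gang_schedule(
    free_gpus: dict[str, int], bundles: list[int]
) -> Optional[dict[int, str]]:
    """Place every bundle (GPUs needed by one worker) or place none of them.

    Works on a copy of the cluster state, so a failed attempt leaves
    `free_gpus` untouched. Returns a mapping of bundle index to node name.
    """
    trial = dict(free_gpus)
    placement: dict[int, str] = {}
    # Place the largest bundles first; they are the hardest to fit.
    for idx in sorted(range(len(bundles)), key=lambda i: -bundles[i]):
        node = next((n for n, free in trial.items() if free >= bundles[idx]), None)
        if node is None:
            return None  # one worker cannot start, so none are started
        trial[node] -= bundles[idx]
        placement[idx] = node
    free_gpus.update(trial)  # commit all reservations at once
    return placement


cluster = {"node-a": 4, "node-b": 2}

# Three workers needing 2 GPUs each fit: two on node-a, one on node-b.
ok = gang_schedule(cluster, [2, 2, 2])

# Nothing is left, so a second group is rejected as a whole.
rejected = gang_schedule(cluster, [1, 1])
```

The key property is the single commit at the end: a distributed training job never ends up holding half of its GPUs while waiting for the rest.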

Technical Difference: Heterogeneous vs Homogeneous Compute

As a framework for primarily processing structured data, Spark is optimized for making more frequent, more homogeneous scheduling decisions. It does so in order to optimize for fair sharing between jobs and data locality of tasks.

In contrast, because Ray was built with modern ML workloads in mind, it has first-class support for heterogeneous and gang scheduling, meaning that Ray makes fewer, but more complex, scheduling and autoscaling decisions.

Figure 6. Spark optimizes for data locality-aware scheduling in homogeneous clusters, whereas Ray better supports heterogeneous clusters with gang scheduling (placement groups).

Difference 4: Data Processing

The choice of whether to use Ray or Spark depends in part on the use case you’re planning to run. There are some instances where Spark’s traditional data processing structure is better suited for the task. But if you’re working on more advanced ML workloads with unstructured or varied data, Ray is likely the better option.

The Difference Between Ray Core & Ray Data

The first thing to understand when comparing Spark and Ray for specific data processing use cases is that you’re not actually comparing Spark and Ray.

Spark—though it has select support for traditional machine learning through libraries like MLlib—is primarily a big data processing tool.

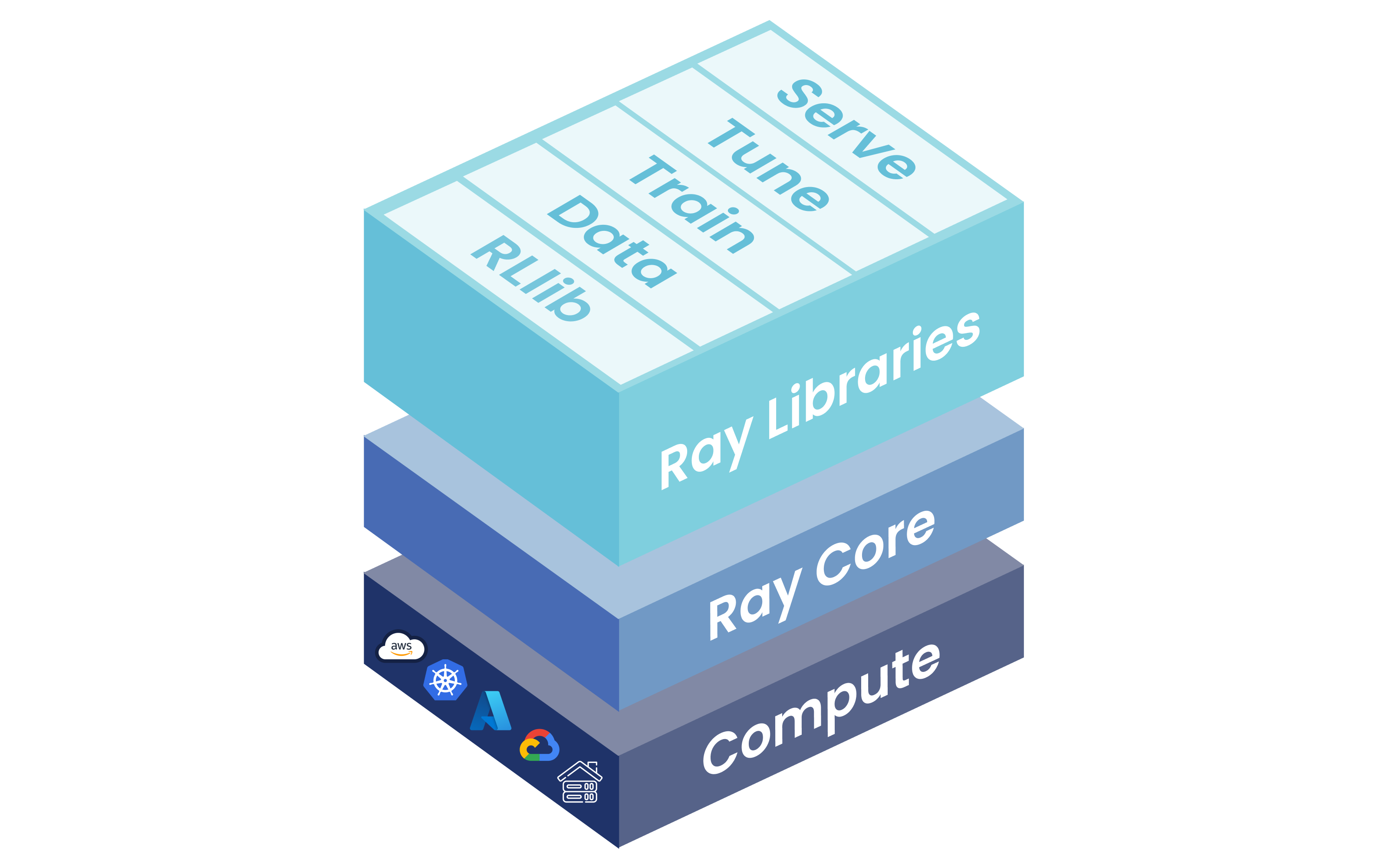

Ray as a whole is an open source framework for distributed computing. Built on top of Ray Core are several high-level libraries that focus on specific AI workloads—including data processing with Ray Data.

In order to directly compare Spark and Ray for data processing, we will be comparing Apache Spark to Ray Data in the following section.

Bulk Processing

Both data processing and ML infrastructure workloads frequently require distributed computation. This comes in the form of generic bulk-processing (i.e., embarrassingly parallel jobs). For these workloads, Ray provides Tasks, Datasets, and distributed multiprocessing. Spark provides DataFrames, Datasets, and RDDs. However, bulk processing is a commoditized area. For simple enough parallel workloads, these options will work equally well.
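For reference, an embarrassingly parallel job has the shape below: every item is processed independently, so the work can be split across any number of workers. The sketch uses a local thread pool from the standard library as a stand-in for a cluster; `word_count` and the sample documents are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def word_count(document: str) -> int:
    """Independent per-item work: no item needs any other item's result."""
    return len(document.split())


documents = ["ray and spark", "bulk processing", "one"]

# Each call is independent, so the work can be spread over any number of workers.
with ThreadPoolExecutor(max_workers=3) as pool:
    counts = list(pool.map(word_count, documents))
```

Because there is no communication between items, the choice of framework matters little here; what differs is mostly how the function and the data are handed to the workers.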

Structured Data Processing

Spark is better suited for structured data processing than Ray, because of its original purpose: big data processing. At its core, Apache Spark is a data processing engine, created to address the limitations of Hadoop’s MapReduce. Spark’s Core API includes support for ETL and analytics workloads. For SQL-style workloads, Spark is typically the recommended choice.

Unstructured Data Processing

Ray Data is better suited for unstructured data processing than Spark. Unlike Spark, Ray easily supports heterogeneous compute, which is crucial for unstructured data processing. With Ray Data, you can process any type of unstructured data, including text, images, audio, video, and beyond.

Difference 5: Support for AI and GenAI Use Cases

Ray Core simplifies distributed computing for any AI/ML workflow. Advanced ML and AI libraries like Ray Train and Ray Serve enable developers to not only process data with Ray, but also train on that data, then deploy and serve models.

Ray's libraries natively support accelerated computing and integrate with ML frameworks like PyTorch and TensorFlow across a wide range of AI use cases.

Spark is limited to traditional ML and CPU-based workloads. Many Spark providers turn to Ray for model training and unstructured data processing.

Technical Feature Comparison

| | Ray | Apache Spark |
|---|---|---|
| Programming Language | Ray is Python-native, with additional support for Java, C++, and others. | Spark primarily supports Scala and Java, with API extensions for Python (PySpark) and R. |
| Task Scheduling | Ray enables dynamic task scheduling with actors and task dependencies. | Spark offers static, DAG-based execution for task scheduling. |
| Heterogeneous Compute | Ray is the best and easiest way to manage distributed computing, including heterogeneous compute. With Ray, you can effectively manage heterogeneous clusters to process your data as efficiently and effectively as possible. | Spark does not natively support heterogeneous compute. |
| Fault Tolerance | Ray supports fault tolerance in a variety of ways, including checkpointing, task retries, and actor-based fault tolerance. | Spark supports fault tolerance with built-in lineage information and re-computation of lost data. |
| Stateful Computation | Ray supports stateful actors that can maintain state across tasks. | Spark has limited stateful processing, and typically requires external storage to support advanced stateful computation (e.g., HDFS). |
| Deployment Support | Ray supports serverless deployment, and also offers a popular open source Kubernetes integration, KubeRay. | Spark typically runs on YARN, Mesos, Kubernetes, or standalone clusters. |
| Bulk Processing | Ray’s built-in distributed parallelism makes bulk processing quick and easy. | Spark’s built-in DataFrames, Datasets, and RDDs make bulk processing possible. |
| Cluster Management | With Ray, you can scale from your laptop to the cloud with just one Pythonic decorator. Ray also includes key cluster management capabilities like autoscaling. | Spark uses a component called SparkContext to coordinate cluster managers and allocate resources across applications. |
| Resource Management | Ray offers fine-grained control and dynamic resource scaling. | Spark focuses on static resource allocation with limited dynamic capabilities. |
| Locality Scheduling | Ray supports locality scheduling through cluster management. Specifically, Ray automatically sorts nodes within a cluster to first favor those that already have tasks or actors scheduled (for locality), then to favor those that have low resource utilization (for load balancing). | Spark allows you to configure your locality level at the cluster level, among others. |
| ETL | Ray has limited native support for ETL transformations. You can also coordinate ETL transformations in Ray through the Spark on Ray library. | As a tool initially built for data workloads, Spark is particularly effective at ETL transformations. |
| API Paradigm | Ray’s API is actor-based and task-based, with additional remote functions. | Spark’s API offers batch processing, SQL-like operations, and streaming. |
| Integration with External Tools | As a Python-native tool, Ray easily integrates with other Python-based tools, frameworks, and libraries. | Spark integrates well with the Hadoop ecosystem, as well as data lakes and BI tools. |
| Concurrency | Ray supports both parallel and concurrent workloads. | Spark is designed primarily for parallel batch processing. |
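The node ordering described in the Locality Scheduling row can be read as a two-level sort. The sketch below is a literal reading of that description and not Ray's scheduler code: `Node`, `rank_nodes`, and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    running: int        # tasks or actors already scheduled on the node
    utilization: float  # fraction of resources in use, 0.0 to 1.0


def rank_nodes(nodes: list[Node]) -> list[Node]:
    """Order candidates: nodes with work first (locality), then least utilized."""
    return sorted(nodes, key=lambda n: (n.running == 0, n.utilization))


nodes = [
    Node("idle", running=0, utilization=0.0),
    Node("busy-hot", running=5, utilization=0.9),
    Node("busy-cool", running=2, utilization=0.3),
]
order = [n.name for n in rank_nodes(nodes)]
```

Here the idle node comes last even though it has the lowest utilization, because the first sort key prefers nodes that already host work. Among those, the less loaded node wins.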

Spark on Ray

Ray may be best suited for modern AI workloads, but Spark still has valuable features and applications depending on your use case. To get the best of both, you can build the Spark Core API (i.e., RDDs) on top of Ray Core (e.g., tasks and objects). This gives you the power and flexibility of Ray, with some additional functionality from Spark.

Some examples of what you can do with Spark on Ray:

- Implement RDD transformations with Ray tasks
- Implement the Spark shuffle primitive with Ray tasks and objects
- Implement broadcast variables with `ray.put()`
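To show how a map transformation and a shuffle decompose into plain tasks and intermediate objects, here is a word count built from those pieces. It is a toy model using a local thread pool, not the Spark on Ray library: `map_partition`, `reduce_partition`, `NUM_REDUCERS`, and the length-based partitioner are assumptions for illustration, and broadcast variables are not covered.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

pool = ThreadPoolExecutor(max_workers=4)  # stand-in for a cluster
NUM_REDUCERS = 2


def map_partition(words: list[str]) -> list[dict[str, int]]:
    """Map task: count words, then split the counts into one bucket per reducer."""
    buckets: list[dict[str, int]] = [defaultdict(int) for _ in range(NUM_REDUCERS)]
    for w in words:
        buckets[len(w) % NUM_REDUCERS][w] += 1  # deterministic toy partitioner
    return [dict(b) for b in buckets]


def reduce_partition(pieces: list[dict[str, int]]) -> dict[str, int]:
    """Reduce task: merge the buckets destined for this reducer."""
    merged: dict[str, int] = defaultdict(int)
    for piece in pieces:
        for w, c in piece.items():
            merged[w] += c
    return dict(merged)


partitions = [["ray", "spark", "ray"], ["spark", "data", "ray"]]

# One task per input partition (the transformation step).
map_futures = [pool.submit(map_partition, p) for p in partitions]
map_outputs = [f.result() for f in map_futures]

# Shuffle: reducer r reads bucket r from every map output.
reduce_futures = [
    pool.submit(reduce_partition, [out[r] for out in map_outputs])
    for r in range(NUM_REDUCERS)
]
word_counts = {}
for f in reduce_futures:
    word_counts.update(f.result())
```

Each word lands in exactly one reducer, so the per-reducer results can be combined without conflicts. The intermediate buckets play the role that distributed objects would play on a real cluster.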

To get started with Spark on Ray, refer to the documentation.

