uv + Ray: Pain-Free Python Dependencies in Clusters

By Christina Zhu and Philipp Moritz | February 27, 2025

When we discovered the uv package manager, we realized that a new era of Python package management had arrived. It tackles Python developers’ dependency headaches with consistency and speed, which in turn enables quick development cycles.

Here is a (non-exhaustive) collection of things the folks from astral.sh are getting right that we really like:

They package the whole environment: You don’t need to worry about setting up your own Python distribution which in itself can be quite cumbersome. Instead, let uv manage the Python environment. If you prefer, uv also supports using a system installed Python.

They make uv lightning fast: For starters, it’s written in Rust, just like their very successful Python linter ruff. Designed with speed in mind, uv quickly downloads packages, caches them locally on disk, de-duplicates dependencies between environments and uses hard links to bring them all together.

They respect and improve on existing Python conventions: This includes support for pyproject.toml, outstanding platform independent lockfile support, editable packages, environment variables and lots more – more capabilities get added and bugs fixed all the time.

However, in a distributed context, managing Python dependencies is still challenging. There are many processes to manage on a cluster, and we need to make sure all their dependencies are consistent with each other. If you change a single dependency, you have to propagate that change to every other node, since each worker process needs the same environment for all the code to execute the same way. If there’s a version mismatch somewhere, you could spend hours debugging what went wrong, which is definitely not a fun way to spend your time. The traditional way to solve this problem is to containerize everything, but that makes development iteration much slower: if code or dependencies change, a new version of the container needs to be built and pushed to all nodes. Sometimes the whole cluster needs to be restarted, which makes it even slower.

Wouldn't it be nice if you could just kick off a distributed Python application by running uv run ... script.py and have your dynamically created environment applied to every process in the cluster? Spoiler: That’s exactly how we are going to do it. Let’s look at a simple example using Ray first. Ray is a compute engine designed to make it easy to develop and run distributed Python applications, especially AI applications.

Since this is a new feature in the latest Ray 2.43 release, you currently need to set a feature flag:

    export RAY_RUNTIME_ENV_HOOK=ray._private.runtime_env.uv_runtime_env_hook.hook

We plan to make it default after collecting more feedback from all of you, and adapting the behavior if necessary.

Once you have set up this environment variable, Ray will automatically detect that your script is running inside of uv run and will run all of the worker processes in the same environment. Let’s first look at a very simple example. Create a new file main.py with:

    import emoji
    import ray

    @ray.remote
    def f():
        return emoji.emojize('Python is :thumbs_up:')

    # Execute 1000 copies of f across a cluster.
    print(ray.get([f.remote() for _ in range(1000)]))

and run it with

    uv run --with emoji main.py

This will run 1000 copies of the f function across a number of Python worker processes in a Ray cluster. The emoji dependency, in addition to being available for the main script, will also be available for all worker processes. Also, the source code in the current working directory will be available to all the workers.

That’s it! Using Ray + uv, you can manage Python dependencies for distributed applications the same way you normally do on a single machine with uv.
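If you want to convince yourself that the workers really see the same packages as the driver, a small probe along the following lines can help. This is only a sketch: the helper names (installed_version, mismatched_workers, check_cluster) are made up for illustration and are not part of Ray or uv, and the number of probe tasks is arbitrary.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def mismatched_workers(driver_version, worker_versions):
    """Return the distinct worker-side versions that differ from the driver's."""
    return sorted({str(v) for v in worker_versions if v != driver_version})


def check_cluster(package="emoji", num_probes=100):
    """Hypothetical helper: compare the driver's package version with the workers'."""
    import ray  # imported lazily so the pure helpers above work without Ray

    @ray.remote
    def probe():
        return installed_version(package)

    worker_versions = ray.get([probe.remote() for _ in range(num_probes)])
    return mismatched_workers(installed_version(package), worker_versions)
```

Calling check_cluster() from a script started with uv run --with emoji should return an empty list if every probed worker reports the same emoji version as the driver.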

End-to-end example for using uv

Now, let’s walk through a more detailed example of how to use uv and dive into some of its convenient features. We will create an application that runs batch inference using Ray Data with the new LLM integration. Let’s first create a pyproject.toml with the necessary dependencies:

    [project]
    name = "my_llm_batch_inference"
    version = "0.1"
    dependencies = [
        "numpy",
        "ray[llm]",
    ]

And a script batch_inference.py with the following content:

    import ray
    from ray.data.llm import vLLMEngineProcessorConfig, build_llm_processor
    import numpy as np

    config = vLLMEngineProcessorConfig(
        model="unsloth/Llama-3.1-8B-Instruct",
        engine_kwargs={
            "enable_chunked_prefill": True,
            "max_num_batched_tokens": 4096,
            "max_model_len": 16384,
        },
        concurrency=1,
        batch_size=64,
    )
    processor = build_llm_processor(
        config,
        preprocess=lambda row: dict(
            messages=[
                {"role": "system", "content": "You are a bot that responds with haikus."},
                {"role": "user", "content": row["item"]}
            ],
            sampling_params=dict(
                temperature=0.3,
                max_tokens=250,
            )
        ),
        postprocess=lambda row: dict(
            answer=row["generated_text"],
            **row  # This will return all the original columns in the dataset.
        ),
    )

    ds = ray.data.from_items(["Start of the haiku is: Complete this for me..."])

    ds = processor(ds)
    ds.show(limit=1)

To run the application, put both the pyproject.toml and the batch_inference.py file into the same directory and execute the code with uv run batch_inference.py.

Note that this is a very simple example that runs a small model on a one-item in-memory dataset loaded with ray.data.from_items. You can use any of the data sources that Ray Data supports to load the data, any of the data sinks to write the results, and any of the models that vLLM supports for the inference.

If you want to use a Hugging Face model that is behind a gate, you can create a .env file in your working directory like:

    HF_TOKEN = your_huggingface_token

and run the code with uv run --env-file .env batch_inference.py.

If you want to edit any of the dependencies, you can clone them into a path ./path/foo relative to your working_dir and run uv add --editable ./path/foo. This will append a section in your pyproject.toml that instructs uv to use the checked out (and edited) code instead of the original package.

How does this work under the hood?

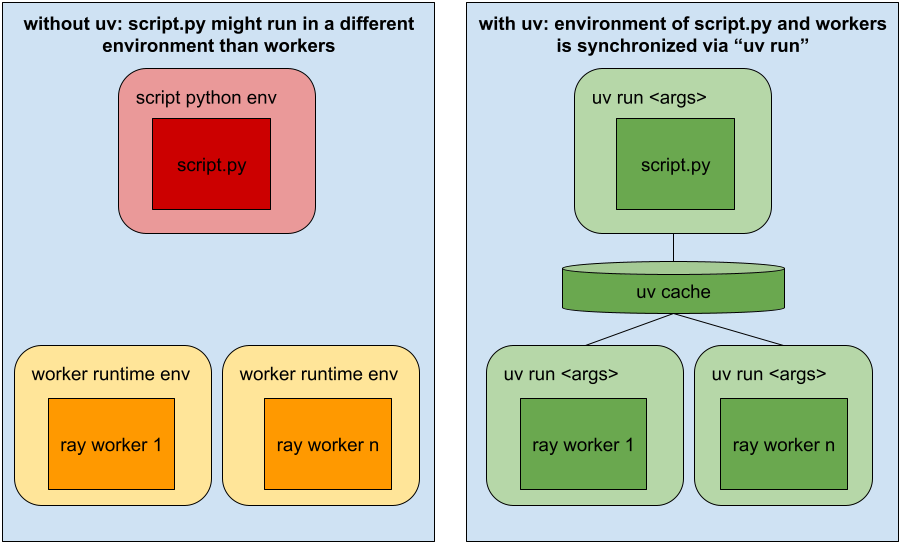

Figure: Without uv, script.py runs in the default Python environment, which might differ from the runtime environment that the workers run in (left). With uv, the environments are synchronized via “uv run”, and the creation of the environments is sped up by uv’s cache.

Under the hood, this integration uses Ray’s runtime environment feature, which allows the environment to be specified in code. We define a new low level runtime environment plugin called py_executable. It allows you to specify the Python executable (including arguments) that Ray workers will be started in. In the case of uv, the py_executable is set to uv run with the same parameters that were used to run the driver. Also, the working_dir runtime environment is used to propagate the working directory of the driver (including the pyproject.toml) to the workers. This allows uv to set up the right dependencies and environment for the workers to run in.
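To make the mechanism above more concrete, here is a minimal sketch of a runtime environment that combines working_dir and py_executable by hand, roughly mirroring what the paragraph above describes for the hook. The helper names uv_runtime_env and init_ray_with_uv are hypothetical and only serve the illustration; the exact values the hook produces may differ.

```python
import shlex


def uv_runtime_env(uv_args=(), working_dir="."):
    """Build a runtime_env dict that starts Ray workers through `uv run`.

    `uv_args` are the extra arguments the driver was started with,
    for example ["--with", "emoji"].
    """
    py_executable = shlex.join(["uv", "run", *uv_args])
    return {"working_dir": working_dir, "py_executable": py_executable}


def init_ray_with_uv(uv_args=()):
    """Hypothetical helper: connect to Ray with the uv-based runtime environment."""
    import ray  # imported lazily so uv_runtime_env can be used without Ray

    return ray.init(runtime_env=uv_runtime_env(uv_args))
```

With the feature flag set, you should not need any of this, since Ray fills in the runtime environment for you. Spelling it out is mainly useful when you want to deviate from what the driver was started with.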

There are some advanced use cases where you might want to use the py_executable mechanism directly in your programs:

Applications with heterogeneous dependencies: Ray supports using a different runtime environment for different tasks or actors. This is useful for deploying different inference engines, models, or microservices in different Ray Serve deployments and also for heterogeneous data pipelines in Ray Data. To implement this, you can specify a different py_executable for each of the different runtime environments and use uv run with a different --project parameter for each.

Customizing the command the worker runs in: On the workers, you might want to customize uv with some special arguments that are not used for the driver. Or, you might want to run processes using poetry run, a build system like bazel, a profiler, or a debugger. In these cases, you can explicitly specify the executable the worker should run in via py_executable. It could even be a shell script that is stored in working_dir if you are trying to wrap multiple processes in more complex ways.
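The heterogeneous case could look roughly like the sketch below, where each stage of a pipeline runs in its own uv project. The stage names, the project paths under ./projects, and the helpers project_runtime_env and run_stage are invented for this example; it also assumes that those project directories are available to the workers, for instance because they live inside the working_dir.

```python
import shlex


def project_runtime_env(project_dir):
    """Runtime environment whose workers start via `uv run --project <dir>`."""
    return {"py_executable": shlex.join(["uv", "run", "--project", project_dir])}


# Hypothetical layout: one uv project (with its own pyproject.toml) per stage.
STAGE_ENVS = {
    "tokenizer": project_runtime_env("./projects/tokenizer"),
    "embedder": project_runtime_env("./projects/embedder"),
}


def run_stage(stage, fn, *args):
    """Run `fn` as a Ray task inside the runtime environment of `stage`."""
    import ray  # imported lazily so the helpers above work without Ray

    remote_fn = ray.remote(fn).options(runtime_env=STAGE_ENVS[stage])
    return ray.get(remote_fn.remote(*args))
```

The same dictionaries could be passed as runtime_env when defining actors, which is where different inference engines or microservices would typically live.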

Using uv in a Ray Job

To use uv with a Ray Job, run the job with:

    ray job submit --runtime-env-json '{"working_dir": ".", "py_executable": "uv run"}' -- uv run main.py

This will make sure both the driver and workers of the job run in the uv environment as specified by your pyproject.toml.
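If you submit jobs from a script rather than by hand, it can be convenient to assemble that command programmatically so the JSON quoting is handled for you. The following is a small sketch using only the Python standard library; the helper names ray_job_submit_command and submit are hypothetical and not part of Ray.

```python
import json
import shlex
import subprocess


def ray_job_submit_command(entrypoint, working_dir=".", py_executable="uv run"):
    """Return the `ray job submit` argument list for a uv-based job."""
    runtime_env = {"working_dir": working_dir, "py_executable": py_executable}
    return [
        "ray", "job", "submit",
        "--runtime-env-json", json.dumps(runtime_env),
        "--", *shlex.split(entrypoint),
    ]


def submit(entrypoint="uv run main.py"):
    """Hypothetical helper: run the command (requires the ray CLI on PATH)."""
    return subprocess.run(ray_job_submit_command(entrypoint), check=True)


# Show the shell command that would be executed, without running it.
print(shlex.join(ray_job_submit_command("uv run main.py")))
```

Passing the arguments as a list to subprocess.run avoids a second layer of shell quoting around the JSON document.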

Using uv in a Ray Service

To use uv with Ray Serve, set the RAY_RUNTIME_ENV_HOOK like above and run the service with uv run serve run app:main.

Best Practices and Tips

Use uv lock to generate a lockfile and make sure all your dependencies are frozen, so things won’t change in uncontrolled ways if a new version of a package gets released.

If you have a requirements.txt file and don’t want to use the --with-requirements flag, you can use uv add -r requirements.txt to add the dependencies to your pyproject.toml and then use that with uv run.

If needed, you can use the system Python via UV_SYSTEM_PYTHON=1.

If your pyproject.toml is in some subdirectory, you can use uv run --project to use it from there.

If you use uv run and want to reset the working directory to something that is not the current working directory, use the --directory flag. The Ray uv integration will make sure your working_dir will be set accordingly.

Highlights of Our uv x Ray Open Source Efforts

As enthusiastic supporters of a new open source project, we’ve been very lucky that some Ray users picked up this feature before it was even released and suggested improvements. Thank you for that! We hope to get a lot more feedback now that the feature has been released with Ray 2.43.

Many thanks to the uv maintainers for discussing the integration with us, and quickly fixing a bug that occurred when uv environments were set up concurrently in Ray.

Conclusion

Combining uv with Ray significantly improves Python dependency management for distributed systems by pairing uv's fast environment setup with Ray's scalable programming model, ensuring consistent worker execution across clusters. We strive to make Ray + uv the best solution for running code in a distributed fashion, and would love your feedback on GitHub or on Discourse.

That being said, there are situations where uv is not a feasible solution – you might work on an established project stitching together different package managers, repositories, and binaries. Or, you are in an enterprise environment with very specific requirements. At Anyscale, we are working on a dependency management solution based on our OCI compatible fast container runtime that allows for very fast development iterations and also supports a wide range of dependency needs. If you're interested in this, give the Anyscale Platform a spin.