Ray Summit 2022 stories - ML Platforms

The first post in our Ray Summit stories series highlighted how companies are using Ray to train their large language models. In this second post, we highlight a few talks on how companies are scaling their ML workloads as part of their ML platforms. See how Uber, Spotify, and Shopify are building their next-generation platforms on top of Ray.

Uber - Large-scale deep learning training and tuning with Ray

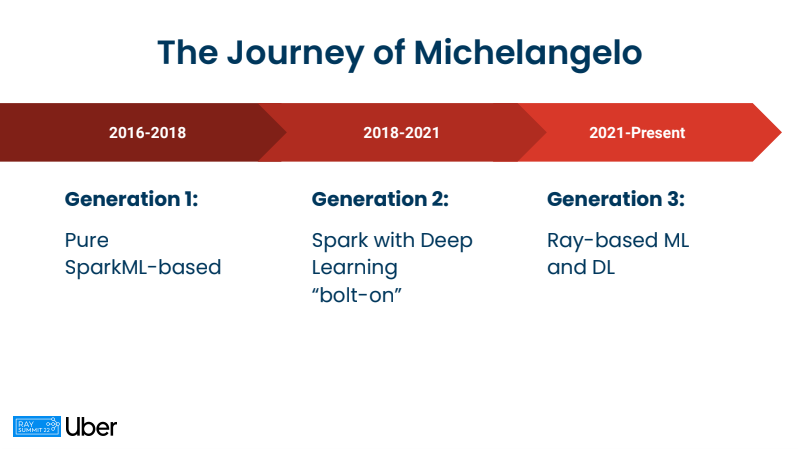

Michelangelo, Uber’s ML platform, began in 2016 as a monolithic Spark-based application. Two years later, it evolved to “bolt on” Horovod to accommodate deep learning use cases. Its latest generation is built on top of Ray to provide a unified, scalable ML compute platform with better flexibility and cost performance.

In this generation, Uber’s ML platform team is refactoring its end-to-end ML pipelines onto Ray and phasing out its Spark estimators and MLlib dependencies.

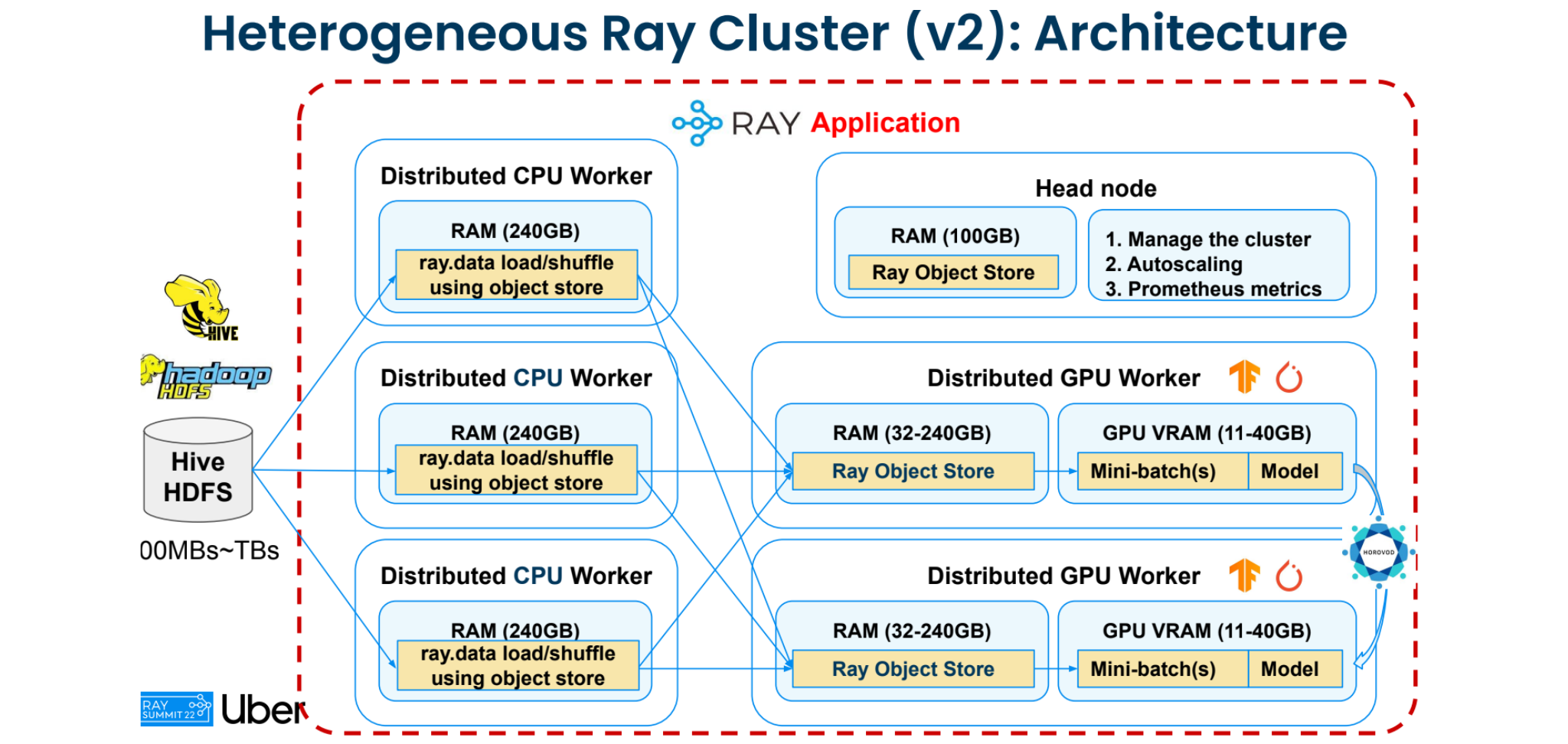

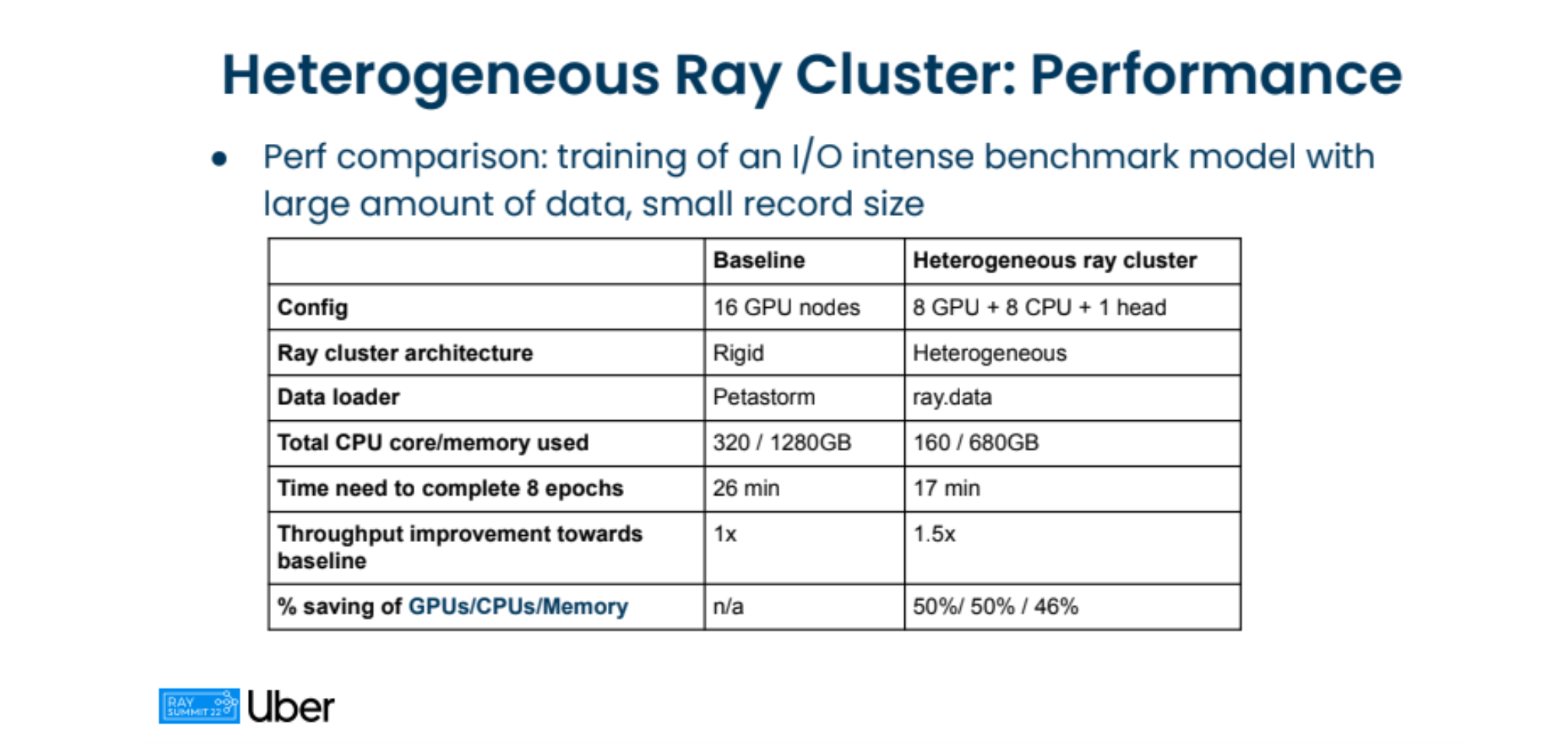

By running large-scale deep learning on a heterogeneous (CPU + GPU) Ray cluster, the Uber team achieved 50% savings on the ML compute portion of a large-scale deep learning training job.

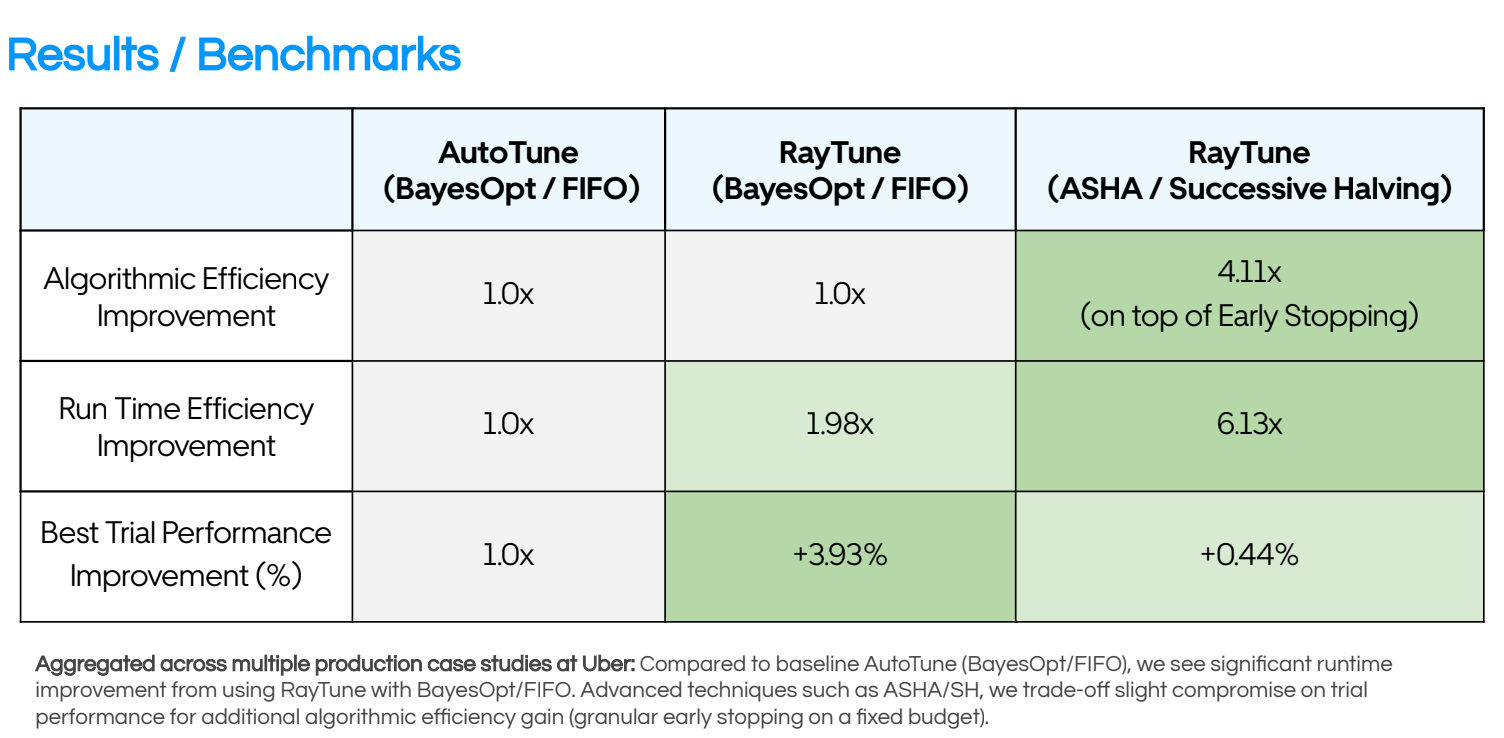

Uber’s internal Autotune service now uses Ray Tune, and the team has seen up to a 4x speedup on its hyperparameter tuning jobs.

Check out the full talk:

How Spotify sped up ML research and prototyping with Ray

The Spotify ML platform team’s mission is to democratize its ML platform so that Spotify employees from all backgrounds, such as engineers, data scientists, and researchers, can leverage their unique perspectives, skills, and expertise to advance ML and spur innovation at Spotify.

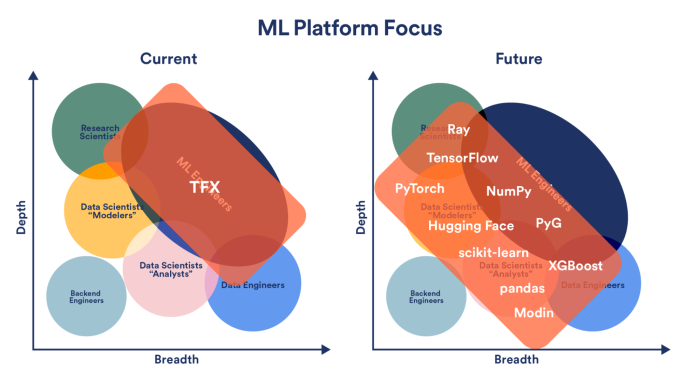

The 2-3 year vision for the ML platform is to become more accessible, flexible, framework-agnostic, and Python-native.

The figure above illustrates the larger ML practitioner umbrella at Spotify which encompasses the following roles: research scientists, data scientists, data engineers, and ML engineers focused on ML-related tasks.

Spotify selected Ray because “it is tailored for ML development with its rich ML ecosystem integration. It easily scales compute-heavy workloads such as feature preprocessing, deep learning, hyperparameter tuning, and batch predictions — all with minimal code changes.”

The team found Ray simpler and more intuitive than TensorFlow Extended (TFX), the framework initially used for their ML platform, and Ray removed the need to learn other frameworks, APIs, or Kubernetes. The ML platform team selected a few use cases and ran proofs of concept (POCs) on Ray to solve various Spotify problems, such as training graph neural network (GNN) embeddings for podcast recommendations and scaling reinforcement learning (RL) for Radio.

For example, the GNN POC went from ideation to implementation in 2.5 months and was productionized within just two weeks. For the RL use case, Ray removed performance bottlenecks in RL agent training and laid the foundation for productionizing offline RL workloads.

Check out the full talk below and their recent blog post for more details.

The Magic of Merlin - Shopify's new machine learning platform

The Shopify ML platform team went through multiple iterations: it initially used an in-house PySpark solution, then prototyped on Google AI Platform, but ultimately chose to build on top of open source projects such as Kubernetes and Ray. The ML platform goals centered on three tenets:

Scalability - Robust infrastructure that can scale machine learning workflows

Fast Iterations - Tools that reduce friction and increase productivity for data scientists and machine learning engineers by minimizing the gap between prototyping and production

Flexibility - Ability for users to use any libraries or packages they need for their models

The ML platform team learned the importance of building around real use cases, which allowed them to scale up with the largest amounts of data. The team also learned that building a platform is quite different from maintaining one, and worked out how to migrate users to the new platform effectively by setting goals that enabled a smooth transition. One key finding was that a good user experience is essential to success.

Watch the full talk below:

These talks are just a sample of how companies are scaling their ML workloads and delivering ML-driven innovations with Ray. For more information on how Ray and Anyscale can benefit your ML development and deployment, check out our video and blog post resources.