Ray Data GA

We're excited to announce the general availability (GA) of Ray Data.

Ray Data is a scalable data processing library for AI workloads. It provides flexible and performant APIs for scaling unstructured data preprocessing, ingest for ML training, and batch inference.

With this announcement, Ray Data becomes generally available, with stability improvements to streaming execution and to reading and writing data, better task concurrency control, and improved debuggability through the dashboard, logging, and metrics visualization.

Motivation

Ray Data is built to target data-centric AI workloads. In particular, Ray Data focuses on the following three categories of workloads:

Unstructured Data Preprocessing: Handling large numerical datasets of different modalities, such as images, sensor data, point clouds, audio, and video. For example, preprocessing raw audio files for voice recognition, resizing and augmenting images for a computer vision model, or cleaning large datasets of videos for an autonomous driving object recognition model.

Batch Inference: Running deep learning models on large volumes of data at scale in an offline fashion. For example, applying an LLM to millions of customer reviews for sentiment analysis, or detecting objects in a large database of driving footage.

Ingest for ML Training: Streaming and preprocessing data on-the-fly to be fed into GPUs where training happens.

There are many distributed data processing engines, such as Spark, Dask, and Beam, but they focus primarily on traditional structured data workloads.

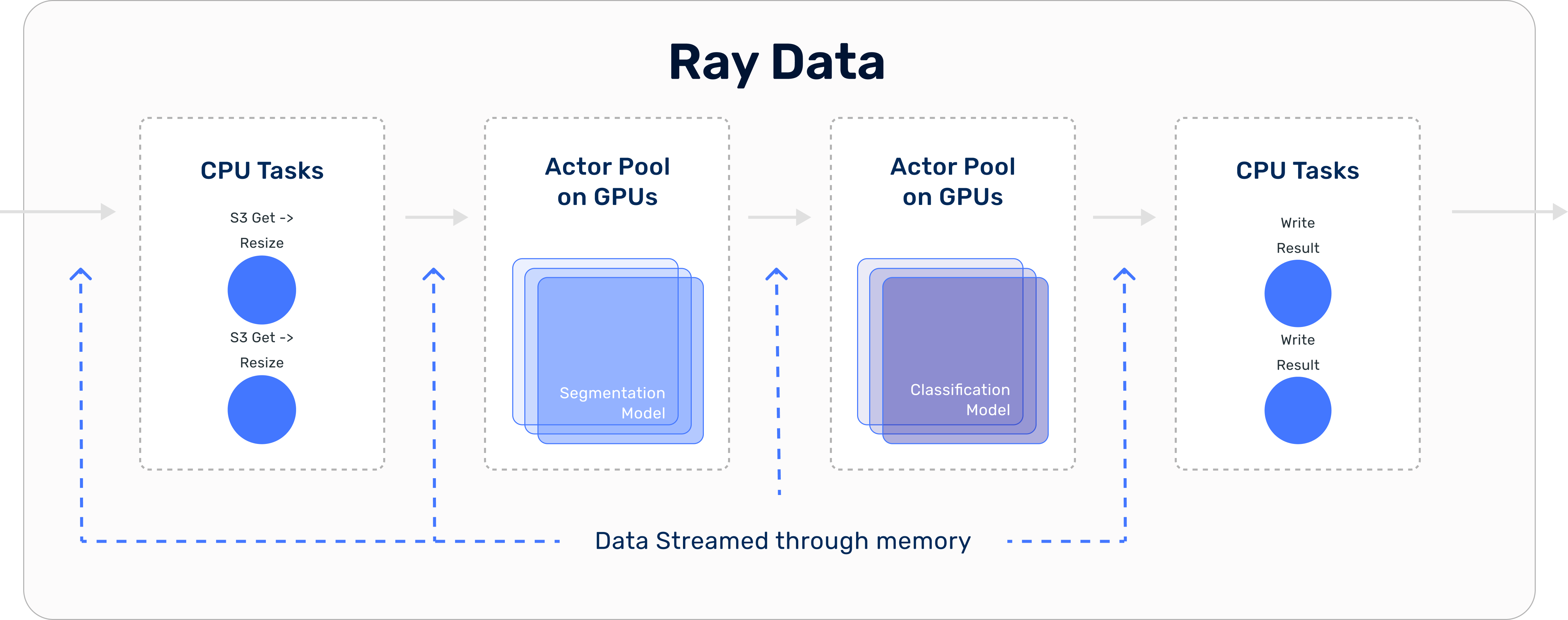

In many data-intensive AI workloads, the underlying system needs to efficiently utilize both CPUs and GPUs. For example, in an offline image batch inference workload, the GPU is needed to perform the actual inference, while the CPU can be used to perform some preprocessing steps such as image resizing or rotation, or simply to perform I/O bound operations such as reading from cloud storage.

Existing data processing systems did not do well at integrating seamlessly with the ecosystem while also executing efficiently across heterogeneous resources. This gap motivated the development of Ray Data.

Ray Data

Ray Data is a distributed data system for AI workloads. It is built to use mixed CPU and GPU resources efficiently and integrates seamlessly with both the data and AI ecosystems, while offering the fault tolerance and resource multiplexing properties of traditional batch processing systems.

Heterogeneous Resource Support: As mentioned above, AI workloads often require efficiently utilizing GPUs and CPUs, which requires execution to be pipelined across different tasks (stages). Ray Data is built with a streaming execution model that pipelines data across different tasks to maintain high resource utilization while minimizing job completion time. As a result, Ray Data can be up to 3x better than Spark on batch inference workloads.
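To make the pipelining idea concrete, here is a toy sketch in plain Python. It does not use Ray Data's API and is not how Ray Data is implemented; the stage names `read`, `preprocess`, and `infer` are hypothetical. Generators stand in for streaming operators, so each block flows through every stage before the next block is read, instead of each stage materializing its full output first.

```python
from typing import Iterator, List

events: List[str] = []  # records the order in which stages touch each block


def read(num_blocks: int) -> Iterator[int]:
    """Hypothetical I/O stage: yields one block at a time."""
    for block in range(num_blocks):
        events.append(f"read:{block}")
        yield block


def preprocess(blocks: Iterator[int]) -> Iterator[int]:
    """Hypothetical CPU stage, e.g. resizing images."""
    for block in blocks:
        events.append(f"preprocess:{block}")
        yield block * 2


def infer(blocks: Iterator[int]) -> Iterator[int]:
    """Hypothetical GPU stage, e.g. a model forward pass."""
    for block in blocks:
        events.append(f"infer:{block}")
        yield block + 1


def run_streaming(num_blocks: int) -> List[int]:
    # Generators are lazy: each block passes through every stage
    # before the next block is read.
    return list(infer(preprocess(read(num_blocks))))


results = run_streaming(3)
```

This single-threaded sketch only shows the interleaved ordering of work. A real streaming engine would additionally run the stages concurrently on separate CPU and GPU workers.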

Ray Data also has support for stateful operators (actors), which amortizes the overhead of instantiating expensive objects on the GPU like large neural networks. These operators can also themselves be distributed, which is critical in use cases such as batch inference for large language models.
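The amortization argument can be illustrated with a small plain-Python sketch. `FakeModel`, `stateless_task`, and `StatefulWorker` are hypothetical names invented for this example, and the counter stands in for the cost of loading a large network onto a GPU.

```python
from typing import List


class FakeModel:
    """Stand-in for an expensive-to-load model (hypothetical)."""

    load_count = 0  # class-level counter of how many times a model was "loaded"

    def __init__(self) -> None:
        FakeModel.load_count += 1

    def predict(self, batch: List[int]) -> List[int]:
        return [x * 10 for x in batch]


def stateless_task(batch: List[int]) -> List[int]:
    # A stateless task has nowhere to keep the model, so it reloads it per batch.
    return FakeModel().predict(batch)


class StatefulWorker:
    """Actor-like worker: loads the model once and reuses it for every batch."""

    def __init__(self) -> None:
        self.model = FakeModel()

    def __call__(self, batch: List[int]) -> List[int]:
        return self.model.predict(batch)


batches = [[1, 2], [3, 4], [5, 6]]

FakeModel.load_count = 0
stateless_out = [stateless_task(b) for b in batches]
stateless_loads = FakeModel.load_count  # one load per batch

FakeModel.load_count = 0
worker = StatefulWorker()
stateful_out = [worker(b) for b in batches]
stateful_loads = FakeModel.load_count  # a single load in total
```

Both variants produce the same predictions, but the stateful worker pays the load cost once rather than once per batch. In Ray Data, the analogous pattern is passing a callable class to `map_batches`.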

Ecosystem: Ray Data also integrates with the most common tools across the data and AI ecosystems:

ML frameworks: PyTorch, TensorFlow, Hugging Face

File formats: Parquet, image, JSON, CSV, etc.

Data sources: BigQuery, Databricks table, Pinecone, SQL databases.

Cloud storage: S3, GCS

Scalability: Ray Data is built on Ray Core with scalability and reliability in mind, scaling up to 1000 GPU nodes. Ray Data’s autoscaling can scale worker nodes down to zero and back up according to the resource demands of your pipeline. Ray Data also provides fault tolerance: failed tasks are retried automatically without impeding the completion of the program.

On Anyscale, Ray Data can also checkpoint and continue progress from within stages, which is useful for long running batch inference jobs.
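As a rough illustration of the automatic task retries mentioned under Scalability, the following plain-Python sketch retries a failing task a bounded number of times. The helper `run_with_retries`, its `max_retries` parameter, and `flaky_task` are hypothetical and are not Ray Data APIs; Ray handles retries internally.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def run_with_retries(task: Callable[[], T], max_retries: int = 3) -> T:
    """Run `task`, retrying up to `max_retries` times after a failure."""
    attempt = 0
    while True:
        try:
            return task()
        except RuntimeError:
            attempt += 1
            if attempt > max_retries:
                raise  # give up once the retry budget is exhausted


attempts: List[int] = []  # one entry per invocation of the task


def flaky_task() -> str:
    """Hypothetical task that fails twice, then succeeds."""
    attempts.append(1)
    if len(attempts) < 3:
        raise RuntimeError("simulated worker failure")
    return "ok"


result = run_with_retries(flaky_task)
```

The caller only sees the final result; the two simulated failures are absorbed by the retry loop, which is the property that lets a long-running job finish despite transient task failures.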

In comparison to other systems, Ray Data offers the following:

| | Ray Data | Spark | Dask |
|---|---|---|---|
| Heterogeneous Resource Support | Yes | Limited | Yes |
| Streaming Execution | Yes | Limited | No |
| Stateful Execution (Actors) | Yes | No | Limited |
| Python-native | Yes | No | Yes |
| SQL / Dataframe API | No | Yes | Yes |

What’s New with Ray Data

We’re excited to announce GA for Ray Data. Major features that come with Ray Data GA include:

Stability Improvements: In Ray 2.5, streaming execution was turned on by default. Over the last year, we’ve focused on new functionality and improvements that make streaming execution more stable. These include improved output buffer management, backpressure between stages, resource reservations per operator, and better runtime resource estimation.

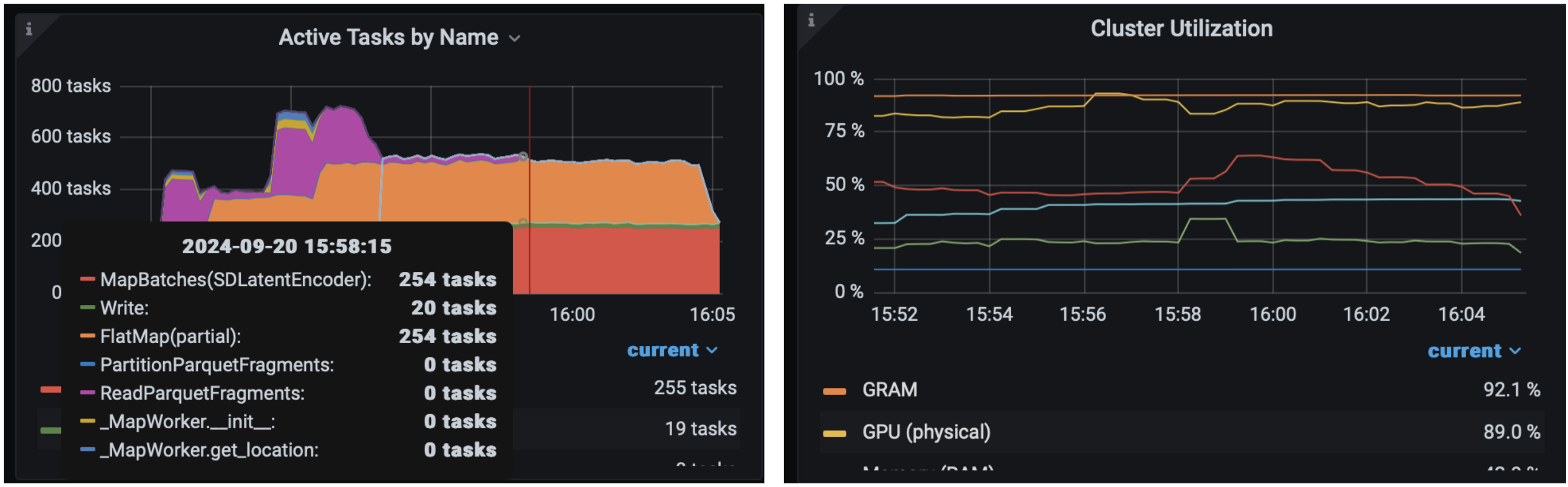
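Backpressure between stages can be pictured with a bounded buffer. The sketch below uses Python's standard `queue.Queue` and two threads; it is a toy model, not Ray Data's scheduler, and `BUFFER_SIZE`, `producer`, and `consumer` are hypothetical names chosen for illustration.

```python
import queue
import threading
from typing import List

BUFFER_SIZE = 2  # hypothetical cap on blocks buffered between the two stages
NUM_BLOCKS = 10

buffer = queue.Queue(maxsize=BUFFER_SIZE)
observed_sizes: List[int] = []  # buffer occupancy seen after each put
consumed: List[int] = []


def producer() -> None:
    """Upstream stage: put() blocks whenever the buffer is full."""
    for block in range(NUM_BLOCKS):
        buffer.put(block)  # backpressure: waits for a free slot
        observed_sizes.append(buffer.qsize())
    buffer.put(None)  # sentinel: no more blocks


def consumer() -> None:
    """Downstream stage that drains the buffer."""
    while True:
        block = buffer.get()
        if block is None:
            break
        consumed.append(block)


threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
for t in threads:
    t.start()
for t in threads:
    t.join()

max_buffered = max(observed_sizes)
```

Because the upstream stage stalls when the buffer is full, the number of in-flight blocks stays bounded by `BUFFER_SIZE` regardless of how fast the producer runs. That bound is what keeps memory use predictable in a streaming pipeline.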

Observability: In Ray 2.9, we introduced a Ray Data dashboard page for monitoring your Ray Data workloads. This allows you to visually break down your Ray Data job into different stages to diagnose and isolate issues. Further, the metrics page in the dashboard now also has a Ray Data section that displays various metrics and stats, such as disk spilling and memory allocated in the object store. The Ray Data dashboard also now surfaces per-op metrics, such as number of running/finished/failed tasks, which enables finer-grained debugging.

We’ve also recently invested in improving the console output progress bar, so that you can visualize your workload in your development environment.

Take a look at the Ray Data dashboard page: Link.

Integrations: Over the past year, the Ray Data community has contributed a large number of connectors for different data sources. These connectors allow more efficient integration of Ray Data into existing data pipelines. We thank the community for these contributions:

| | Docs | Contributed by | Contributor |
|---|---|---|---|
| Iceberg | | Hinge | |
| Delta Sharing | | Coinbase | |
| Avro | | | |
| Lance | | Anyscale | |
| Snowflake | [Link] (Proprietary) | Anyscale | Anyscale Team |

Conclusion

Try out Ray Data today on Anyscale. In addition to getting access to RayTurbo, Anyscale's optimized Ray runtime engine, we've extended Ray Data with more powerful capabilities, including:

Improved autoscaling

Improved spot instance support

Native readers for multi-modal data types

Read operation optimizations which can lead to up to 5x improvements on read-intensive workloads

Job checkpoint and resume for better cost efficiency

Learn more by checking out the Anyscale platform.