Ray 2.2: Improved developer experience, performance and stability

We’re excited to share recent developments for Ray in the 2.2 release: enhanced observability, improved performance for data-intensive AI applications, increased stability, and better UX for RLlib.

As more Ray users move to production, the Ray community has voiced new requirements for performance, robustness, and stability. This release addresses those requirements and continues the focus we have held since announcing Ray 2.0: making Ray easy to use, performant, stable, and unified across its machine learning libraries with Ray AIR.*

Improved observability across the Ray ecosystem

Ray Jobs API is now GA. The Ray Jobs API allows you to submit locally developed applications to a remote Ray Cluster for execution and aims to simplify the experience of packaging, deploying, and managing a Ray application.
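As a minimal sketch of the workflow described above, the snippet below submits a local script to a remote cluster through the Python SDK's `JobSubmissionClient`. The helper names `build_job_request` and `submit`, the script name, and the dashboard address are illustrative assumptions, not something prescribed by the release.

```python
from typing import Any, Dict, List, Optional


def build_job_request(
    script: str,
    working_dir: str = "./",
    pip: Optional[List[str]] = None,
) -> Dict[str, Any]:
    """Assemble keyword arguments for JobSubmissionClient.submit_job()."""
    # working_dir is uploaded to the cluster so the script and its local
    # modules are available to the job.
    runtime_env: Dict[str, Any] = {"working_dir": working_dir}
    if pip:
        runtime_env["pip"] = list(pip)
    return {"entrypoint": f"python {script}", "runtime_env": runtime_env}


def submit(address: str, request: Dict[str, Any]) -> str:
    """Submit the job and return its ID; needs Ray and a running cluster."""
    # Imported lazily so build_job_request() works without Ray installed.
    from ray.job_submission import JobSubmissionClient

    client = JobSubmissionClient(address)
    return client.submit_job(**request)
```

Assuming a cluster whose dashboard is reachable at `http://127.0.0.1:8265`, calling `submit("http://127.0.0.1:8265", build_job_request("my_script.py"))` would return the ID of the submitted job.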

To observe and gauge your Ray job’s resource usage during its execution, we've added a number of features to the Ray Dashboard, including the ability to visualize CPU flame graphs of your Ray worker processes and additional metrics for varying memory usage.

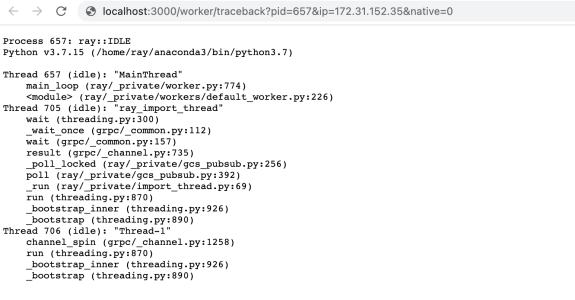

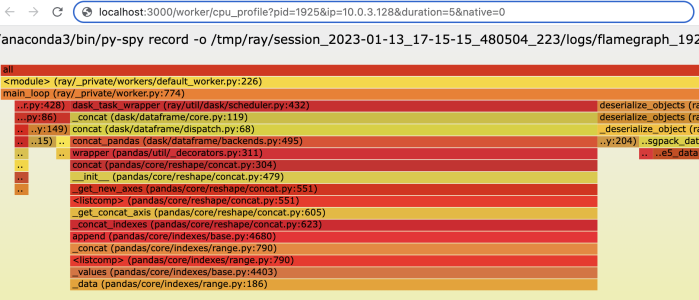

View the stack trace or CPU flame graph of a Ray worker process from the dashboard.

A generated stack trace in the browser.

A generated CPU flame graph in the browser.

Across Ray’s AI libraries, more and more users are pushing the boundaries of reliability. RLlib’s Algorithms now feature flexible fault tolerance for simulation/rollout workers and evaluation workers: when worker nodes are pre-empted and removed, training continues to make progress and recovers as soon as the nodes are restored. In Ray Tune, we have made a number of fault tolerance improvements, including better checkpoint syncing and retries.
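The behavior described for rollout workers can be illustrated with a toy loop. This is a pure-Python sketch of the idea (keep training on the healthy workers, pick restored workers back up), not RLlib's implementation. The `RolloutWorker` class, the fixed batch size, and the `outages` schedule are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class RolloutWorker:
    worker_id: int
    healthy: bool = True

    def sample(self) -> int:
        """Return the number of environment steps collected this iteration."""
        if not self.healthy:
            raise RuntimeError(f"worker {self.worker_id} is unavailable")
        return 100  # hypothetical fixed batch size per worker


def train(
    workers: List[RolloutWorker],
    outages: Dict[int, Set[int]],
    iterations: int,
) -> List[int]:
    """Run the loop; outages maps an iteration to the pre-empted worker IDs."""
    steps_per_iteration = []
    for it in range(iterations):
        down = outages.get(it, set())
        for w in workers:
            # A worker is usable again as soon as its node is restored.
            w.healthy = w.worker_id not in down
        collected = 0
        for w in workers:
            try:
                collected += w.sample()
            except RuntimeError:
                # Skip the failed worker instead of aborting the whole run.
                continue
        steps_per_iteration.append(collected)
    return steps_per_iteration
```

In this sketch an iteration with pre-empted workers simply collects fewer steps, and throughput returns to normal once the workers come back.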

Improving performance for data-intensive AI applications

In Ray 2.0, we released the Ray AI Runtime (AIR) in beta. Since then, the Ray team has delivered a variety of performance and usability improvements, specifically targeting data-intensive ML applications.

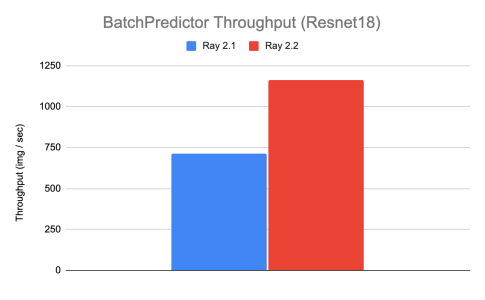

We’ve received feedback from users about improving the performance for Batch Predictors. In this release, we’ve focused efforts on reducing latency and memory footprint for batch prediction for deep learning models by avoiding unnecessary data conversions [link to PR here]. Ray 2.2 offers nearly 50% improved throughput performance and 100x reduced GPU memory footprint for batch inference on image-based workloads.

Ray 2.2 offers nearly 50% improved throughput performance for batch inference on image-based workloads.

Additionally, the Ray team has strengthened support for the ML data ecosystem, including expanded version compatibility with Apache Arrow, full TensorFlow TFRecord reading and writing support in Ray Data, and new connector methods for TF and Torch datasets (from_tf, from_torch).

Stability improvements for out-of-memory crashes

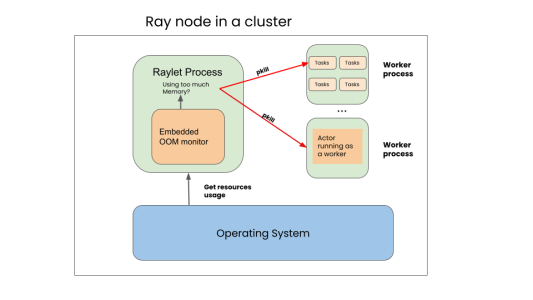

Out-of-memory (OOM) errors are harmful: they not only disrupt long-running Ray applications but also degrade other services in the Ray cluster. In this release, we’ve enabled the Ray OOM Monitor by default. This component periodically checks memory usage, which includes the worker heap, the object store, and the raylet, as described in our memory management documentation. The monitor uses memory usage statistics to defensively free up memory and prevent Ray from completely crashing.
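The monitor's core decision can be sketched as a threshold check over per-node memory usage. The code below is a simplified illustration, not Ray's implementation. The `Worker` record, the 0.95 threshold value, and the choice to evict the most recently started worker are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Worker:
    pid: int
    started_at: float  # seconds since the node started
    rss_bytes: int     # resident memory of the worker process


def pick_worker_to_kill(
    workers: List[Worker],
    other_used_bytes: int,
    node_total_bytes: int,
    usage_threshold: float = 0.95,
) -> Optional[Worker]:
    """Return a worker to terminate if node memory usage exceeds the threshold.

    other_used_bytes stands in for non-worker usage such as the object
    store and the raylet.
    """
    used = other_used_bytes + sum(w.rss_bytes for w in workers)
    if not workers or used / node_total_bytes <= usage_threshold:
        return None
    # Assumed policy: evict the most recently started worker, since it has
    # the least work to lose if its task is retried.
    return max(workers, key=lambda w: w.started_at)
```

Calling this function on every refresh interval and terminating the returned worker frees memory before the operating system's own OOM killer has to step in.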

For data-intensive workloads, numerous users have reported that when files are too large, Ray Data can run out of memory or perform poorly. We’re enabling dynamic block splitting by default, which addresses these issues by avoiding holding too much data in memory at once.
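To make the idea concrete, here is a toy version of block splitting: instead of materializing one large file as a single block, the reader emits a new block whenever the accumulated size reaches a cap. This is a sketch of the general technique, not Ray Data's code. The function name and the `target_max_block_bytes` parameter are hypothetical.

```python
from typing import Iterable, Iterator, List


def split_into_blocks(
    rows: Iterable[bytes],
    target_max_block_bytes: int,
) -> Iterator[List[bytes]]:
    """Yield blocks of rows, closing each block once it reaches the size cap."""
    block: List[bytes] = []
    block_bytes = 0
    for row in rows:
        block.append(row)
        block_bytes += len(row)
        if block_bytes >= target_max_block_bytes:
            # Emit the block now so it can be consumed before the rest of
            # the file is read.
            yield block
            block, block_bytes = [], 0
    if block:
        # Emit whatever remains as a final, smaller block.
        yield block
```

Because the function is a generator, only one block's worth of rows is held by the reader at any time, regardless of how large the input file is.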

RLlib API enhancements

Last but not least, the RLlib team has been heads down on making some fundamental UX improvements for RLlib.

We’ve introduced an enhanced RLlib command line interface (CLI) with support for automatically downloading example configuration files, Python-based config files, better interoperability between training and evaluation runs, and more. Check out the CLI documentation for more details.

We’ve also seen quite a few user reports about RLlib’s checkpoint format. We’ve pushed changes to make Algorithm and Policy checkpoints cohesive and transparent. Every checkpoint is now a directory rather than a single pickle file, and you can use the from_checkpoint() utility method to instantiate objects directly from a checkpoint directory. Read more about these changes here!
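As a hedged sketch of how the directory-based format might be used, the helper below restores an Algorithm with `Algorithm.from_checkpoint()`. The wrapper function `restore_algorithm` and its up-front directory check are illustrative additions, not part of RLlib.

```python
import os


def restore_algorithm(checkpoint_dir: str):
    """Rebuild an RLlib Algorithm from a directory-style checkpoint."""
    if not os.path.isdir(checkpoint_dir):
        # Checkpoints are directories now, so a file path or a missing
        # path is rejected before RLlib is touched.
        raise NotADirectoryError(
            f"expected a checkpoint directory, got: {checkpoint_dir!r}"
        )
    # Imported lazily so the path check above runs without RLlib installed.
    from ray.rllib.algorithms.algorithm import Algorithm

    return Algorithm.from_checkpoint(checkpoint_dir)
```

The same pattern should apply to a single policy through `Policy.from_checkpoint()`.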

Concluding Thoughts

This is not the end! We have a ton of exciting improvements planned for upcoming Ray releases, with a focus on bolstering stability, improving performance, extending integration with the larger Python and ML ecosystem, and offering observability into Ray jobs and clusters, so please let us know if you have any questions or feedback.

We’re always interested in hearing from you, so feel free to reach out to us on GitHub or Discourse.

Finally, we have our Ray Summit 2023 coming up later in the year. If you have a Ray story or use case to share with the global Ray Community, we are accepting proposals for speakers!

*Update 9/15/2023: We are sunsetting the "Ray AIR" concept and namespace starting with Ray 2.7. The changes follow the proposal outlined in this REP.