Now Available: The LLM Router Template

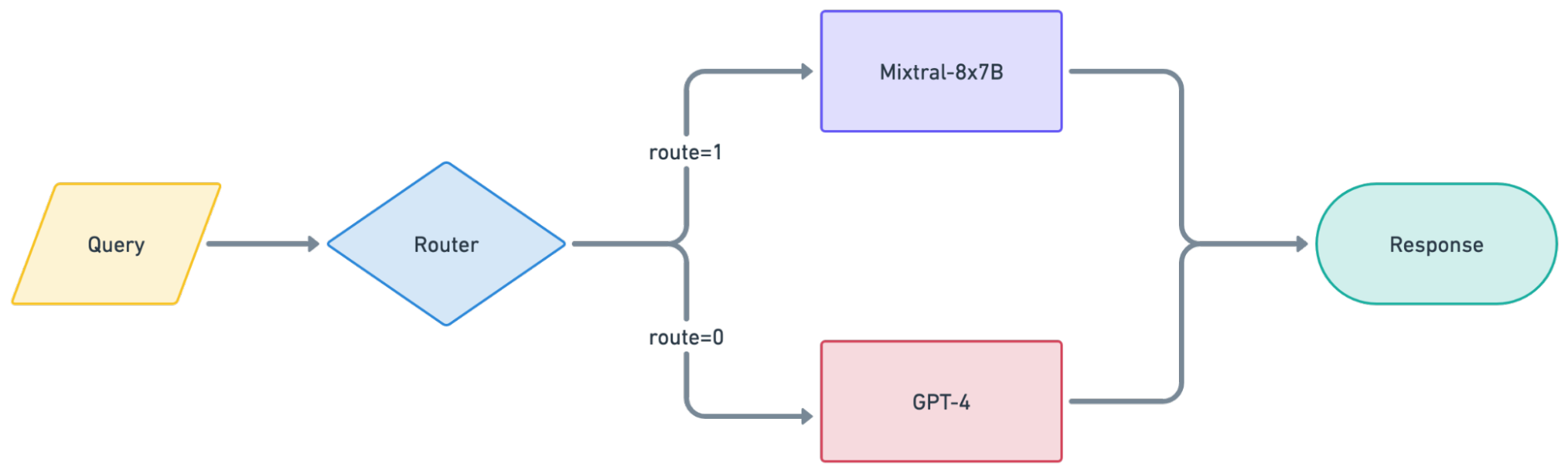

We recently collaborated with the LMSys team to develop an open-source LLM Router that delivers high-quality LLM applications at half the cost by dynamically selecting between high-performance proprietary LLMs and cost-effective open-source models.

You can read more about our work here: https://www.anyscale.com/blog/building-an-llm-router-for-high-quality-and-cost-effective-responses

Some highlights:

The LLM classifier directs "simple" queries to Mixtral-8x7B (or other models), maintaining high overall response quality (e.g., an average score of 4.8/5) while significantly reducing costs (e.g., by 50%).
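To make the routing idea concrete, here is a minimal sketch of threshold-based routing. It is not the template's actual API: the `route` function, the model name strings, the `threshold` default, and the `toy_score` heuristic are all hypothetical stand-ins for a trained classifier that estimates how likely the cheaper model is to answer a query well.

```python
from typing import Callable

# Hypothetical model identifiers, used only for illustration.
STRONG_MODEL = "gpt-4"
WEAK_MODEL = "mixtral-8x7b"


def route(query: str, score_fn: Callable[[str], float], threshold: float = 0.5) -> str:
    """Pick a model for a query.

    score_fn is assumed to return a value in [0, 1] estimating how likely the
    cheaper model is to give a high-quality answer. Queries scoring at or above
    the threshold go to the cheaper model; the rest go to the stronger one.
    """
    score = score_fn(query)
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    return WEAK_MODEL if score >= threshold else STRONG_MODEL


def toy_score(query: str) -> float:
    """Placeholder scorer (an assumption, not a trained classifier).

    Treats shorter queries as simpler: the score falls linearly with word count
    and reaches 0 at 50 words.
    """
    words = len(query.split())
    return max(0.0, 1.0 - words / 50.0)


if __name__ == "__main__":
    for q in ["What is 2+2?", "explain " * 60]:
        print(route(q, toy_score))
```

Raising the threshold sends more traffic to the stronger model (higher quality, higher cost); lowering it does the opposite. In practice the scorer would be the trained router, not a word-count heuristic.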

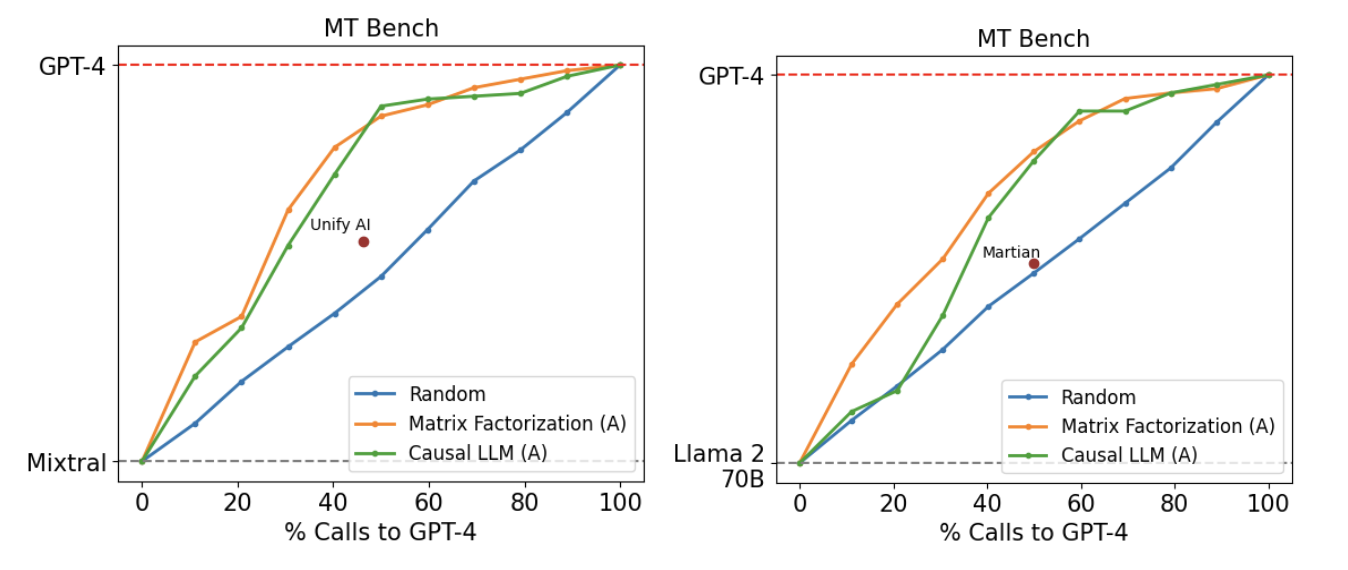

The Anyscale and LMSys LLM Router sets the standard for quality and cost savings. Using human preference data and LLM-as-a-judge for data augmentation, the Anyscale LLM Router was evaluated on MTBench and achieved higher quality with lower costs (i.e., fewer calls to GPT-4) compared to the random baseline and public LLM routing systems from Unify AI and Martian. For more details on these results and additional ones, refer to our paper.
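Since cost here is driven by how many calls go to GPT-4, the savings can be estimated with simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the per-query costs in the example are placeholder numbers, not real pricing or results from our evaluation.

```python
def blended_cost(fraction_to_strong: float, strong_cost: float, weak_cost: float) -> float:
    """Average cost per query when a fraction of queries goes to the strong model."""
    if not 0.0 <= fraction_to_strong <= 1.0:
        raise ValueError("fraction_to_strong must be in [0, 1]")
    return fraction_to_strong * strong_cost + (1.0 - fraction_to_strong) * weak_cost


def savings_vs_strong_only(fraction_to_strong: float, strong_cost: float, weak_cost: float) -> float:
    """Fractional savings relative to sending every query to the strong model."""
    if strong_cost <= 0:
        raise ValueError("strong_cost must be positive")
    return 1.0 - blended_cost(fraction_to_strong, strong_cost, weak_cost) / strong_cost


if __name__ == "__main__":
    # Placeholder per-query costs in arbitrary units (assumptions, not real prices).
    strong, weak = 10.0, 1.0
    for fraction in (1.0, 0.5, 0.25):
        saved = savings_vs_strong_only(fraction, strong, weak)
        print(f"{fraction:.0%} of calls to strong model: {saved:.0%} saved")
```

The fewer queries the router sends to the stronger model, and the larger the price gap between the two models, the larger the savings.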

Get Started with the LLM Router Template Today

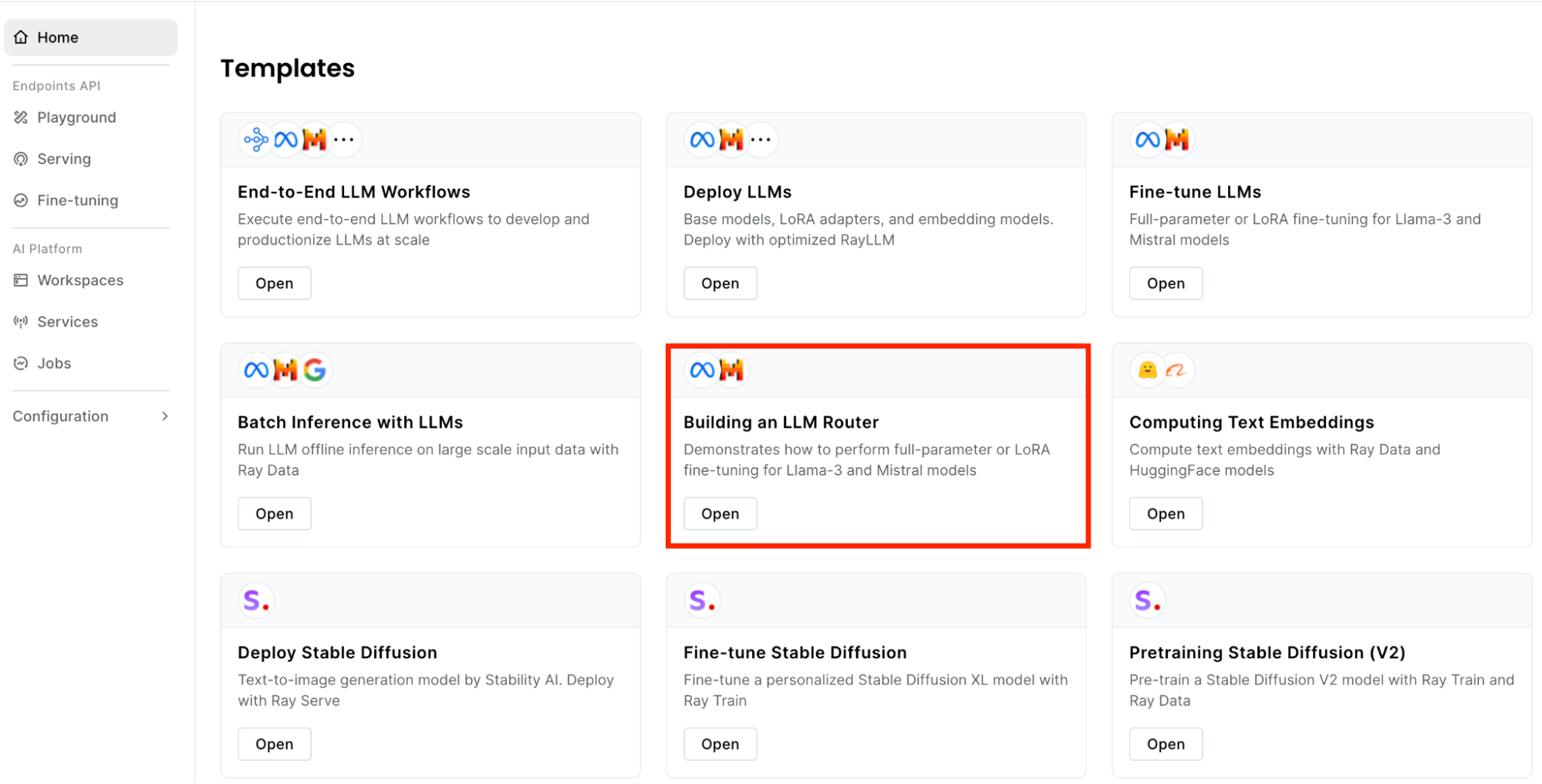

The LLM Router is now available as an Anyscale Workspace Template.

With a single click, users can launch an IDE (VSCode, Jupyter) backed by a scalable Ray Cluster managed by Anyscale, with built-in observability and debugging tools.

Our template simplifies the training and deployment of the LLM Router from a single notebook. Customize the data for your specific applications to achieve top-quality results or use it as is to benefit from the LLM Router's generalizability.

To get started:

1. Click “launch” on the LLM Router Template
2. Follow the instructions
3. (Optional) Update with your own data
4. Train the Router
5. Evaluate the Router
6. Deploy with Anyscale Services

Start saving up to 50% on your LLM applications!