KubeRay v1.3.0: Enhancing Observability, Reliability, and Usability

Special thanks to these KubeRay contributors: Anyscale (Kai-Hsun Chen, Chi-Sheng Liu), Google (Andrew Sy Kim, Aaron Liang, Ryan O'Leary, Spencer Peterson), opensource4you (Jui-An Huang, Jun-Hao Wan), and Ant Group (Yikun Wang).

At Ray Summit 2024, we saw firsthand how organizations are leveraging Ray on Kubernetes to build and scale their next-generation machine learning platforms. For deploying Ray on Kubernetes, KubeRay has become the go-to open-source solution, simplifying the lifecycle management of Ray clusters and applications. As production deployments of Ray with KubeRay become more prevalent, observability and reliability become critical considerations, and usability matters more and more when managing Ray clusters at scale. We're excited to announce that KubeRay v1.3 directly addresses these challenges with a series of key enhancements, with over 300 commits authored by 35 contributors.

Observability

RayCluster Conditions API (Beta)

To improve RayCluster observability, we introduced the RayCluster Conditions API (Alpha) in v1.2.0. Now, it has reached Beta in v1.3.0 and is enabled by default. Users can now observe the following conditions in RayCluster custom resources:

| Condition Name | Condition Behavior |
| --- | --- |
| RayClusterProvisioned | Once all the Pods in the cluster are ready, this condition is set to True and will no longer change. |
| HeadPodReady | It indicates whether the head Pod is ready to receive requests. |
| | It is set to True when a Pod fails to be created or deleted. |

The .Status.State field still works but is marked as deprecated. We encourage all users to use the new .Status.Conditions API for observability instead.

```sh
# Install KubeRay operator
$ helm repo add kuberay https://ray-project.github.io/kuberay-helm/
$ helm repo update
$ helm install kuberay-operator kuberay/kuberay-operator --version 1.3.0

# Install RayCluster
$ helm install raycluster kuberay/ray-cluster --version 1.3.0

# Check conditions
$ kubectl describe raycluster raycluster-kuberay

# [Example output]:
Status:
  Conditions:
    Last Transition Time:  2025-02-12T01:38:40Z
    Message:
    Reason:                HeadPodRunningAndReady
    Status:                True
    Type:                  HeadPodReady
    Last Transition Time:  2025-02-12T01:38:40Z
    Message:               All Ray Pods are ready for the first time
    Reason:                AllPodRunningAndReadyFirstTime
    Status:                True
    Type:                  RayClusterProvisioned
```
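For scripts that need to act on these conditions, the following is a minimal Python sketch rather than an official client. It assumes the RayCluster status has already been loaded into a dictionary (for example, by parsing the JSON form of the custom resource) and that conditions use the standard Kubernetes field names `type`, `status`, `reason`, and `message`. The helper names `find_condition` and `condition_is_true` are illustrative and not part of KubeRay.

```python
from typing import Optional

# Sample status mirroring the example output above. In practice this would be
# the `.status` field of a RayCluster object (assumed shape, not fetched here).
SAMPLE_STATUS = {
    "conditions": [
        {
            "lastTransitionTime": "2025-02-12T01:38:40Z",
            "message": "",
            "reason": "HeadPodRunningAndReady",
            "status": "True",
            "type": "HeadPodReady",
        },
        {
            "lastTransitionTime": "2025-02-12T01:38:40Z",
            "message": "All Ray Pods are ready for the first time",
            "reason": "AllPodRunningAndReadyFirstTime",
            "status": "True",
            "type": "RayClusterProvisioned",
        },
    ]
}


def find_condition(status: dict, cond_type: str) -> Optional[dict]:
    """Return the condition with the given type, or None if it is absent."""
    for cond in status.get("conditions", []):
        if cond.get("type") == cond_type:
            return cond
    return None


def condition_is_true(status: dict, cond_type: str) -> bool:
    """A condition counts as true only if it exists and its status is "True"."""
    cond = find_condition(status, cond_type)
    return cond is not None and cond.get("status") == "True"
```

Treating a missing condition as false keeps the check conservative: a cluster that has not yet reported `HeadPodReady` is handled the same way as one whose head Pod is not ready.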

RayService Status API

The RayService controller underwent a refactoring in KubeRay v1.3.0, involving over 40 commits. This restructuring simplifies handling edge cases, as well as testing and maintenance. For further information, please refer to kuberay/2548.

First, we introduced the RayService Conditions API for improved observability. It includes two conditions: Ready and UpgradeInProgress.

Ready: If Ready is true, the RayService is ready to serve requests.

UpgradeInProgress: If UpgradeInProgress is true, the RayService is currently in the upgrade process and both an active and a pending RayCluster exist.

Second, we removed all possible values of RayService.Status.ServiceStatus except Running, so the only valid values are Running or empty. If ServiceStatus is Running, it indicates that RayService is ready to serve requests. In other words, ServiceStatus is equivalent to the Ready condition. We strongly recommend using the Ready condition instead of ServiceStatus.

Third, we introduced the Kubernetes Events API in the RayService controller. It creates a Kubernetes event for every interaction between the KubeRay operator and the Kubernetes API server, such as creating a Kubernetes service, updating a RayCluster, and deleting a RayCluster. Additionally, creating and updating Ray Serve applications will also trigger Kubernetes events.
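The relationship between the Ready condition and the legacy ServiceStatus field described above can be sketched in Python as follows. This is an illustration under assumptions: the status is taken to be a dictionary already parsed from the RayService custom resource, and the legacy field is assumed to be serialized as `serviceStatus`. The function names are hypothetical.

```python
def rayservice_is_ready(status: dict) -> bool:
    """Prefer the Ready condition; fall back to the legacy ServiceStatus."""
    for cond in status.get("conditions", []):
        if cond.get("type") == "Ready":
            return cond.get("status") == "True"
    # Assumption: the legacy field appears as `serviceStatus` in the JSON form.
    # Its only valid values are "Running" or empty.
    return status.get("serviceStatus") == "Running"


def rayservice_is_upgrading(status: dict) -> bool:
    """True while both an active and a pending RayCluster exist."""
    return any(
        cond.get("type") == "UpgradeInProgress" and cond.get("status") == "True"
        for cond in status.get("conditions", [])
    )
```

Checking the condition first follows the recommendation to rely on Ready rather than ServiceStatus; the fallback only matters for objects that do not report conditions.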

```sh
# Install KubeRay operator
$ helm repo add kuberay https://ray-project.github.io/kuberay-helm/
$ helm install kuberay-operator kuberay/kuberay-operator --version 1.3.0

# Create a RayService CR
$ kubectl apply -f https://raw.githubusercontent.com/ray-project/kuberay/release-1.3/ray-operator/config/samples/ray-service.sample.yaml

# Check RayService conditions
$ kubectl describe rayservices.ray.io rayservice-sample

# [Example output]
  Conditions:
    Last Transition Time:  2025-02-08T06:45:20Z
    Message:               Number of serve endpoints is greater than 0
    Observed Generation:   1
    Reason:                NonZeroServeEndpoints
    Status:                True
    Type:                  Ready
    Last Transition Time:  2025-02-08T06:44:28Z
    Message:               Active Ray cluster exists and no pending Ray cluster
    Observed Generation:   1
    Reason:                NoPendingCluster
    Status:                False
    Type:                  UpgradeInProgress
```

Multi-tenant Observability

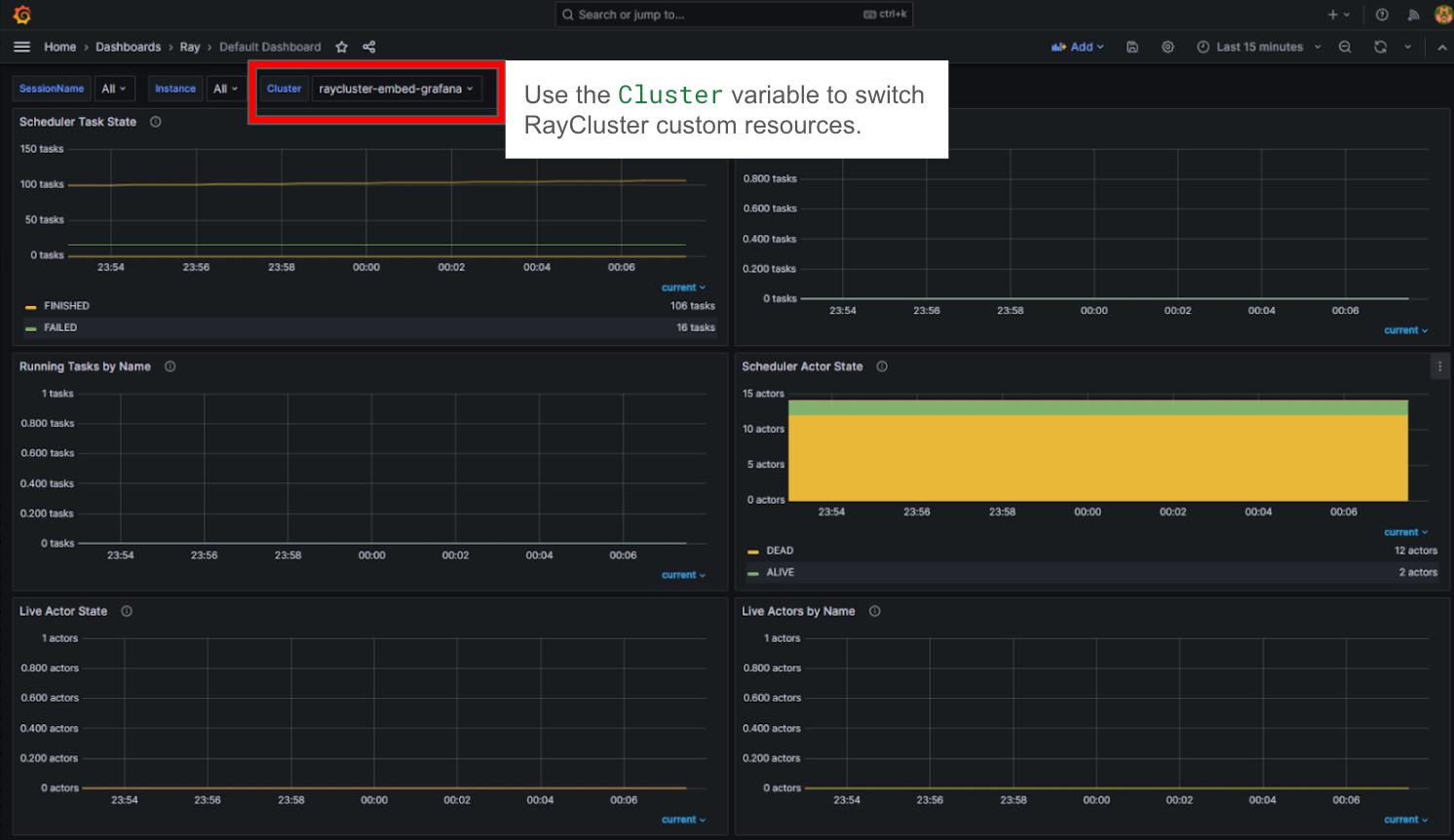

The KubeRay community upstreamed changes to Ray so that, when a single Grafana dashboard is shared by multiple RayCluster custom resources, users can switch between them and view the metrics of each cluster. See this document for more details.

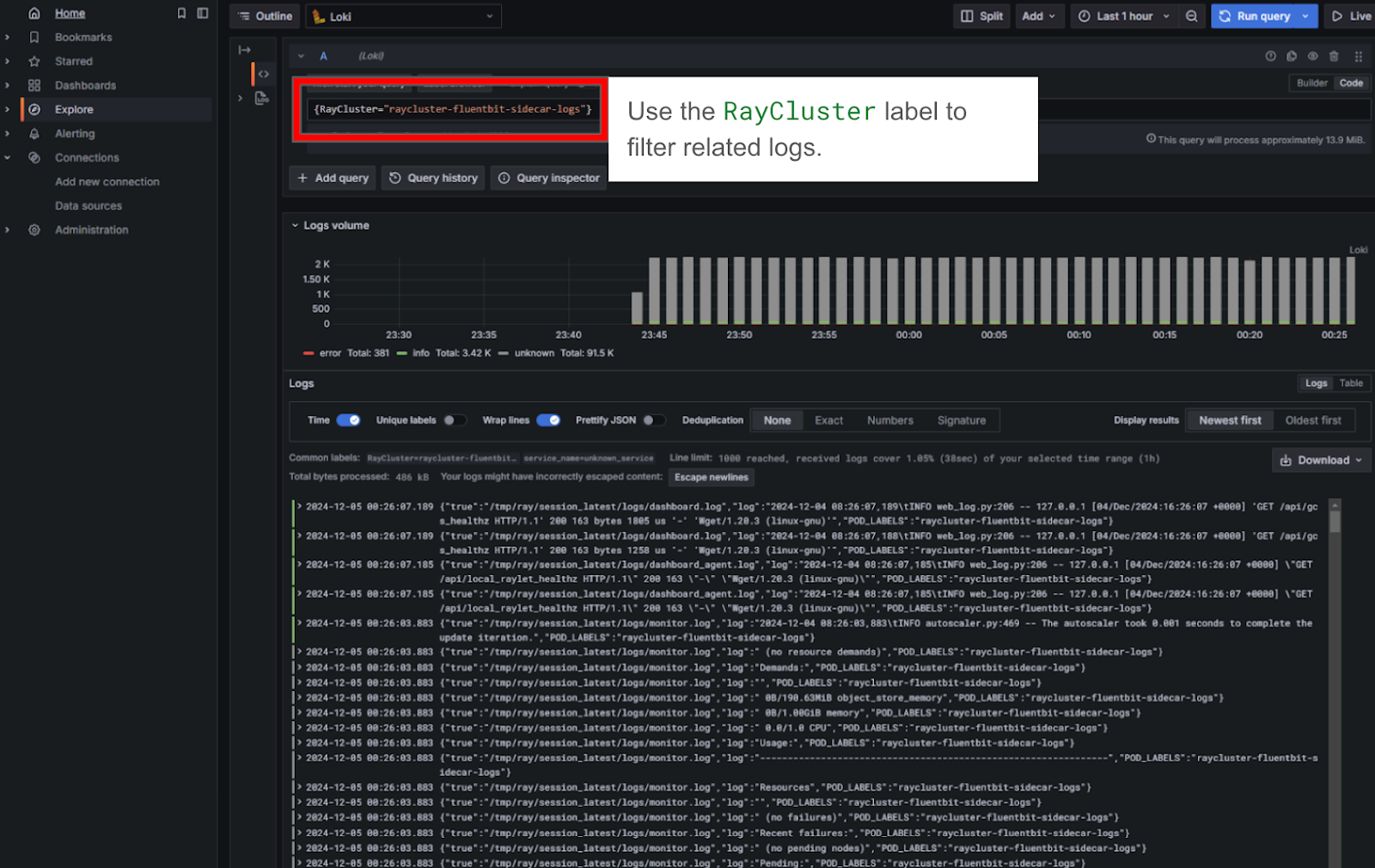

The Ray logging guide currently includes manifests that add the RayCluster custom resource name as a log label, allowing users to filter logs belonging to a specific RayCluster based on the label.

Reliability

RayJob: Network Transient Failure Recovery

For long-running RayJobs, transient network failures are not uncommon, yet before v1.3.0 a single one could be fatal. By default, a RayJob uses a submitter Pod to submit the user's Ray application to a RayCluster and to stream its logs back over a WebSocket connection. If the connection between the submitter and the head Pod drops, the submitter Pod fails and the underlying Kubernetes Job creates a new submitter Pod to submit the Ray application again. That retry fails because Ray does not allow two Ray jobs with the same submission ID, so the RayJob is marked as failed once the Kubernetes Job reaches its backoffLimit, even if the Ray application is still running in the RayCluster.

In KubeRay v1.3.0, we made the RayJob submitter tolerant of transient network failures: after the submitter Pod restarts, it attempts to resume output streaming for the Ray application that is already running. See kuberay/2579 for more details.
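The idea can be illustrated with a small, self-contained Python simulation. This is not the KubeRay submitter: `FakeRayCluster` and `run_submitter` are hypothetical stand-ins that only model the "same submission ID is rejected" rule and the "resume streaming instead of resubmitting" behavior described above.

```python
from typing import Dict, Tuple


class FakeRayCluster:
    """In-memory stand-in for a RayCluster's job API (hypothetical)."""

    def __init__(self) -> None:
        self.jobs: Dict[str, str] = {}

    def submit_job(self, submission_id: str, entrypoint: str) -> None:
        # Models Ray rejecting a second job with the same submission ID.
        if submission_id in self.jobs:
            raise ValueError(f"job {submission_id!r} already exists")
        self.jobs[submission_id] = entrypoint

    def job_exists(self, submission_id: str) -> bool:
        return submission_id in self.jobs

    def tail_logs(self, submission_id: str) -> str:
        return f"streaming logs for {submission_id}"


def run_submitter(
    cluster: FakeRayCluster, submission_id: str, entrypoint: str
) -> Tuple[str, str]:
    """Submit the job once; on later runs, only resume log streaming."""
    if cluster.job_exists(submission_id):
        action = "resumed"
    else:
        cluster.submit_job(submission_id, entrypoint)
        action = "submitted"
    return action, cluster.tail_logs(submission_id)
```

Calling `run_submitter` twice with the same submission ID models a submitter Pod restart: the first call submits the job, and the second call skips submission and goes straight to streaming, so the retry no longer trips over the duplicate ID.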

Towards Ray Autoscaler V2

The Ray Autoscaler V2 (currently in alpha) provides significantly improved stability and observability over the previous version. During the KubeRay v1.3 development cycle, we landed 20 commits dedicated to bug fixes, refactoring, and new features such as custom idle timeouts and support for autoscaling TPU v6e worker groups. Our goal for KubeRay v1.4 is to promote the Ray Autoscaler V2 to Beta and enable it by default for autoscaled Ray clusters. In the meantime, we encourage users to experiment with the Ray Autoscaler V2 and share feedback. See Autoscaler V2 with KubeRay to get started.

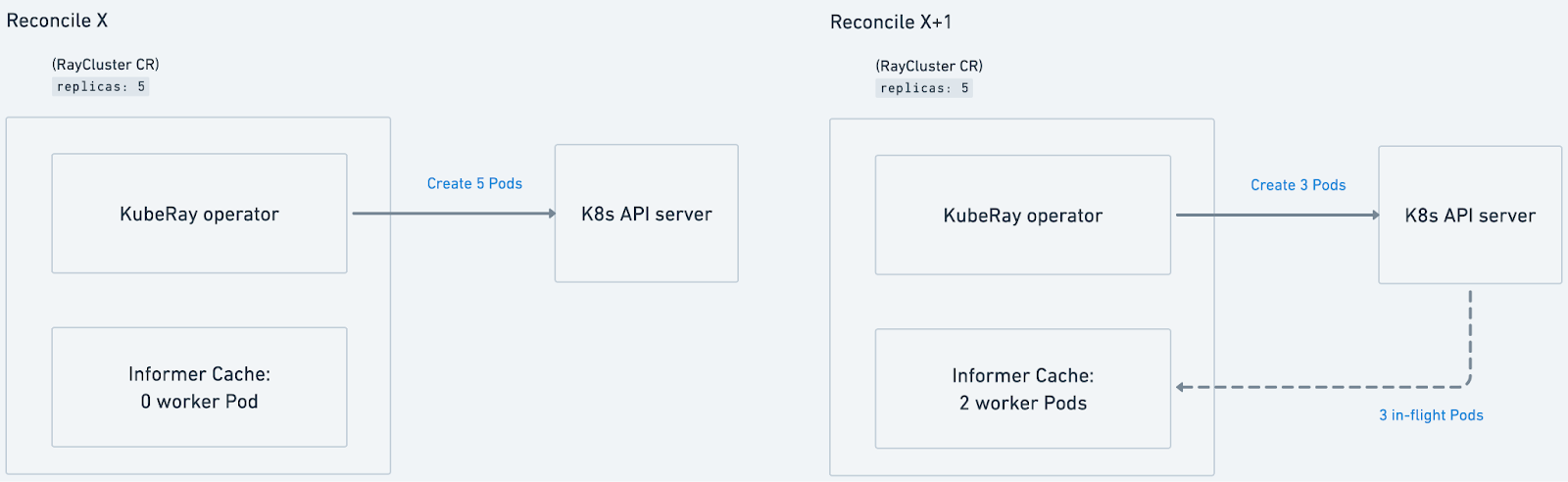

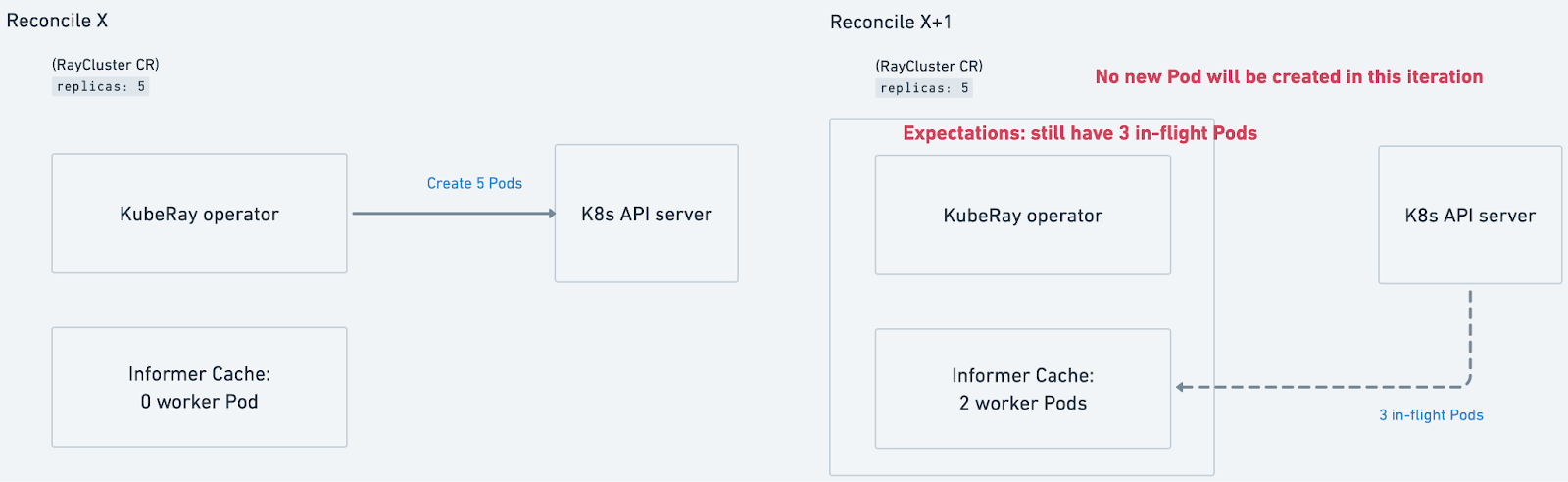

Controller Expectations: Resolving Stale Informer Cache Issues

The inconsistency between the Kubernetes API server and the informer cache may cause KubeRay to create more Pods than expected. For example, when KubeRay reconciles a RayCluster, it may create five Pods during a reconciliation cycle. If KubeRay reconciles the RayCluster again before the local informer cache is up-to-date and only two new Pods are recorded in the informer cache, it will mistakenly create three additional Pods. See the following figure for more details about the stale informer cache issue.

In KubeRay v1.3.0, we introduced controller expectations to track in-flight Pod creation and deletion requests, preventing the creation of redundant Pods. See kuberay/2150 for more details.
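To make the mechanism concrete, here is a simplified Python model of the expectations pattern applied to Pod creation. It is a sketch under assumptions, not the KubeRay implementation (which is written in Go and also tracks deletions); the `PodExpectations` class and `reconcile` function are hypothetical names.

```python
from typing import Dict


class PodExpectations:
    """Tracks Pod creations that were requested but not yet seen in the cache."""

    def __init__(self) -> None:
        self._pending: Dict[str, int] = {}

    def expect_creations(self, key: str, count: int) -> None:
        self._pending[key] = self._pending.get(key, 0) + count

    def creation_observed(self, key: str) -> None:
        # Called when the informer cache reports a newly created Pod.
        if self._pending.get(key, 0) > 0:
            self._pending[key] -= 1

    def satisfied(self, key: str) -> bool:
        return self._pending.get(key, 0) == 0


def reconcile(key: str, desired: int, cached_pods: int, exp: PodExpectations) -> int:
    """Return how many Pods to create in this reconciliation cycle."""
    if not exp.satisfied(key):
        # The cache is known to be stale: skip instead of over-creating.
        return 0
    missing = max(desired - cached_pods, 0)
    if missing:
        exp.expect_creations(key, missing)
    return missing
```

In the scenario from the text, the first cycle sees zero Pods and creates five. If the cache then shows only two of them, a naive controller would create three more, whereas `reconcile` returns zero until all five creations have been observed.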

CI Improvement

KubeRay v1.3.0 significantly enhances CI. The number of end-to-end tests has doubled from 23 in v1.2.2 to 46 in this release, strengthening test coverage and stability.

RayCluster now undergoes more rigorous testing, including a GCS fault tolerance test to ensure detached actors function correctly and environment variables are properly populated. The test suite has also been expanded to cover RayCluster suspension and verify that it is correctly managed by either the KubeRay operator or Kueue.

RayService now has dedicated end-to-end tests to validate its stability and reliability. High-availability testing has been introduced, covering scenarios with and without autoscaler enabled. The test suite also includes a zero-downtime upgrade case and a GCS fault tolerance enabled case. Additional tests verify in-place updates, redeployments, and resilience against head pod failures during upgrades.

Autoscaler testing has been expanded beyond autoscaler v1, now including comprehensive coverage for autoscaler v2. New test cases address critical corner scenarios, such as handling updates to minimum replica counts.

The sample YAML tests have been rewritten in Go, eliminating the need for awkward Python-based interactions with the Kubernetes cluster.

Usability

Ray Kubectl Plugin (Beta)

The Ray Kubectl plugin is graduating to Beta status. The following commands are supported in KubeRay v1.3.0:

| Command | Description |
| --- | --- |
| | Download Ray logs from the cluster to a local directory. |
| | Initiate a port-forwarding session to the Ray head Pod. |
| | Create a RayCluster. |
| kubectl ray job submit | Launch a RayJob in a Kubernetes cluster using local Ray scripts. |
| | Create a new worker group for an existing RayCluster. |

In this blog, we will focus on the kubectl ray job submit command. For other commands, please see the Ray Kubectl Plugin documentation for more details. See our recently published blog about the kubectl plugin.

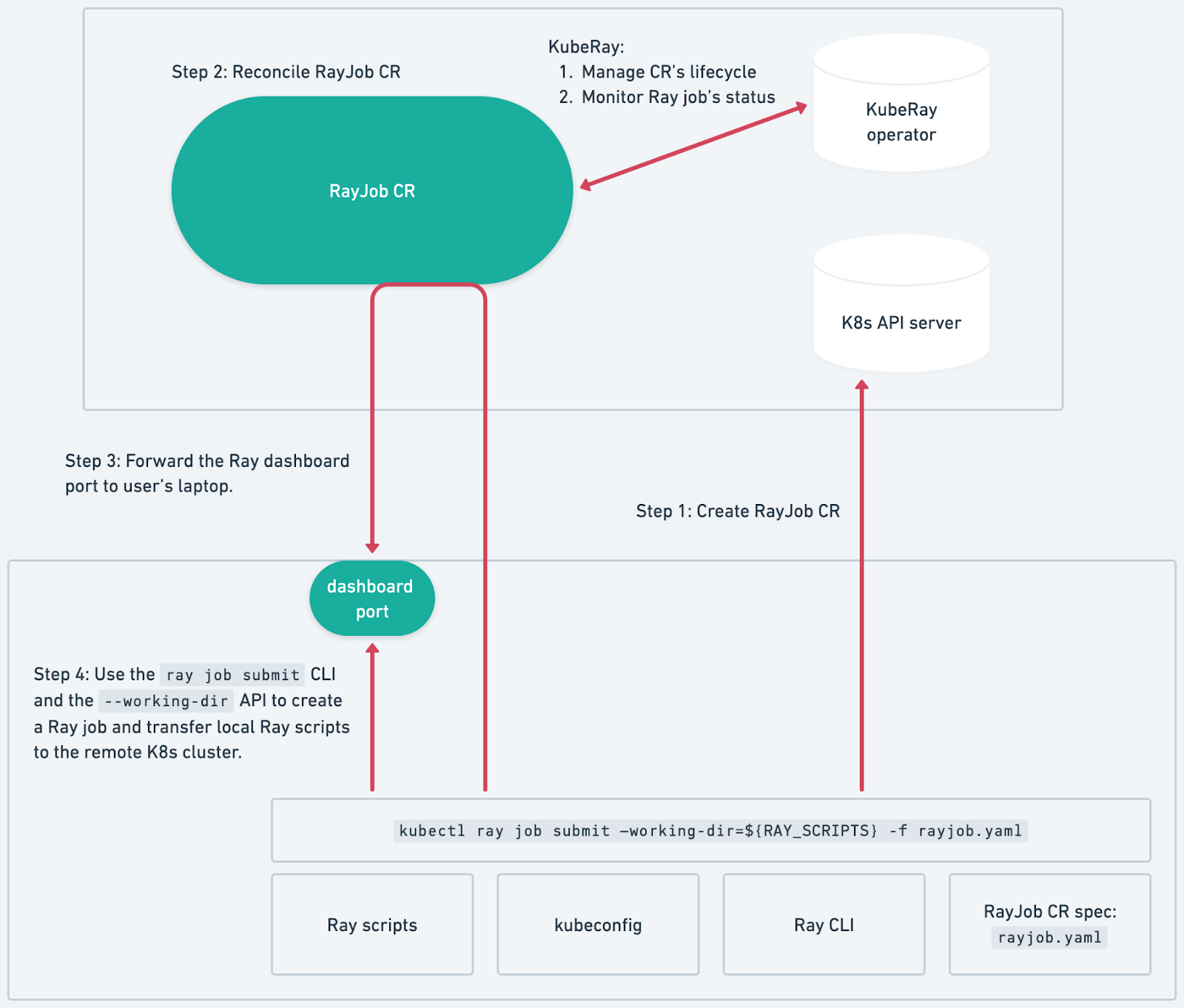

The kubectl ray job submit command allows users to launch a RayJob custom resource in a Kubernetes cluster to run their local Python scripts with a single command, enabling them to develop locally while running Ray applications in a remote Kubernetes cluster. The following figure shows the workflow behind this feature:

See the following example to understand the user journey of kubectl ray job submit.

```sh
# Install KubeRay kubectl plugin
# Method 1: Using the Krew kubectl plugin manager (Recommended)
# See https://krew.sigs.k8s.io/docs/user-guide/setup/install/ for details
$ kubectl krew update
$ kubectl krew install ray
# Method 2: Download from GitHub release https://github.com/ray-project/kuberay/releases
# Extract the kubectl-ray binary and put it in your PATH

# Verify the installation
$ kubectl ray --help

# Create a Kind cluster
$ kind create cluster --image=kindest/node:v1.26.0

# Install KubeRay operator
$ helm repo add kuberay https://ray-project.github.io/kuberay-helm/
$ helm install kuberay-operator kuberay/kuberay-operator --version 1.3.0

# Download the example Ray script
$ mkdir working_dir
$ cd working_dir
$ wget https://gist.githubusercontent.com/kevin85421/0d0f2bc0c875507f0dbfb083cab19d2c/raw/1362dabee946bebb0e045acb9d641c52bef4513a/example.py

# Run the example.py in the Kind cluster
$ kubectl ray job submit --name rayjob-sample --working-dir . -- python example.py

# [Example output]
...
2025-01-06 11:53:34,806 INFO worker.py:1634 -- Connecting to existing Ray cluster at address: 10.12.0.9:6379...
2025-01-06 11:53:34,814 INFO worker.py:1810 -- Connected to Ray cluster. View the dashboard at 10.12.0.9:8265
[0, 1, 4, 9]
2025-01-06 11:53:38,368 SUCC cli.py:63 -- ------------------------------------------
2025-01-06 11:53:38,368 SUCC cli.py:64 -- Job 'raysubmit_9NfCvwcmcyMNFCvX' succeeded
2025-01-06 11:53:38,368 SUCC cli.py:65 -- ------------------------------------------
```

GCS Fault Tolerance API

GCS fault tolerance is a required feature to achieve high availability in RayService for online serving. See the KubeRay GCS fault tolerance guide and the RayService high availability guide for more details.

Prior to KubeRay v1.3.0, configuring GCS fault tolerance was cumbersome and error-prone. Users needed to add annotations to the custom resource, set redis-password in rayStartParams, and add environment variables to the head Pod (such as RAY_REDIS_ADDRESS for the Redis address and REDIS_PASSWORD for the Redis password). Take ray-cluster.deprecate-gcs-ft.yaml as an example: users must configure up to five different places to set up GCS fault tolerance correctly for the RayCluster.

```yaml
apiVersion: ray.io/v1
kind: RayCluster
metadata:
  annotations:
    ray.io/ft-enabled: "true" # <---- (1)
    # ray.io/external-storage-namespace: "my-raycluster-storage" <---- (2)
  name: raycluster-external-redis
spec:
  ...
  headGroupSpec:
    rayStartParams:
      redis-password: $REDIS_PASSWORD # <---- (3)
    template:
      spec:
        containers:
        - name: ray-head
          ...
          env:
          - name: RAY_REDIS_ADDRESS # <---- (4)
            value: redis:6379
          - name: REDIS_PASSWORD # <---- (5)
            valueFrom:
              secretKeyRef:
                name: redis-password-secret
                key: password
...
```

In addition, the original API is prone to errors. For example, in ray-project/kuberay#2694, a user configured GCS fault tolerance incorrectly. As a result, the RayCluster enabled GCS fault tolerance and wrote data to the external Redis, but KubeRay was unaware of this. Hence, multiple Ray clusters wrote and read metadata under the same Redis key, causing two independent clusters to interfere with each other.

To improve the UX, KubeRay v1.3.0 consolidates all GCS fault tolerance-related configurations into a single place: gcsFaultToleranceOptions. This allows users to configure the feature more easily. Take ray-cluster.external-redis.yaml as an example; users only need to configure GCS fault tolerance in a single place.

```yaml
apiVersion: ray.io/v1
kind: RayCluster
metadata:
  name: raycluster-external-redis
spec:
  gcsFaultToleranceOptions:
    redisAddress: "redis:6379"
    redisPassword:
      valueFrom:
        secretKeyRef:
          name: redis-password-secret
          key: password
  headGroupSpec:
    ...
```

To prevent misconfiguration of GCS fault tolerance, KubeRay v1.3.0 introduces multiple validations for RayCluster. The KubeRay operator creates a Kubernetes event for the RayCluster if it is misconfigured.

```sh
# After deploying an invalid RayCluster, inspect its events
$ kubectl describe raycluster raycluster-invalid

# [Example output]
Events:
  Type     Reason                 Age   From                   Message
  ----     ------                 ----  ----                   -------
  Warning  InvalidRayClusterSpec  11s   raycluster-controller  The RayCluster spec is invalid default/raycluster-invalid: RAY_REDIS_ADDRESS is set which implicitly enables GCS fault tolerance, but GcsFaultToleranceOptions is not set. Please set GcsFaultToleranceOptions to enable GCS fault tolerance
```
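The check behind the event above can be approximated offline, for example in a pre-submit lint step. The following Python sketch mirrors only that one rule and is not the operator's validation code. It assumes the RayCluster manifest has already been parsed into a dictionary, and `validate_gcs_ft` is a hypothetical helper name.

```python
from typing import List


def validate_gcs_ft(raycluster: dict) -> List[str]:
    """Flag RAY_REDIS_ADDRESS on the head Pod without gcsFaultToleranceOptions."""
    spec = raycluster.get("spec", {})
    has_options = "gcsFaultToleranceOptions" in spec
    pod_spec = spec.get("headGroupSpec", {}).get("template", {}).get("spec", {})

    errors: List[str] = []
    for container in pod_spec.get("containers", []):
        for env in container.get("env", []):
            if env.get("name") == "RAY_REDIS_ADDRESS" and not has_options:
                errors.append(
                    "RAY_REDIS_ADDRESS is set, which implicitly enables GCS "
                    "fault tolerance, but gcsFaultToleranceOptions is not set"
                )
    return errors
```

A manifest that sets `RAY_REDIS_ADDRESS` in the head container but omits `gcsFaultToleranceOptions` yields one error, while a manifest that uses only `gcsFaultToleranceOptions` yields an empty list.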

Getting Started with KubeRay v1.3.0

KubeRay v1.3.0 addresses the challenges of scaling Ray in production, incorporating valuable feedback from the Ray community. Beyond these improvements, we've also introduced new guides and tutorials for Ray on Kubernetes. These include Configure Ray clusters with authentication and access control using Kubernetes RBAC, Tuning Redis for a Persistent Fault Tolerant GCS, and strategies for reducing image pull latency. New to running Ray on Kubernetes? Get started with our guide: Getting Started with KubeRay. Existing KubeRay users can find upgrade instructions in the KubeRay upgrade guide.

Looking ahead, we're actively collaborating with the Ray community to shape the roadmap for KubeRay v1.4. We encourage you to share your feedback for KubeRay v1.4 on this GitHub issue.