Hyperparameter Search with Hugging Face Transformers

With cutting-edge research implementations and thousands of trained models easily accessible, the Hugging Face Transformers library has become critical to the success and growth of natural language processing today.

For any machine learning model to achieve good performance, users often need to implement some form of hyperparameter tuning. Yet nearly everyone (1, 2) either ends up disregarding hyperparameter tuning or opts for a simplistic grid search over a small search space.

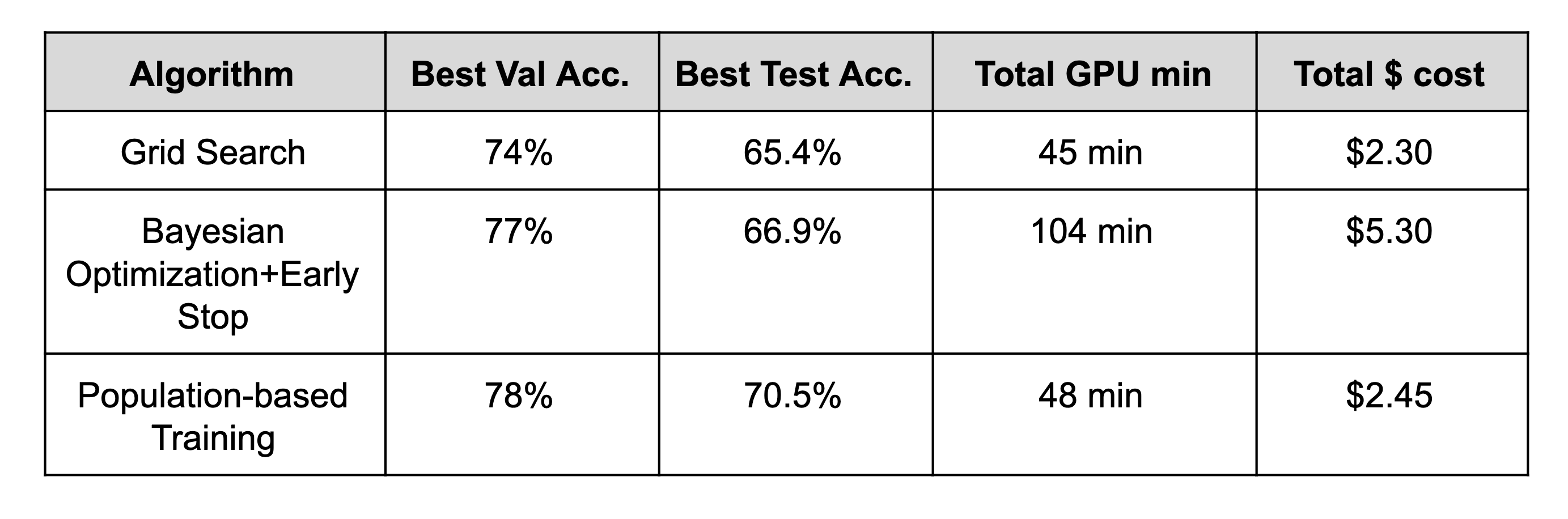

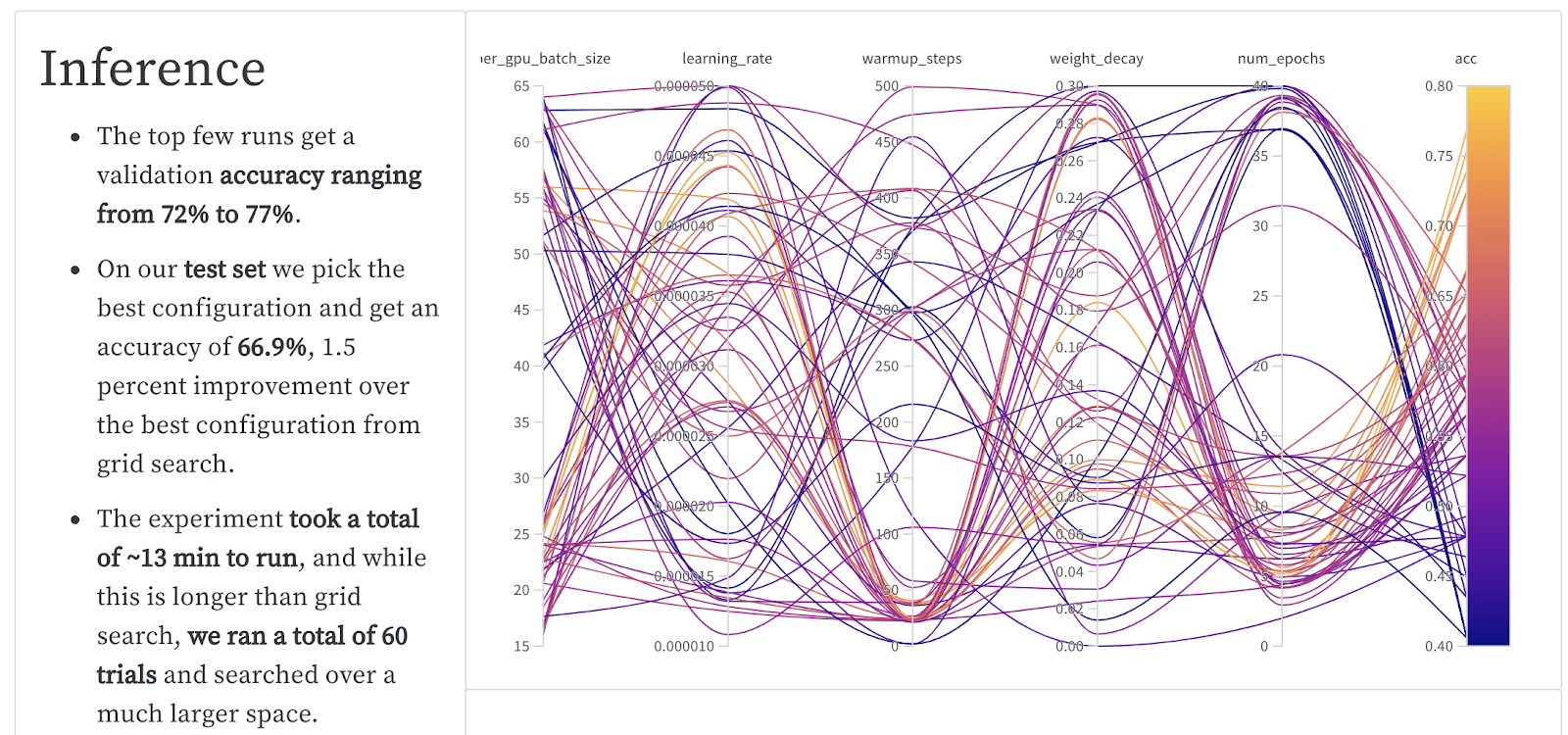

However, even simple experiments show the benefit of using an advanced tuning technique. Below is a recent experiment run on a BERT model from Hugging Face Transformers on the RTE dataset. Evolutionary optimization techniques like Population-Based Training (PBT) can provide large performance improvements compared to standard hyperparameter optimization techniques.

If you're using Transformers, you'll want an easy way to access powerful hyperparameter tuning solutions without giving up the customizability of the Transformers framework.

In the Transformers 3.1 release, Hugging Face Transformers and Ray Tune teamed up to provide a simple yet powerful integration. Ray Tune is a popular Python library for hyperparameter tuning that provides many state-of-the-art algorithms out of the box, along with integrations with best-in-class tooling such as Weights and Biases and TensorBoard.

To demonstrate this new Hugging Face + Ray Tune integration, we use the Hugging Face Datasets library to fine-tune DistilBERT, a smaller BERT variant, on the MRPC paraphrase dataset.

To run this example, please first run:

```bash
pip install "ray[tune]" transformers datasets scipy scikit-learn torch
```

Plugging in one of Ray's standard tuning algorithms takes only a few extra lines of code:

```python
from datasets import load_dataset, load_metric
from transformers import (AutoModelForSequenceClassification, AutoTokenizer,
                          Trainer, TrainingArguments)

tokenizer = AutoTokenizer.from_pretrained('distilbert-base-uncased')
dataset = load_dataset('glue', 'mrpc')
metric = load_metric('glue', 'mrpc')

def encode(examples):
    outputs = tokenizer(
        examples['sentence1'], examples['sentence2'], truncation=True)
    return outputs

encoded_dataset = dataset.map(encode, batched=True)

def model_init():
    return AutoModelForSequenceClassification.from_pretrained(
        'distilbert-base-uncased', return_dict=True)

def compute_metrics(eval_pred):
    predictions, labels = eval_pred
    predictions = predictions.argmax(axis=-1)
    return metric.compute(predictions=predictions, references=labels)

# Evaluate during training and a bit more often
# than the default to be able to prune bad trials early.
# Disabling tqdm is a matter of preference.
training_args = TrainingArguments(
    "test", evaluation_strategy="steps", eval_steps=500, disable_tqdm=True)
trainer = Trainer(
    args=training_args,
    tokenizer=tokenizer,
    train_dataset=encoded_dataset["train"],
    eval_dataset=encoded_dataset["validation"],
    model_init=model_init,
    compute_metrics=compute_metrics,
)

# Default objective is the sum of all metrics
# when metrics are provided, so we have to maximize it.
trainer.hyperparameter_search(
    direction="maximize",
    backend="ray",
    n_trials=10  # number of trials
)
```
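The comment at the end of the example notes that the default objective is the sum of all metrics. As a rough illustration of what that means, here is a minimal pure-Python sketch of such an objective, next to a custom one that looks at accuracy only. The function names are hypothetical, and the metric keys (`eval_accuracy`, `eval_f1`) are an assumption about what the GLUE MRPC metric reports once the `Trainer` adds its `eval_` prefix; treat this as a sketch of the idea, not the library's exact code.

```python
import copy


def sum_of_metrics_objective(metrics):
    """Sketch: sum the task metrics, or fall back to the loss if there are none."""
    metrics = copy.deepcopy(metrics)  # do not mutate the caller's dict
    loss = metrics.pop("eval_loss", None)
    metrics.pop("epoch", None)
    # Drop bookkeeping entries (timing/throughput) so only task metrics remain.
    for key in [k for k in metrics if k.endswith("_runtime") or k.endswith("_per_second")]:
        metrics.pop(key)
    return loss if not metrics else sum(metrics.values())


def accuracy_only_objective(metrics):
    """Hypothetical custom objective: ignore F1 and optimize accuracy alone."""
    return metrics["eval_accuracy"]


# Made-up evaluation output, for illustration only.
example = {"eval_loss": 0.41, "eval_accuracy": 0.85, "eval_f1": 0.89, "epoch": 3.0}
print(sum_of_metrics_objective(example))   # accuracy + F1
print(accuracy_only_objective(example))    # accuracy only
```

A custom objective like this can be handed to the search through the `compute_objective` argument, for example `trainer.hyperparameter_search(direction="maximize", backend="ray", compute_objective=accuracy_only_objective)`.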

By default, each trial will utilize 1 CPU, and optionally 1 GPU if available. You can leverage multiple GPUs for a parallel hyperparameter search by passing in a `resources_per_trial` argument.

You can also easily swap in different hyperparameter tuning algorithms, such as HyperBand, Bayesian Optimization, or Population-Based Training:

To run this example, first run: `pip install hyperopt`
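As a sketch of how `resources_per_trial` might be filled in, the snippet below splits a machine's CPUs and GPUs evenly across the number of trials you want running at once. The helper `per_trial_resources` and the machine size (8 CPUs, 4 GPUs) are hypothetical; only the `resources_per_trial` argument itself comes from the integration described here.

```python
def per_trial_resources(total_cpus, total_gpus, concurrent_trials):
    """Split CPUs and GPUs evenly across trials that should run at the same time."""
    if concurrent_trials < 1:
        raise ValueError("concurrent_trials must be at least 1")
    return {
        "cpu": max(1, total_cpus // concurrent_trials),  # every trial needs a CPU
        "gpu": total_gpus // concurrent_trials,
    }


# Hypothetical machine: 8 CPU cores and 4 GPUs, with 4 trials running in parallel.
resources = per_trial_resources(total_cpus=8, total_gpus=4, concurrent_trials=4)

search_kwargs = dict(
    direction="maximize",
    backend="ray",
    n_trials=10,
    resources_per_trial=resources,  # forwarded to Ray Tune
)
print(search_kwargs["resources_per_trial"])  # {'cpu': 2, 'gpu': 1}
```

With a `trainer` set up as in the example above, the search would then be started with `trainer.hyperparameter_search(**search_kwargs)`. Ray Tune runs as many trials concurrently as the available resources allow, so smaller per-trial requests mean more trials in parallel.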

```python
from ray.tune.suggest.hyperopt import HyperOptSearch
from ray.tune.schedulers import ASHAScheduler

trainer = Trainer(
    args=training_args,
    tokenizer=tokenizer,
    train_dataset=encoded_dataset["train"],
    eval_dataset=encoded_dataset["validation"],
    model_init=model_init,
    compute_metrics=compute_metrics,
)

best_trial = trainer.hyperparameter_search(
    direction="maximize",
    backend="ray",
    # Choose among many libraries:
    # https://docs.ray.io/en/latest/tune/api_docs/suggestion.html
    search_alg=HyperOptSearch(metric="objective", mode="max"),
    # Choose among schedulers:
    # https://docs.ray.io/en/latest/tune/api_docs/schedulers.html
    scheduler=ASHAScheduler(metric="objective", mode="max"))
```

It also works with Weights and Biases out of the box!
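To give a feel for what an early-stopping scheduler such as ASHA does with the trials, here is a toy, pure-Python sketch of synchronous successive halving, the idea that ASHA (the Asynchronous Successive Halving Algorithm) builds on. This is not Ray's implementation: the function names, the scoring function, and the learning-rate range are all made up for illustration.

```python
import math
import random


def successive_halving(configs, evaluate, min_budget=1, max_budget=8, reduction_factor=2):
    """Evaluate all configs on a small budget, keep the best fraction, and repeat."""
    if not configs:
        raise ValueError("need at least one configuration")
    survivors = list(configs)
    budget = min_budget
    rungs = []  # (budget, number of trials evaluated at that budget)
    while True:
        ranked = sorted(survivors, key=lambda cfg: evaluate(cfg, budget), reverse=True)
        rungs.append((budget, len(ranked)))
        if len(ranked) == 1 or budget >= max_budget:
            return ranked[0], rungs
        # Prune the worst trials; the survivors get a larger budget next round.
        survivors = ranked[:max(1, len(ranked) // reduction_factor)]
        budget *= reduction_factor


def toy_score(cfg, budget):
    """Made-up objective: best near a learning rate of 3e-5, improving with budget."""
    distance = abs(math.log10(cfg["learning_rate"]) - math.log10(3e-5))
    return 0.05 * budget - distance


rng = random.Random(0)
configs = [{"learning_rate": 10 ** rng.uniform(-6, -4)} for _ in range(8)]
best, rungs = successive_halving(configs, toy_score)
print(rungs)  # [(1, 8), (2, 4), (4, 2), (8, 1)]
print(best)
```

In this toy run, the eight trials consume 32 budget units in total (8 at each of the four rungs), compared with 64 if every trial were trained to the full budget of 8. That saving is why evaluating more often during training, as in the first example, helps a scheduler prune bad trials early.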

Try it out today:

```bash
pip install -U ray
pip install -U transformers datasets
```

Check out the Hugging Face documentation and Discussion thread

End-to-end example of using Hugging Face hyperparameter search for text classification

If you liked this blog post, be sure to check out:

Our Weights and Biases report on Hyperparameter Optimization for Transformers

The simplest way to serve your NLP model from scratch