Announcing Ray 2.0

Today, we are excited to announce Ray 2.0. With this major release, we take strides towards our goal of making distributed computing scalable, unified, and open.

Towards these goals, Ray 2.0 features new capabilities for unifying the machine learning (ML) ecosystem, improving Ray's production support, and making it easier than ever for ML practitioners to use Ray's libraries.

Unifying the ML Ecosystem

Ray AI Runtime — a scalable and unified toolkit for ML applications*

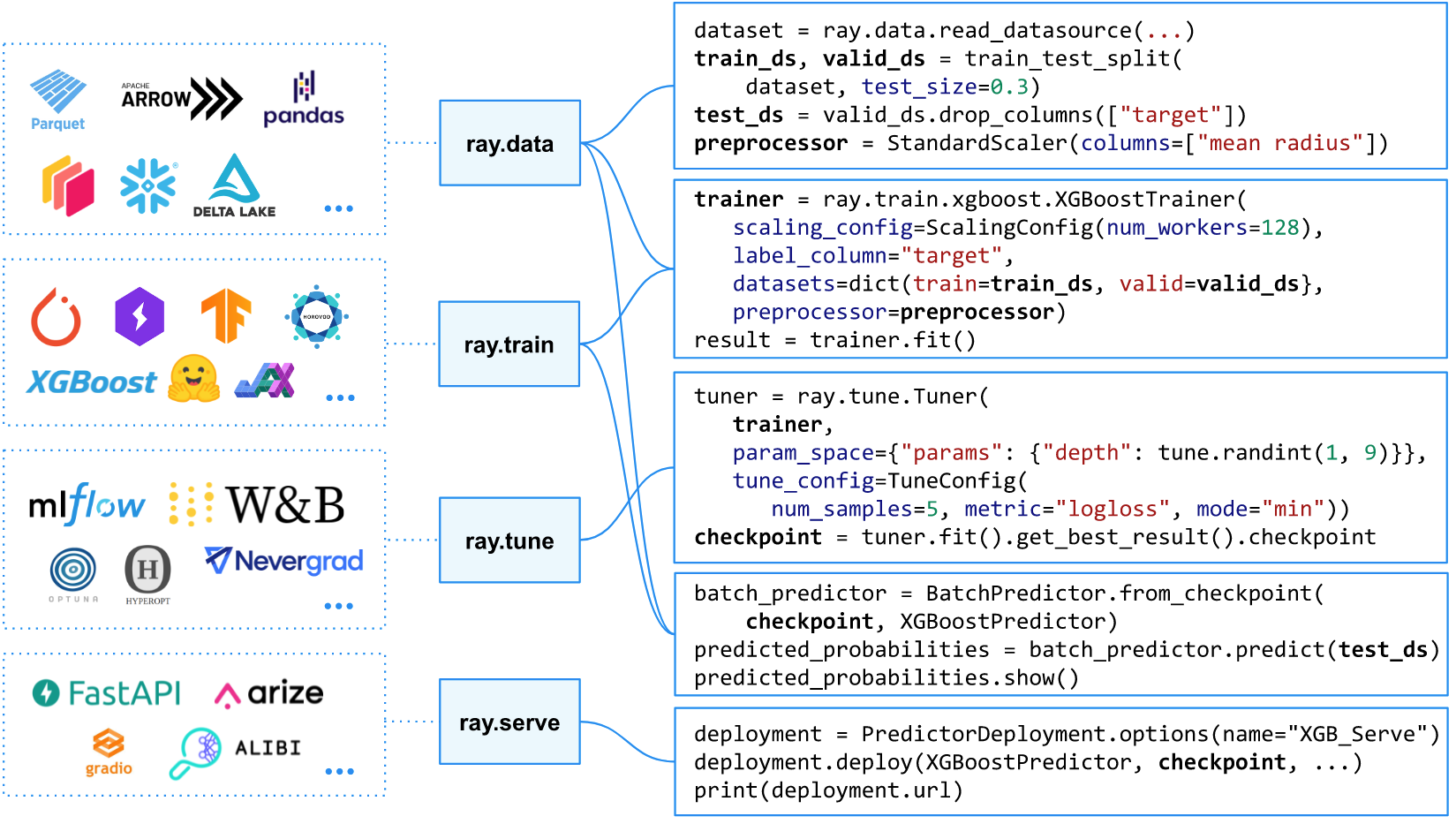

Since its inception, Ray has been used to accelerate and scale compute-heavy machine learning workloads. The Ray AI Runtime (AIR), released in beta, synthesizes the lessons we have learned from talking with community users about their use cases, both large and small. The result of this deliberate effort is a simple, unified toolkit for the Ray community.

With Ray AIR, we're aligning Ray's existing native ML libraries to work seamlessly together and integrate easily with popular ML frameworks in the community. Our goal with Ray AIR is to make it easy to run any kind of ML workload in just a few lines of Python code, letting Ray do the heavy lifting of coordinating computations at scale.

You can learn more about Ray AIR (beta) in the documentation.

Large-Scale Shuffle for ML Workloads

Many of our ML users tell us that if Ray supported shuffling big data, they could simplify their infrastructure by standardizing further on Ray. In Ray 2.0, this is now a reality: the Ray Datasets library can natively shuffle 100 TB or more of data. Check out the docs for more information on how to get started, and refer to our technical paper on how distributed shuffling was implemented in Ray.
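In Ray Datasets the user-facing entry point is `Dataset.random_shuffle()`. As a rough illustration of what any distributed shuffle has to do, the toy, single-process sketch below follows the general two-phase map/reduce pattern: each mapper scatters its rows across output partitions, and each reducer gathers its partition from every mapper and shuffles it locally. It is not Ray's implementation (the technical paper covers that), and the function names `map_partition`, `reduce_partition`, and `two_phase_shuffle` are hypothetical.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def map_partition(block: Sequence[T], num_reducers: int, rng: random.Random) -> List[List[T]]:
    """Map phase (hypothetical helper): send each row of one block to a random partition."""
    parts: List[List[T]] = [[] for _ in range(num_reducers)]
    for row in block:
        parts[rng.randrange(num_reducers)].append(row)
    return parts


def reduce_partition(pieces: List[List[T]], rng: random.Random) -> List[T]:
    """Reduce phase (hypothetical helper): merge one partition from every mapper, then shuffle it."""
    merged = [row for piece in pieces for row in piece]
    rng.shuffle(merged)
    return merged


def two_phase_shuffle(blocks: List[List[T]], num_reducers: int, seed: int = 0) -> List[List[T]]:
    rng = random.Random(seed)
    # In a distributed setting each map call would run as its own task on some node.
    map_outputs = [map_partition(b, num_reducers, rng) for b in blocks]
    # Reducer j receives the j-th partition produced by every mapper.
    return [
        reduce_partition([out[j] for out in map_outputs], rng)
        for j in range(num_reducers)
    ]


blocks = [list(range(i * 5, i * 5 + 5)) for i in range(4)]  # 4 input blocks of 5 rows
shuffled = two_phase_shuffle(blocks, num_reducers=3, seed=42)
print(shuffled)
```

The all-to-all exchange between the two phases is what makes shuffling expensive at 100 TB scale: every mapper produces data for every reducer, so the system has to move and buffer those intermediate partitions efficiently.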

Production and Scale

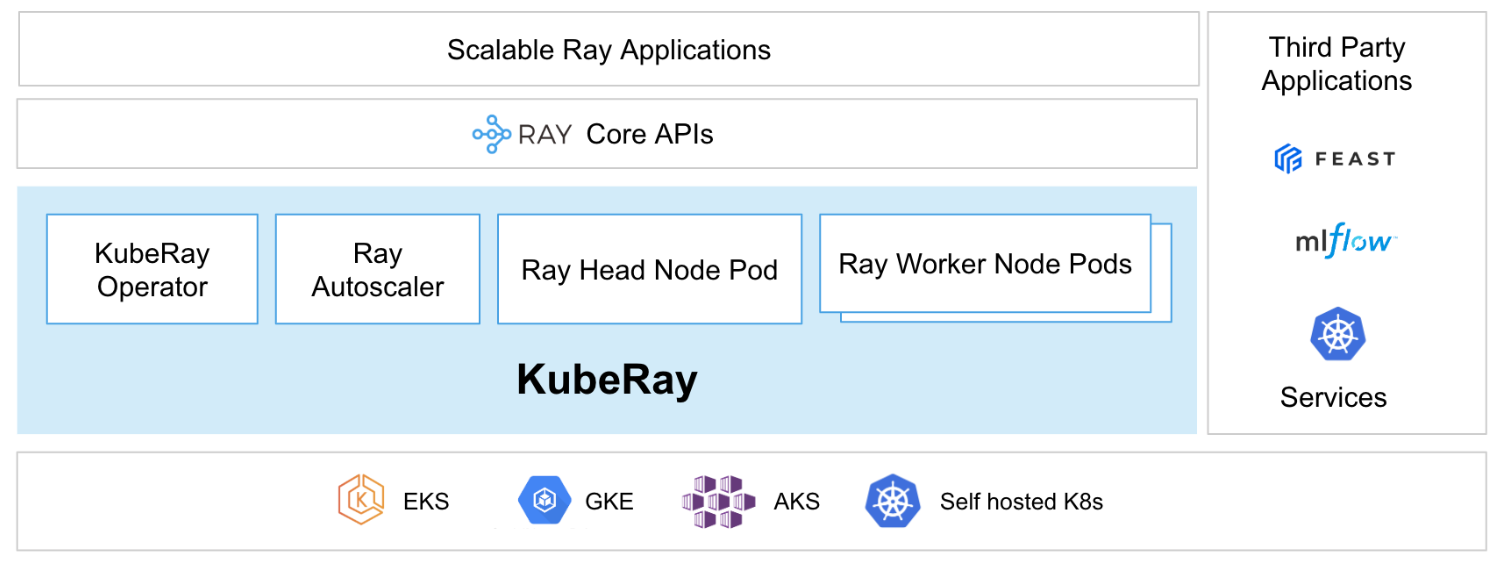

Production support for Kubernetes via KubeRay

Developed in collaboration with ByteDance and Microsoft, KubeRay makes it easier than ever to deploy Ray-based jobs and services on Kubernetes and replaces the legacy Python-based Ray operator. KubeRay, currently in beta, is the officially recommended way of running Ray on K8s. Learn more about KubeRay here.

High-Availability for Large-Scale Ray Serve Deployments

With KubeRay, you can easily deploy Ray Serve clusters in fault-tolerant and high-availability (HA) modes, eliminating the operational work previously needed to set this up. You can deploy services in failover mode, or run Ray clusters in fully HA mode (alpha), which removes any single point of failure from the Ray cluster. Learn more about deploying Serve in KubeRay here.

New Ray Observability Tooling

Ever wanted to check up on what's going on in your Ray workload? New in Ray 2.0, the Ray State Observability APIs (alpha) give developers visibility into the health and performance of their Ray workloads. Learn more about Ray State Observability here.

Simpler, More Powerful Library APIs

Ray Serve Deployment Graph — multi-step, multi-model inference simplified

Combining or chaining multiple models to make a single prediction has emerged as a common pattern in modern ML applications. Ray Serve's flexible execution model has always made it a popular choice for these use cases.

Building on these strengths, we are excited to introduce Ray Serve’s Deployment Graph API — a new and easier way to build, test, and deploy an inference graph of deployments. Deployment Graphs are built on Ray's intuitive task and actor APIs to make inference pipelines that mix model and business logic accessible to all ML practitioners.
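Ray Serve's actual API composes `@serve.deployment` classes and functions with `.bind()`; the Serve docs give the exact signatures. The pure-Python toy below does not use Ray. It only illustrates the underlying idea of declaring an inference graph lazily and then executing it per request, using hypothetical names (`GraphNode`, `InputPlaceholder`, `bind`) and trivial stand-in "models".

```python
from typing import Any, Callable, Dict, Optional


class InputPlaceholder:
    """Hypothetical stand-in for the request supplied when the graph runs."""


class GraphNode:
    """Hypothetical lazy call node: stores a function and its arguments."""

    def __init__(self, fn: Callable[..., Any], *args: Any) -> None:
        self.fn = fn
        self.args = args

    def execute(self, request: Any, _cache: Optional[Dict[int, Any]] = None) -> Any:
        # Cache results per request so a node shared by several branches runs once.
        cache: Dict[int, Any] = {} if _cache is None else _cache
        if id(self) not in cache:
            resolved = [self._resolve(a, request, cache) for a in self.args]
            cache[id(self)] = self.fn(*resolved)
        return cache[id(self)]

    @staticmethod
    def _resolve(arg: Any, request: Any, cache: Dict[int, Any]) -> Any:
        if isinstance(arg, InputPlaceholder):
            return request
        if isinstance(arg, GraphNode):
            return arg.execute(request, cache)
        return arg  # plain constants pass through unchanged


def bind(fn: Callable[..., Any], *args: Any) -> GraphNode:
    """Record a call without running it (loosely mirrors the idea behind Serve's .bind())."""
    return GraphNode(fn, *args)


# Stand-in "models" and business logic; real deployments would load ML models.
def preprocess(text: str) -> str:
    return text.strip().lower()


def sentiment_model(text: str) -> float:
    return 1.0 if "good" in text else 0.0


def length_model(text: str) -> float:
    return min(len(text) / 10, 1.0)


def combine(a: float, b: float) -> float:
    return (a + b) / 2


# Declare the graph once: one preprocessing step fans out to two models.
user_input = InputPlaceholder()
cleaned = bind(preprocess, user_input)
graph = bind(combine, bind(sentiment_model, cleaned), bind(length_model, cleaned))

print(graph.execute("  Good! "))  # 0.75
```

Separating graph declaration from execution is what lets a serving system place each step on its own resources; in Ray Serve each bound deployment runs as its own set of replicas, whereas this toy runs everything in one process.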

Learn more about deployment graphs, currently in beta, here.

RLlib - Updated Developer APIs and Offline Reinforcement Learning Improvements

In Ray 2.0, the RLlib team has refactored the implementation of all key RLlib algorithms to follow simpler, imperative patterns, making it easier to implement new algorithms or make adjustments to existing ones. Learn more about these new patterns here.

RLlib is also introducing a new Connectors API to make the preprocessing "glue" for environments and policy models more explicit and transparent to users, simplifying the deployment of RLlib models.
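The general idea of an explicit preprocessing pipeline can be sketched in plain Python. This is not RLlib's Connectors API, whose classes and signatures are not modeled here; `clip`, `scale`, and `ConnectorPipeline` are hypothetical names chosen for illustration. The point is that each transform is a visible, ordered step rather than glue code hidden inside an environment wrapper or model.

```python
from typing import Callable, List, Sequence

Obs = List[float]
# Hypothetical connector type: a function from one observation to another.
Connector = Callable[[Obs], Obs]


def clip(low: float, high: float) -> Connector:
    """Build a connector that clips every feature into [low, high]."""
    def _clip(obs: Obs) -> Obs:
        return [min(max(x, low), high) for x in obs]
    return _clip


def scale(factor: float) -> Connector:
    """Build a connector that multiplies every feature by a constant."""
    def _scale(obs: Obs) -> Obs:
        return [x * factor for x in obs]
    return _scale


class ConnectorPipeline:
    """Hypothetical explicit pipeline: applies connectors in a fixed, visible order."""

    def __init__(self, connectors: Sequence[Connector]) -> None:
        self.connectors = list(connectors)

    def __call__(self, obs: Obs) -> Obs:
        for connector in self.connectors:
            obs = connector(obs)
        return obs


# The same pipeline object can travel with the policy and be reused at serving time.
pipeline = ConnectorPipeline([clip(-1.0, 1.0), scale(0.5)])
print(pipeline([2.0, -3.0, 0.5]))  # [0.5, -0.5, 0.25]
```

Because the pipeline is an ordinary object, the exact preprocessing used during training can be saved and applied again at inference time, which is the deployment problem the paragraph above describes.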

Finally, Ray 2.0 adds new algorithms and functionality to help run reinforcement learning workloads in production. RLlib now supports Critic Regularized Regression (CRR), a state-of-the-art algorithm for offline RL, and the Doubly Robust (DR) off-policy estimator, a method for off-policy policy evaluation. Learn more about RLlib’s Offline RL support here.
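For readers new to off-policy evaluation, here is a minimal sketch of the per-trajectory doubly robust recursion described by Jiang and Li (2016). It is plain Python, not RLlib's estimator class, and it assumes you already have per-step importance ratios and model estimates `q_hat` and `v_hat` (how such a model is fitted is not shown). The estimate starts from the model's value prediction and adds an importance-weighted correction for the model's error at each step, which is why it can have lower variance than pure importance sampling when the model is accurate.

```python
from typing import Sequence


def doubly_robust_estimate(
    rewards: Sequence[float],
    rhos: Sequence[float],
    q_hat: Sequence[float],
    v_hat: Sequence[float],
    gamma: float,
) -> float:
    """Per-trajectory DR value estimate for a target policy pi (sketch, not RLlib code).

    rewards[t]: reward logged at step t under the behavior policy mu
    rhos[t]:    importance ratio pi(a_t | s_t) / mu(a_t | s_t)
    q_hat[t]:   model estimate Q_hat(s_t, a_t)
    v_hat[t]:   model estimate V_hat(s_t) = sum_a pi(a | s_t) * Q_hat(s_t, a)
    """
    n = len(rewards)
    if not (len(rhos) == len(q_hat) == len(v_hat) == n):
        raise ValueError("all inputs must have the same length")
    estimate = 0.0
    # Backward recursion:
    # V_DR(t) = V_hat(s_t) + rho_t * (r_t + gamma * V_DR(t + 1) - Q_hat(s_t, a_t))
    for t in reversed(range(n)):
        estimate = v_hat[t] + rhos[t] * (rewards[t] + gamma * estimate - q_hat[t])
    return estimate


# Illustrative inputs only: (rewards, rhos, q_hat, v_hat) for two logged episodes.
trajectories = [
    ([1.0, 0.0], [1.2, 0.8], [0.9, 0.1], [1.0, 0.2]),
    ([0.0, 1.0], [0.5, 1.5], [0.2, 0.8], [0.3, 0.7]),
]
values = [doubly_robust_estimate(r, rho, q, v, gamma=0.99) for r, rho, q, v in trajectories]
print(sum(values) / len(values))  # average DR estimate over the logged episodes
```

Two sanity checks follow from the recursion: with all ratios equal to 1 and a zero model, the estimate reduces to the discounted return; with all ratios equal to 0, it reduces to the model's prediction `v_hat[0]`.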

Takeaway

To sum up, Ray 2.0 is a major step towards our goal of simplifying compute for ML practitioners and infrastructure teams. Its new capabilities open up new opportunities and applications for both communities.

Please try it out and let us know your feedback!

*Update Sep 16, 2023: We are sunsetting the "Ray AIR" concept and namespace starting with Ray 2.7. The changes follow the proposal outlined in this REP.