The Ray Platform for AI Builders

Made by the creators of Ray, Anyscale helps teams build and run data and AI workloads of any size with ease, reliability and cost-efficiency.

All your AI.

One platform.

Any cloud.

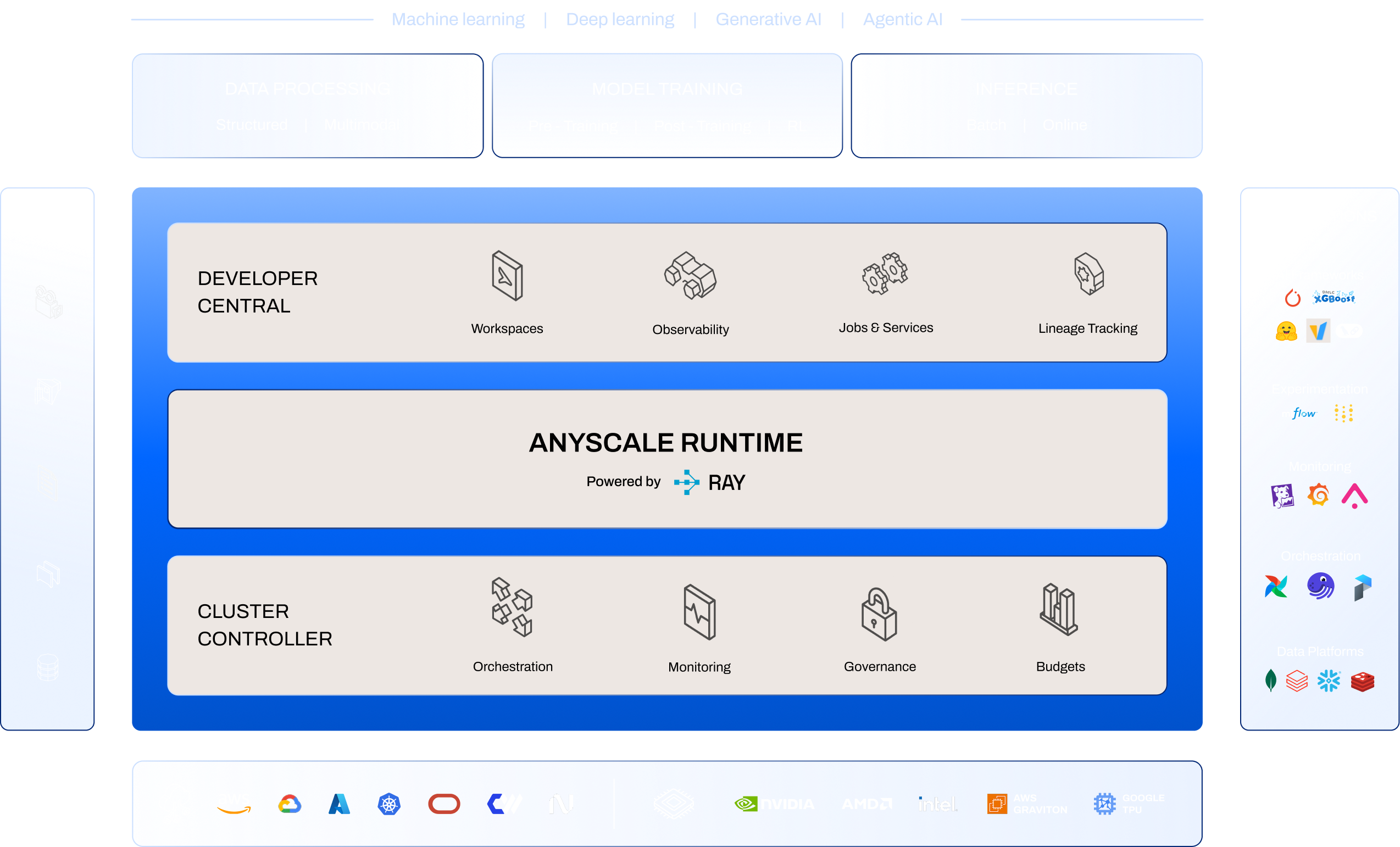

Developer central

Accelerate building distributed Python apps with complete dev tools

Scale development without limits using remote clusters accessible from an in-browser IDE, VS Code desktop, or Cursor. Iterate quickly with automatic propagation of new container and uv dependencies across Ray nodes, and save costs with built-in idle termination.

Anyscale runtime

Scale Ray workloads with performance optimizations

Achieve at least 2x faster execution for Ray workloads without code changes. Speed up Ray Data with accelerated metadata fetching, boost Ray Train with elastic training, and cut startup times in Ray Serve with faster model loading and cluster startup.

Cluster Controller

Run reliably with fully-managed Ray clusters in your cloud of choice

Scale compute from zero to hundreds of nodes in under a minute and dynamically adjust compute to match demand with fast cold starts and elastic scaling.

Workloads

With Anyscale, unleash the full power of Ray with improved resource utilization and cost-efficient performance at scale.

Distributed Model Training

- Scale XGBoost and PyTorch training jobs with the Ray Train library, whose familiar APIs seamlessly distribute computation across GPUs.

- Accelerate adjacent workflows like data loading, hyperparameter tuning and cross-validation on tabular or unstructured datasets without single-node capacity limits.

- Achieve faster and more reliable training on Anyscale with advanced observability for distributed debugging and tuning, and with dependable execution on managed Ray clusters.

Ecosystem

Integrate Anyscale with popular AI tools and frameworks

Resources

Case Study

50x larger datasets processed for more accurate models

By migrating to Anyscale, Coinbase accelerated model iteration cycles, maximized hardware efficiency, and eliminated infrastructure overhead.

"Ray and Anyscale aligned with our vision: to iterate faster, scale smarter, and operate more efficiently."

Wenyue Liu | Senior Machine Learning Platform Engineer, Coinbase

FAQs

Try Anyscale Today

Build, deploy, and manage scalable AI and Python applications on the leading AI platform. Unlock your AI potential with Anyscale.