Ray Serve is a scalable model serving library for building online inference APIs. Serve is framework-agnostic, so you can use a single toolkit to serve everything from deep learning models built with frameworks like PyTorch, TensorFlow, and Keras, to Scikit-Learn models, to arbitrary Python business logic. It also includes features and performance optimizations for serving large language models, such as response streaming, dynamic request batching, and multi-node/multi-GPU serving.

Ray Serve is particularly well suited for model composition and many-model serving, enabling you to build a complex inference service consisting of multiple ML models and business logic, all in Python code.

Ray Serve is built on top of Ray, so it easily scales to many machines and offers flexible scheduling support such as fractional GPUs so you can share resources and serve many machine learning models at low cost.

Why Use Ray Serve?

As models grow in size, they require more advanced and varied AI accelerators (GPU, TPU, AWS Inferentia) for better performance, as well as specific features for serving ML models. Anyscale’s Ray Serve offers a number of built-in primitives to make ML serving more efficient, including:

Fast Node Startup: Anyscale’s Ray Serve can start up a node in as little as 60 seconds.

Autoscaling: Scale node usage up and down depending on traffic.

Replica Compaction: Anyscale will automatically combine and collapse nodes in order to reduce resource fragmentation.

Scale to Zero: Release your resources when there is no traffic in order to reduce costs.

Heterogeneous Compute: Use GPUs and CPUs in the same cluster.
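To make the Autoscaling and Scale to Zero items above more concrete, here is a minimal sketch of a load-based replica policy in plain Python. It is an illustration only: the function name, its parameters, and the policy itself are assumptions made for this example, not the algorithm that Anyscale or Ray Serve actually uses.

```python
import math


def desired_replicas(
    ongoing_requests: int,
    target_per_replica: int,
    min_replicas: int = 0,
    max_replicas: int = 10,
) -> int:
    """Return the replica count a simple load-based policy would request.

    Hypothetical policy: aim for at most `target_per_replica` in-flight
    requests per replica, then clamp the result to the configured bounds.
    With min_replicas=0 the service releases all replicas when idle.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    needed = math.ceil(ongoing_requests / target_per_replica)
    return max(min_replicas, min(max_replicas, needed))
```

With a target of 2 in-flight requests per replica, 5 ongoing requests ask for 3 replicas, no traffic asks for 0 (scale to zero), and a burst of 100 requests is capped at the default `max_replicas` of 10.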

Multi-Tenant Application Deployments

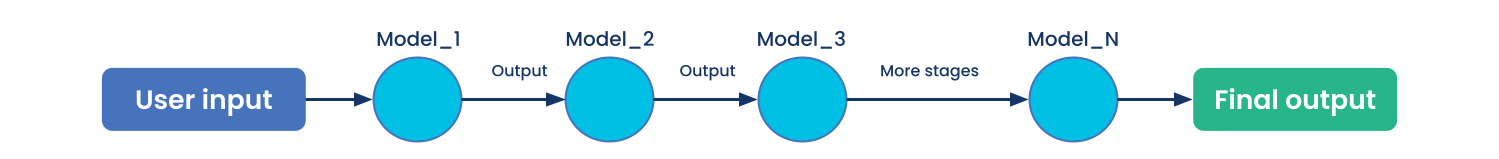

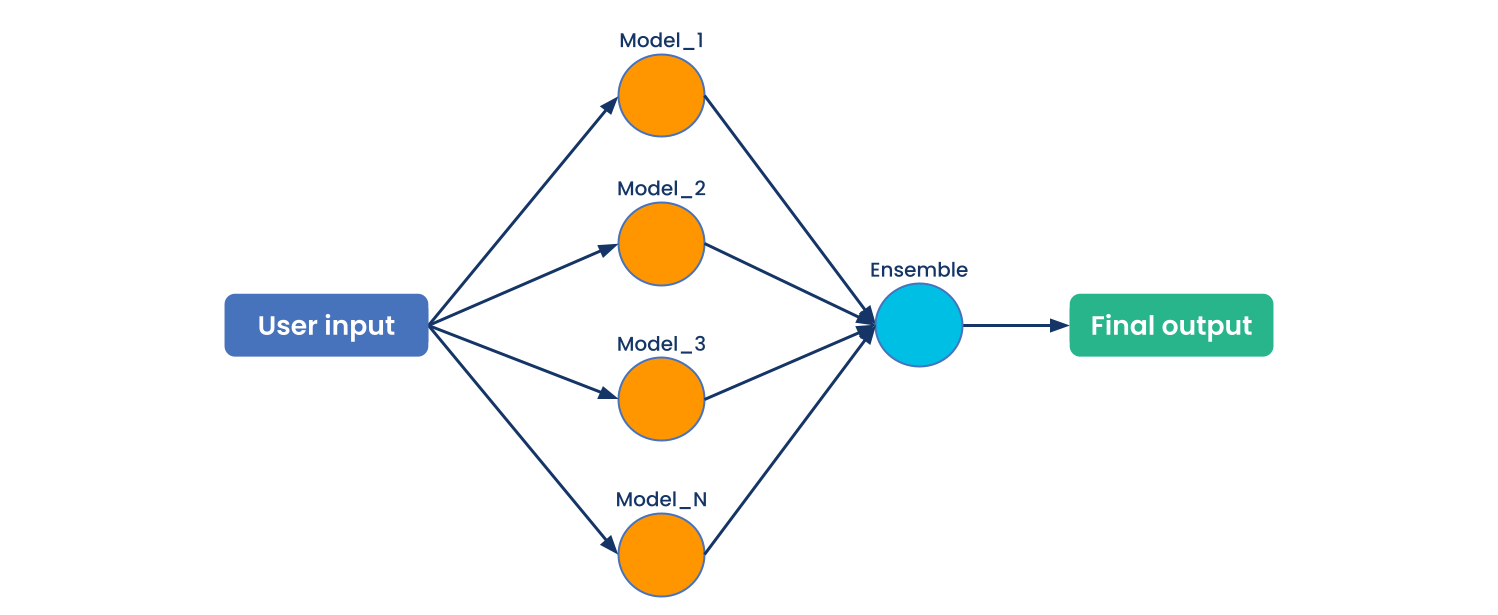

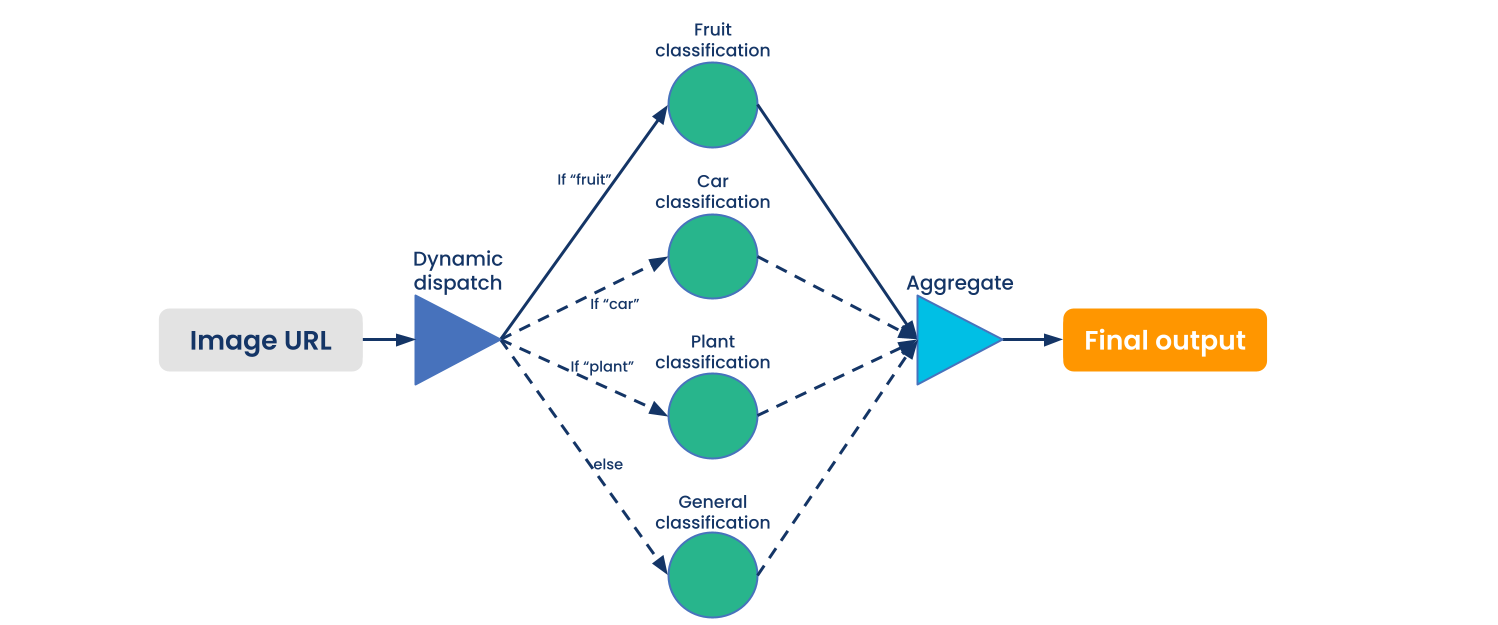

Machine learning serving pipelines are getting longer, wider, and more dynamic. They often consist of many models to make a single prediction. To support complex workloads that require composing many different models together, machine learning applications in production typically follow patterns such as model chaining, fanout and ensemble, or dynamic selection and dispatch.

Figure 1: Chaining multiple models in sequence. This is common in applications like image processing, where each image is passed through a series of transformations.

Figure 2: Ensembling multiple models. Often, business logic is used to select the best output or filter the outputs for a given request.

Figure 3: Dynamically selecting and dispatching to models. Many use cases don’t require every available model for every input request. This pattern allows only querying the necessary models.

Ray Serve was built to support these patterns and is both easy to develop and production ready. It has unique strengths suited to multi-step, multi-model inference pipelines, including:

Flexible scheduling

Efficient communication

Fractional resource allocation

Shared memory

Compared with other serving frameworks' inference graph APIs, which are less Pythonic and rely largely on hand-written YAML, Ray Serve offers easy, composable, Pythonic APIs that follow Ray's philosophy of simple API abstractions.
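The three patterns described in this section (chaining, ensembling, and dynamic dispatch) can be sketched in a few lines of ordinary Python. The example below deliberately uses only `asyncio` from the standard library rather than the Ray Serve API, and every "model" in it is a hypothetical stand-in function that returns a fixed value. It is meant to show the shape of composition written as plain function calls, not how Ray Serve implements it.

```python
import asyncio


# Stand-in "models": hypothetical async callables, not real ML models.
async def resize(image: str) -> str:
    return f"resized({image})"


async def denoise(image: str) -> str:
    return f"denoised({image})"


async def classifier_a(image: str) -> tuple[str, float]:
    return ("cat", 0.70)


async def classifier_b(image: str) -> tuple[str, float]:
    return ("dog", 0.90)


async def chain(image: str) -> str:
    """Pattern 1: pass the input through the models in sequence."""
    return await denoise(await resize(image))


async def ensemble(image: str) -> tuple[str, float]:
    """Pattern 2: call every model concurrently, then let business
    logic keep the highest-confidence answer."""
    results = await asyncio.gather(classifier_a(image), classifier_b(image))
    return max(results, key=lambda r: r[1])


async def dispatch(image: str, kind: str) -> tuple[str, float]:
    """Pattern 3: query only the model this request needs."""
    routes = {"pets": classifier_a, "wildlife": classifier_b}
    return await routes[kind](image)


async def main() -> None:
    print(await chain("img.png"))
    print(await ensemble("img.png"))
    print(await dispatch("img.png", "pets"))


if __name__ == "__main__":
    asyncio.run(main())
```

In a real deployment each stand-in would be a separately scaled model, possibly on a different machine, but the calling code would keep roughly this shape.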

4 Benefits of Ray Serve

Build End-to-End ML-Powered Applications

Many solutions for ML serving focus on “tensor-in, tensor-out” serving: that is, they wrap ML models behind a predefined, structured endpoint. However, machine learning isn’t useful in isolation. It’s often important to combine machine learning with business logic and traditional web serving logic such as database queries.

Ray Serve is unique in that it allows you to build and deploy an end-to-end distributed serving application in a single framework. You can combine multiple ML models, business logic, and expressive HTTP handling using Serve’s FastAPI integration (see FastAPI HTTP Deployments) to build your entire application as one Python program.

Combine Multiple Models Using a Programmable API

Often, solving a problem requires more than just a single machine learning model. For instance, image processing applications typically require a multi-stage pipeline consisting of steps like preprocessing, segmentation, and filtering to achieve their end goal. In many cases each model may use a different architecture or framework and require different resources (like CPUs vs GPUs).

Many other solutions support defining a static graph in YAML or some other configuration language. This can be limiting and hard to work with. Ray Serve, on the other hand, supports multi-model composition using a programmable API where calls to different models look just like function calls. The models can use different resources and run across different machines in the cluster, but you can write it like a regular program. Check out our documentation on how to Deploy Compositions of Models for more details.

Flexibly Scale Up and Allocate Resources

Machine learning models are compute-intensive and therefore can be very expensive to operate. A key requirement for any ML serving system is being able to dynamically scale up and down and allocate the right resources for each model to handle the request load while saving cost.

That’s why Ray Serve supports dynamically scaling a model’s resources up and down by adjusting the number of replicas, batching requests to take advantage of efficient vectorized operations (especially important on GPUs), and allocating resources flexibly so that you can serve many models on limited hardware.
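As a rough illustration of request batching, the sketch below groups individual requests so that the model function runs once per batch instead of once per request. It uses only the standard library. The `Batcher` class, its parameters, and its flush policy are hypothetical and written for this example; Ray Serve ships its own batching support (the `@serve.batch` decorator), and this is not a description of how that is implemented.

```python
import asyncio
from typing import Any, Callable, List, Optional, Tuple


class Batcher:
    """Group single requests into batches for one vectorized call.

    Hypothetical helper: a batch is flushed when it reaches
    `max_batch_size`, or `wait_s` seconds after its first request
    arrived, whichever happens first.
    """

    def __init__(self, fn: Callable[[List[Any]], List[Any]],
                 max_batch_size: int = 4, wait_s: float = 0.01) -> None:
        self._fn = fn
        self._max = max_batch_size
        self._wait_s = wait_s
        self._pending: List[Tuple[Any, asyncio.Future]] = []
        self._timer: Optional[asyncio.TimerHandle] = None

    async def submit(self, item: Any) -> Any:
        loop = asyncio.get_running_loop()
        fut = loop.create_future()
        self._pending.append((item, fut))
        if len(self._pending) >= self._max:
            self._flush()  # batch is full: run it right away
        elif self._timer is None:
            # First request of a new batch: start the wait timer.
            self._timer = loop.call_later(self._wait_s, self._flush)
        return await fut

    def _flush(self) -> None:
        if self._timer is not None:
            self._timer.cancel()
            self._timer = None
        batch, self._pending = self._pending, []
        if not batch:
            return
        try:
            # One call for the whole batch; fn must return one result per item.
            results = self._fn([item for item, _ in batch])
        except Exception as exc:
            for _, fut in batch:
                if not fut.done():
                    fut.set_exception(exc)
            return
        for (_, fut), result in zip(batch, results):
            if not fut.done():
                fut.set_result(result)


async def demo() -> Tuple[List[int], List[int]]:
    batch_sizes: List[int] = []

    def double_all(xs: List[int]) -> List[int]:
        batch_sizes.append(len(xs))  # record how the requests were grouped
        return [2 * x for x in xs]

    batcher = Batcher(double_all, max_batch_size=4, wait_s=0.01)
    results = await asyncio.gather(*(batcher.submit(i) for i in range(6)))
    return results, batch_sizes
```

Running `asyncio.run(demo())` submits six requests at once: the first four fill a batch and run immediately, and the remaining two run together when the timer fires, so the model function is called twice rather than six times.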

Avoid Framework or Vendor Lock-In

Machine learning moves fast, with new libraries and model architectures being released all the time, so it’s important to avoid locking yourself into a solution that is tied to a specific framework. This is particularly important in serving, where making changes to your infrastructure can be time consuming, expensive, and risky. Additionally, many hosted solutions are limited to a single cloud provider, which can be a problem in today’s multi-cloud world.

Ray Serve is not tied to any specific machine learning library or framework, but rather provides a general-purpose scalable serving layer. Because it’s built on top of Ray, you can run it anywhere Ray can: on your laptop, Kubernetes, any major cloud provider, or even on-premises.