Ray is an open source AI Compute Engine for scaling AI and Python applications such as machine learning workloads. With Ray, developers can build and run distributed applications without any prior distributed systems expertise.

Why Ray?

As AI workloads grow and their compute requirements become more demanding, AI and ML developers need to move from developing on their laptops to distributed computing: scaling workloads across many CPUs and GPUs, typically on cloud infrastructure.

Ray reduces the complexity of running distributed machine learning workloads, from individual jobs to end-to-end workflows, with these components:

Scalable libraries for common machine learning tasks such as data preprocessing, distributed training, hyperparameter tuning, reinforcement learning, and model serving.

Pythonic distributed computing primitives for parallelizing and scaling Python applications.

Integrations and utilities for deploying Ray clusters on existing tools and infrastructure such as Kubernetes, AWS, GCP, and Azure.

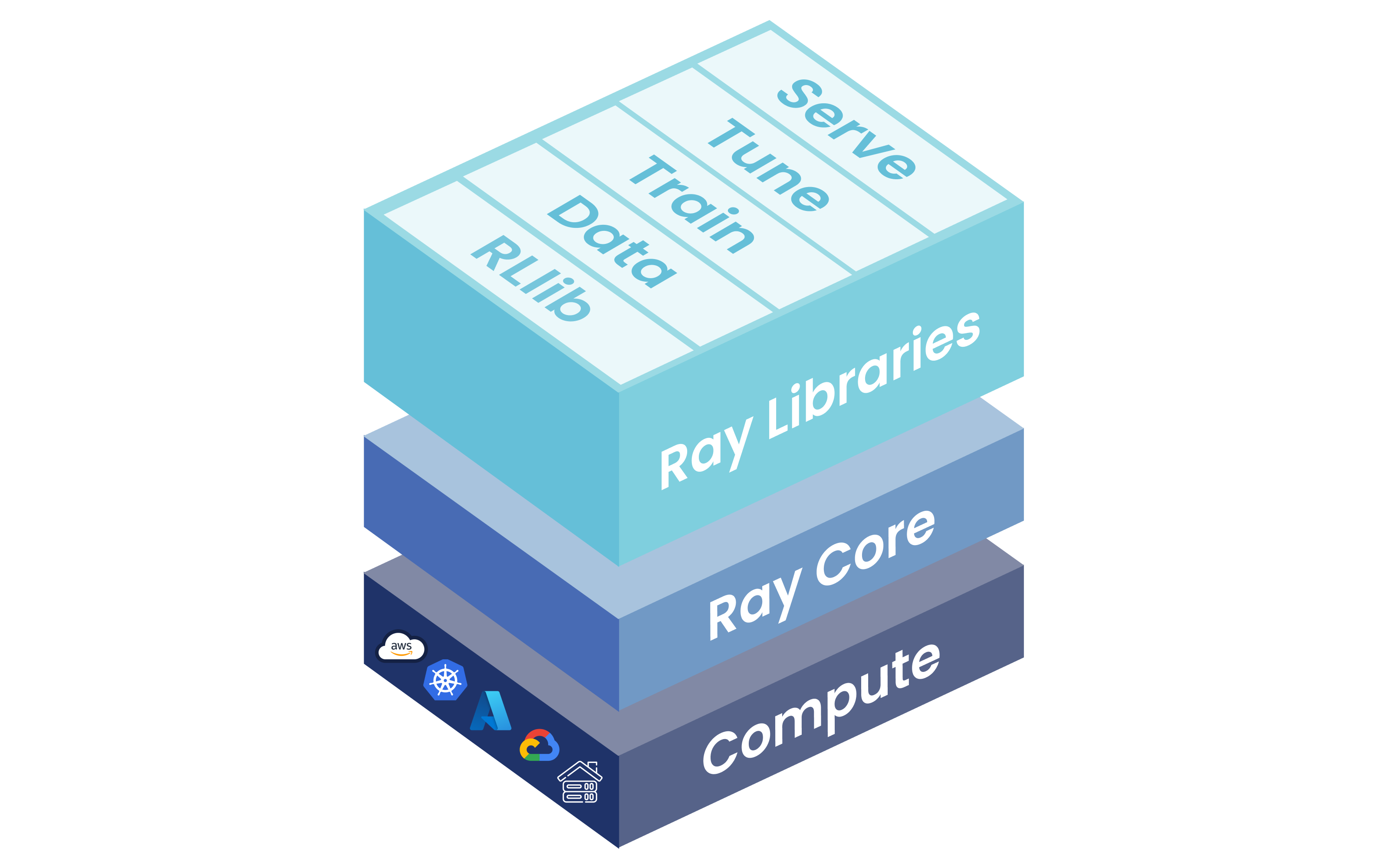

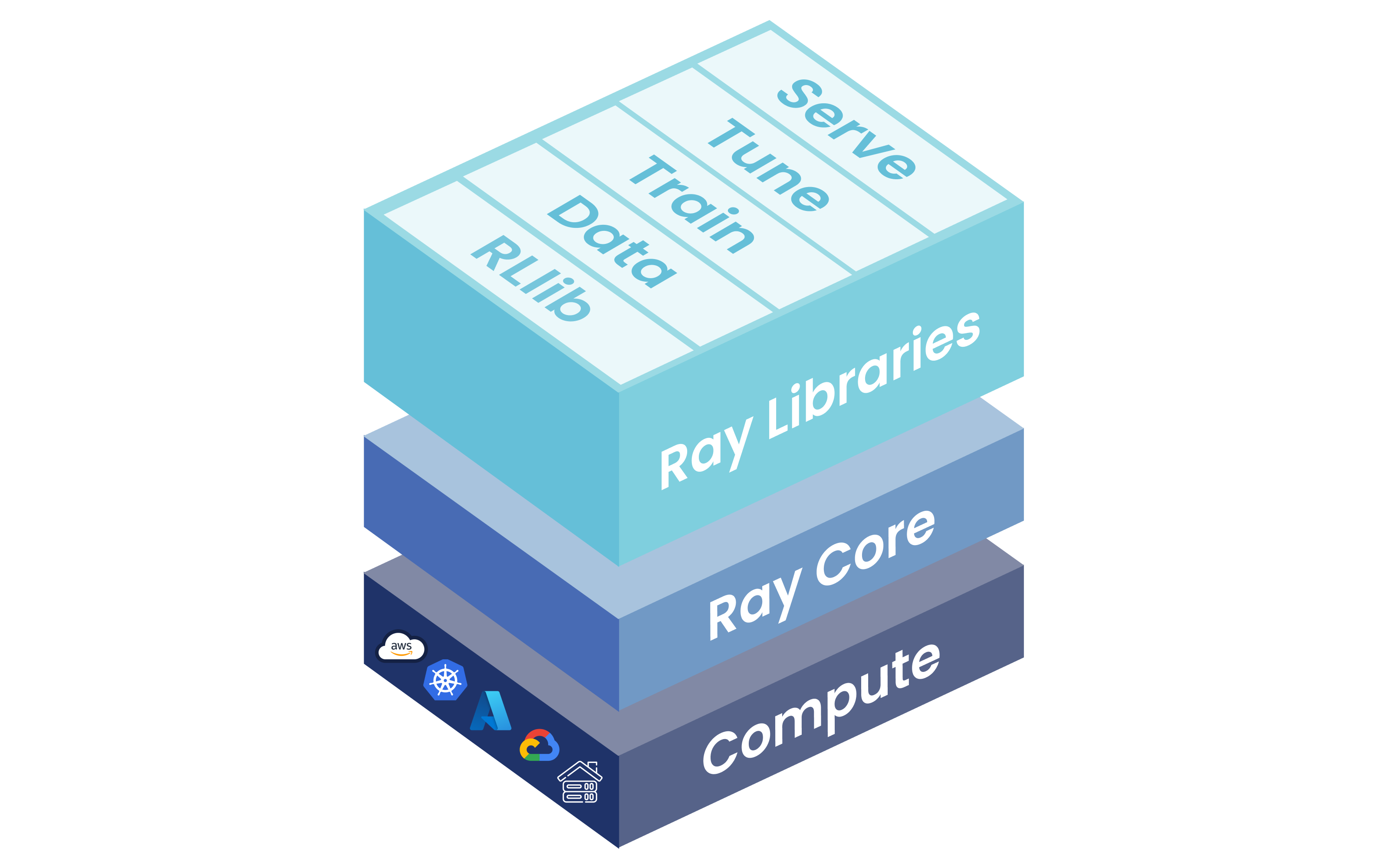

Ray Framework: Ray Core + Libraries

Ray’s unified compute framework consists of three layers:

Ray AI Libraries: An open-source, Python, domain-specific set of libraries that equip ML engineers, data scientists, and researchers with a scalable and unified toolkit for ML applications.

Ray Core: An open-source, Python, general purpose, distributed computing library that enables ML engineers and Python developers to scale Python applications and accelerate machine learning workloads.

Ray Clusters: A set of worker nodes connected to a common Ray head node. Ray clusters can be fixed-size, or they can autoscale up and down according to the resources requested by applications running on the cluster.

Ray Core

Ray Core facilitates distributed computing by providing a small set of core primitives (tasks, actors, and objects) for building and scaling distributed applications.

Ray Core benefits:

Python native

Enable parallel computing with one line of code

Integrate with any cloud and ML framework

Manage heterogeneous compute

Enhanced APIs

Ray Libraries

A number of machine learning libraries are built on top of Ray Core. These libraries provide higher-level features for key stages of distributed ML workloads.

Ray’s libraries are for data scientists and ML engineers alike. Data scientists can use them to scale individual workloads as well as end-to-end ML applications. For ML engineers, the libraries provide scalable platform abstractions that make it easy to onboard and integrate tooling from the broader ML ecosystem.

Ray Data

Ray Data is an open source library built on top of Ray Core for scaling data processing workloads for ML. It is particularly well suited to unstructured data such as images, video, and audio.

Ray Train

Built on top of Ray, Ray Train is a library for scaling distributed model training across many CPUs and GPUs, with integrations for frameworks such as PyTorch. It works with Ray Tune for hyperparameter tuning, and Ray was instrumental in training the models behind OpenAI’s ChatGPT (GPT-3.5 and GPT-4).

Ray Tune

Ray Tune is a library for scalable hyperparameter tuning. It runs many trials in parallel, each with a different hyperparameter configuration, and can stop unpromising trials early.

Ray Serve

Ray Serve is a library for deploying and serving machine learning models at scale. Built on top of Ray Core, it is aimed primarily at online inference, such as model-backed HTTP APIs; offline batch inference is usually handled with Ray Data.

Ray RLlib

Ray RLlib (Ray Reinforcement Learning library) is a library for reinforcement learning, one of the main paradigms for training ML models alongside supervised and unsupervised learning.

Ray Clusters

A Ray cluster is a set of worker nodes connected to a Ray head node. Clusters can be fixed-size, or can be configured to autoscale up and down according to resource requirements.

Where can I deploy Ray clusters?

Ray provides native cluster deployment support on the following technology stacks:

On AWS and GCP. Community-supported Azure, Aliyun and vSphere integrations also exist.

On Kubernetes, via the officially supported KubeRay project.

To learn more about Ray clusters, refer to the Ray Clusters docs page.

Who Should Use Ray?

For data scientists and machine learning practitioners, Ray lets you scale jobs without needing infrastructure expertise:

Easily parallelize and distribute ML workloads across multiple nodes and GPUs.

Leverage the ML ecosystem with native and extensible integrations.

For ML platform builders and ML engineers, Ray:

Provides compute abstractions for creating a scalable and robust ML platform.

Provides a unified ML API that simplifies onboarding and integration with the broader ML ecosystem.

Reduces friction between development and production by enabling the same Python code to scale seamlessly from a laptop to a large cluster.

For distributed systems engineers, Ray automatically handles key processes:

Orchestration–Managing the various components of a distributed system.

Scheduling–Coordinating when and where tasks are executed.

Fault tolerance–Recovering from task, actor, and node failures so that work can still complete.

Autoscaling–Adjusting the resources allocated to match changing demand.

Understanding Ray Primitives

Ray’s key primitives are simple, but can be composed together to express almost any kind of distributed computation. These primitives work together to enable Ray to flexibly support a broad range of distributed apps.

Ray tasks are Ray’s counterpart to Python functions: a task is a function that executes asynchronously on a separate Python worker, and its result is returned as an object reference.

Ray actors are stateful workers (or services). Like tasks, actors support CPU, GPU, and custom resource requirements.

Ray objects are the values that tasks and actors create and compute on. They live in Ray’s distributed object store, can be stored anywhere in a Ray cluster, and are accessed through object references.

Ray placement groups allow users to atomically reserve groups of resources across multiple nodes.

Ray environment dependencies manage the software your applications need on the cluster; you can prepare them on the cluster in advance or install them on the fly with runtime environments.

Get Started with Ray

Ray currently officially supports x86_64, aarch64 (ARM) for Linux, and Apple silicon (M1) hardware. Ray on Windows is currently in beta.

To get started, install Ray:

$ pip install -U ray

See Installing Ray for more installation options, or check out the Ray GitHub project.

Managed Ray on Anyscale

Built by the creators of Ray, Anyscale is the smartest way to run Ray. While Ray can help you get started with distributed computing, Anyscale is the only fully-managed, all-in-one Ray platform. Get access to proprietary optimizations, additional machine learning libraries, enhanced support, and more—only on Anyscale.