Turbocharge LangChain: guide to 20x faster embedding

This is part 2 (part 1 here) of a blog series. In this blog, we’ll show you how to turbocharge embeddings. In future parts, we will show you how to combine a vector database and an LLM to create a fact-based question answering service. Additionally, we will optimize the code and measure performance: cost, latency and throughput.

Generating embeddings from documents is a critical step for LLM workflows. Many LLM apps are being built today through retrieval-based similarity search:

1. Documents are embedded and stored in a vector database.

2. Incoming queries are used to pull semantically relevant passages from the vector database, and these passages are used as context for LLMs to answer the query.
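To make the retrieval step concrete, here is a minimal pure-Python sketch of similarity search over a tiny in-memory store. The three-dimensional vectors, the passage strings, and the helper names `cosine_similarity` and `top_k` are hypothetical and exist only for illustration; a real pipeline would use model-generated embeddings and a vector database such as FAISS.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(
    query: List[float], store: Dict[str, List[float]], k: int = 1
) -> List[Tuple[str, float]]:
    """Return the k passages whose vectors are most similar to the query."""
    scored = [(text, cosine_similarity(query, vec)) for text, vec in store.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Hypothetical toy "embeddings"; real ones have hundreds of dimensions.
toy_store = {
    "passage about prompt engineering": [0.9, 0.1, 0.0],
    "passage about GPU scheduling": [0.0, 0.2, 0.9],
    "passage about vector databases": [0.1, 0.9, 0.1],
}
toy_query = [0.8, 0.2, 0.1]

best = top_k(toy_query, toy_store, k=1)
print(best[0][0])
```

The passages returned by a search like this are what gets passed to the LLM as context.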

In a previous blog post, we showed how to use LangChain to do step 1, and we also showed how to parallelize this step by using Ray tasks for faster embedding creation.

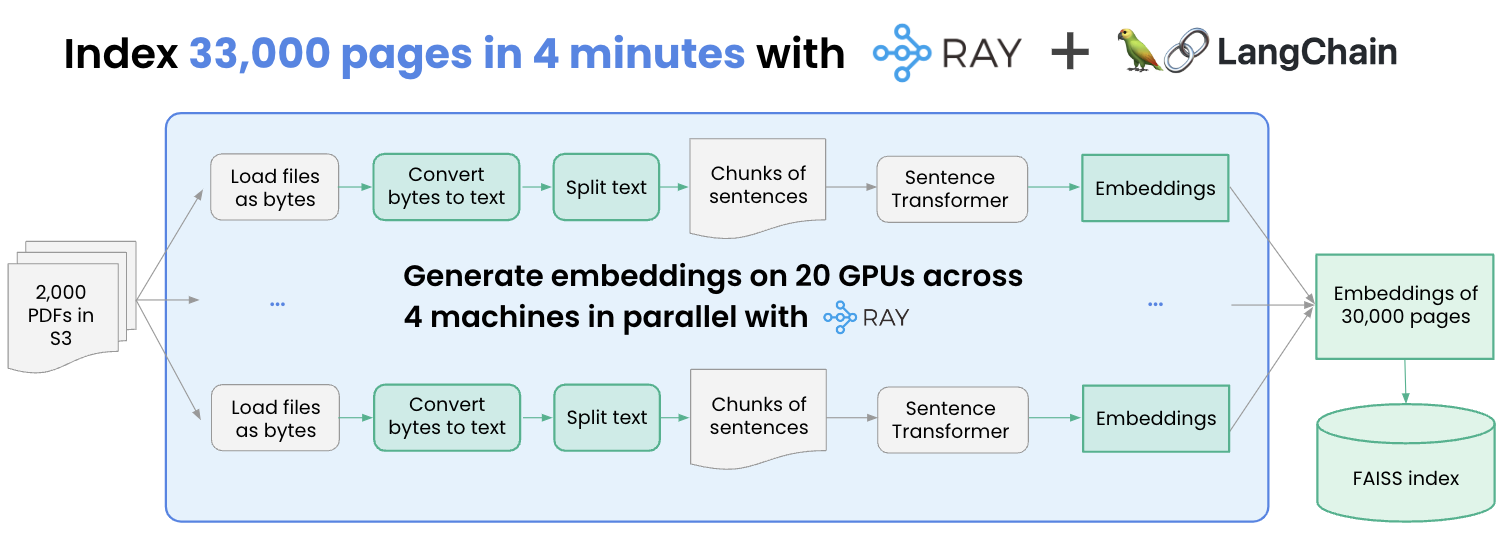

In this blog post, we take it one step further, scaling out to many more documents. Continue reading to see how to use Ray Data, a distributed data processing system that’s a part of the Ray framework, to generate and store embeddings for 2,000 PDF documents from cloud storage, parallelizing across 20 GPUs, all in under 4 minutes and in less than 100 lines of code.

While in this walkthrough we use Ray Data to read PDF files from S3 cloud storage, it also supports a wide range of other data formats, such as text, Parquet, and images, and can read data from a variety of sources like MongoDB and SQL databases.

Why do I need to parallelize this?

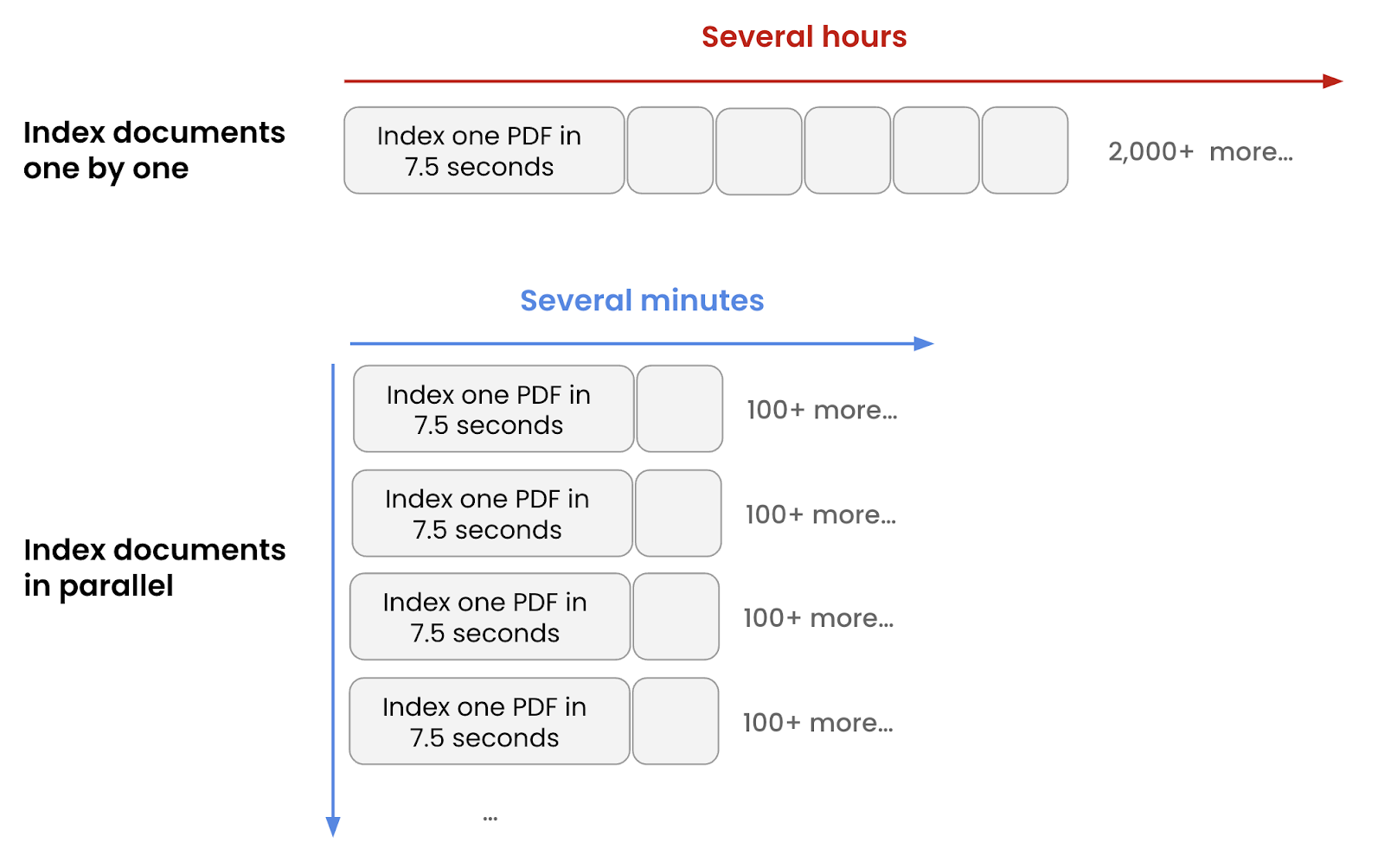

LangChain provides all the tools and the integrations for building LLM applications, including loading, embedding, and storing documents. While LangChain works great for quickly getting started with a handful of documents, when you want to scale your corpus up to thousands or more documents, this can quickly become unwieldy.

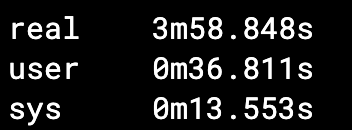

Naively using a for loop to do this for each document within a corpus of 2,000 documents takes 75 minutes:

```python
import os

from tqdm import tqdm

from langchain.document_loaders import PyPDFLoader
from langchain.embeddings import HuggingFaceEmbeddings
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.vectorstores import FAISS

text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,
    chunk_overlap=100,
    length_function=len,
)
model_name = "sentence-transformers/all-mpnet-base-v2"
model_kwargs = {"device": "cuda"}

hf = HuggingFaceEmbeddings(model_name=model_name, model_kwargs=model_kwargs)

# Put your directory containing PDFs here
directory = '/tmp/data/'
pdf_documents = [os.path.join(directory, filename) for filename in os.listdir(directory)]

langchain_documents = []
for document in tqdm(pdf_documents):
    try:
        loader = PyPDFLoader(document)
        data = loader.load()
        langchain_documents.extend(data)
    except Exception:
        # Skip any file that cannot be loaded as a PDF.
        continue

print("Num pages: ", len(langchain_documents))
print("Splitting all documents")
split_docs = text_splitter.split_documents(langchain_documents)

print("Embed and create vector index")
db = FAISS.from_documents(split_docs, embedding=hf)
```

Clearly, if you want to iterate quickly and try out multiple document corpuses, splitting techniques, chunk sizes, or embedding models, just doing this in a for loop won’t cut it.

Instead, for faster development, you need to horizontally scale, and for this you need a framework to make this parallelization very easy.

By using Ray Data, we can define our embedding generation pipeline and execute it in a few lines of code, and it will automatically scale out, leveraging the compute capabilities of all the CPUs and GPUs in our cluster.

Stages of our Data Pipeline

In this example, we want to generate embeddings for our document corpus consisting of the top 2,000 arxiv papers on “large language models”. There are over 30,000 pages in all these documents. The code for generating this dataset can be found here.

Let’s take a look at the stages of our data pipeline:

1. Load the PDF documents from our S3 bucket as raw bytes

2. Use PyPDF to convert those bytes into string text

3. Use LangChain’s text splitter to split the text into chunks

4. Use a pre-trained sentence-transformers model to embed each chunk

5. Store the embeddings and the original text into a FAISS vector store

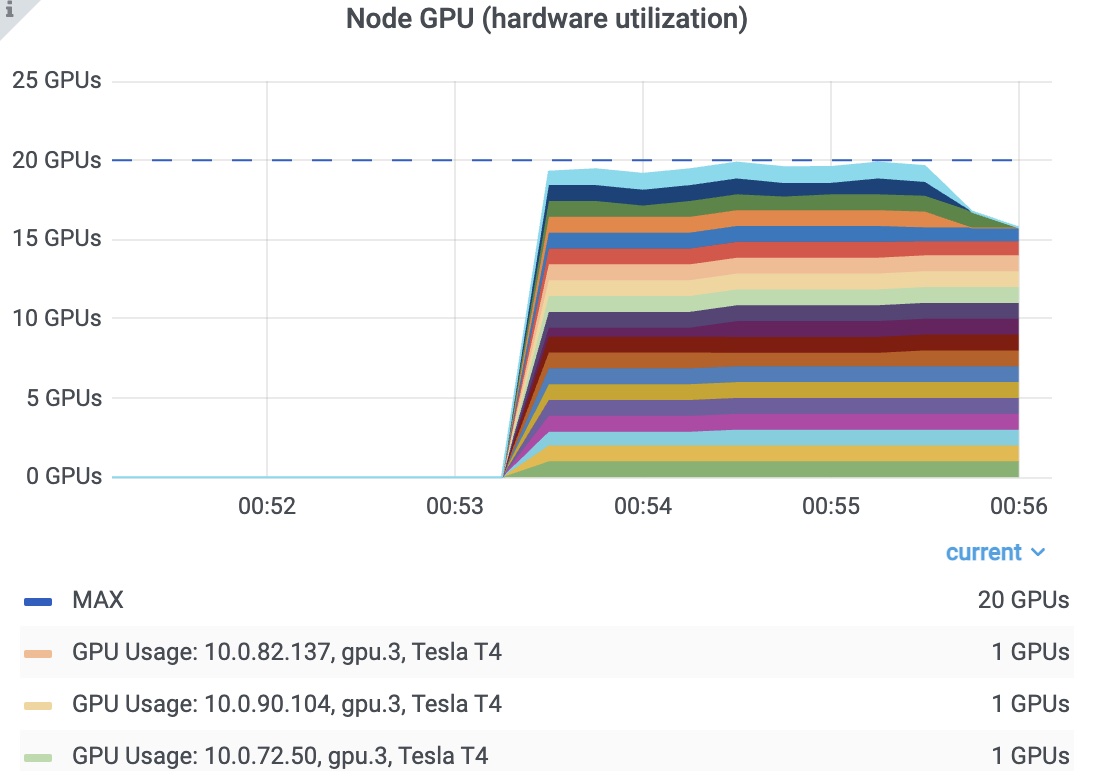
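As a rough mental model of how these stages chain together, the sketch below uses plain Python generators: each document fans out into pages, and each page fans out into chunks. This is only an analogy, not how Ray Data is implemented. The helper `flat_map`, the stage functions `to_pages` and `to_chunks`, and the `|`-delimited toy documents are all hypothetical.

```python
from typing import Callable, Iterable, Iterator, List


def flat_map(fn: Callable[[str], List[str]], items: Iterable[str]) -> Iterator[str]:
    """Apply a 1->N function to each item and flatten the results lazily."""
    for item in items:
        yield from fn(item)


calls: List[str] = []  # Records which documents have actually been processed.


def to_pages(doc: str) -> List[str]:
    """Toy stand-in for PDF parsing: pages are separated by '|'."""
    calls.append(doc)
    return doc.split("|")


def to_chunks(page: str) -> List[str]:
    """Toy stand-in for text splitting: one chunk per word."""
    return page.split()


docs = ["a b|c", "d|e f g"]

# Chaining the stages only builds the pipeline; no document is touched yet.
pipeline = flat_map(to_chunks, flat_map(to_pages, docs))
processed_before_iteration = len(calls)

# Iterating over the pipeline is what triggers execution.
chunks = list(pipeline)
print(processed_before_iteration, chunks)
```

The generator version runs on a single core. The appeal of a distributed framework is that the same chained description can be executed across many machines.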

The full data pipeline was run on 5 g4dn.12xlarge instances on AWS EC2, consisting of 20 GPUs in total. The code for the full data pipeline can be found here.

Starting the Ray Cluster

Follow the steps here to set up a multi-node Ray cluster on AWS.

Ray clusters can also be started on GCP, Azure, or other cloud providers. See the Ray Cluster documentation for full info.

Alternatively, you can use Workspaces on Anyscale to manage your Ray clusters.

Now that we have the cluster set up, let’s go through the steps in our script.

Installing Dependencies

First, we need to install the necessary dependencies on all the nodes in our Ray cluster. We can do this via Ray’s runtime environment feature.

```python
import ray

ray.init(runtime_env={"pip": ["langchain", "pypdf", "sentence_transformers", "transformers"]})
```

Stage 1 - Loading the Documents

Load the 2,143 documents from our S3 bucket as raw bytes. The S3 bucket contains unmodified PDF files that have been downloaded from arxiv.

We can easily do this via Ray Data’s read APIs, which create a Ray Dataset object. Ray Datasets are lazy: further operations can be chained, and the stages run only when execution is triggered.

```python
from ray.data.datasource import FileExtensionFilter

# Filter out non-PDF files.
ds = ray.data.read_binary_files("s3://ray-llm-batch-inference/", partition_filter=FileExtensionFilter("pdf"))
```

Stage 2 - Converting

Use PyPDF to load in the raw bytes and parse them as string text. We also skip over any documents or pages that are unparseable. Even after skipping these, we still have 33,642 pages in our dataset.

We use the pypdf library directly so that we can read PDFs from bytes rather than from file paths. Once https://github.com/hwchase17/langchain/pull/3915 is merged, LangChain’s PyPDFLoader can be used directly.

```python
import binascii
import io

import pypdf
from pypdf import PdfReader


def convert_to_text(pdf_bytes: bytes):
    pdf_bytes_io = io.BytesIO(pdf_bytes)

    try:
        pdf_doc = PdfReader(pdf_bytes_io)
    except pypdf.errors.PdfStreamError:
        # Skip pdfs that are not readable.
        # We still have over 30,000 pages after skipping these.
        return []

    text = []
    for page in pdf_doc.pages:
        try:
            text.append(page.extract_text())
        except binascii.Error:
            # Skip all pages that are not parseable due to malformed characters.
            print("parsing failed")
    return text

# We use `flat_map` as `convert_to_text` has a 1->N relationship.
# It produces N strings for each PDF (one string per page).
# Use `map` for 1->1 relationship.
ds = ds.flat_map(convert_to_text)
```

Stage 3 - Splitting

Split the text into chunks using LangChain’s TextSplitter abstraction. After applying this transformation, the 33,642 pages are split into 144,411 chunks. Each chunk will then be encoded into an embedding in Stage 4.
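To illustrate what a chunk size of 1000 with an overlap of 100 means, here is a simplified fixed-window splitter in plain Python. It is not LangChain’s algorithm: `RecursiveCharacterTextSplitter` prefers to break on separators such as paragraph and line boundaries, while this hypothetical `split_with_overlap` helper simply slides a character window.

```python
from typing import List


def split_with_overlap(
    text: str, chunk_size: int = 1000, chunk_overlap: int = 100
) -> List[str]:
    """Slide a window of chunk_size characters, stepping by chunk_size - chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window has reached the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


# A hypothetical 2,500-character page yields three overlapping chunks.
page = "x" * 2500
page_chunks = split_with_overlap(page)
print([len(chunk) for chunk in page_chunks])

# With a small window, the shared character between neighbors is easy to see.
small_chunks = split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=1)
print(small_chunks)
```

The overlap means that a sentence straddling a chunk boundary still appears intact in at least one chunk, at the cost of embedding some text twice.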

```python
from typing import List

from langchain.text_splitter import RecursiveCharacterTextSplitter


def split_text(page_text: str):
    # Use chunk_size of 1000.
    # We felt that the answer we would be looking for would be
    # around 200 words, or around 1000 characters.
    # This parameter can be modified based on your documents and use case.
    text_splitter = RecursiveCharacterTextSplitter(
        chunk_size=1000, chunk_overlap=100, length_function=len
    )
    chunks: List[str] = text_splitter.split_text(page_text)

    # Replace newlines with spaces within each chunk.
    chunks = [text.replace("\n", " ") for text in chunks]
    return chunks

# We use `flat_map` as `split_text` has a 1->N relationship.
# It produces N output chunks for each input string.
# Use `map` for 1->1 relationship.
ds = ds.flat_map(split_text)
```

Stage 4 - Embedding

Then, we can embed each of our chunks using a pre-trained sentence transformer model on GPUs. Here, we leverage Ray Actors for stateful computation, allowing us to initialize a model only once per GPU, rather than for every single batch.

At the end of this stage, we have 144,411 embeddings, produced by 20 model replicas running across 20 GPUs, each processing a batch of 100 chunks at a time to maximize GPU utilization.

```python
from typing import List

from sentence_transformers import SentenceTransformer

model_name = "sentence-transformers/all-mpnet-base-v2"


class Embed:
    def __init__(self):
        # Specify "cuda" to move the model to GPU.
        self.transformer = SentenceTransformer(model_name, device="cuda")

    def __call__(self, text_batch: List[str]):
        # We manually encode using sentence_transformer since LangChain
        # HuggingfaceEmbeddings does not support specifying a batch size yet.
        embeddings = self.transformer.encode(
            text_batch,
            batch_size=100,  # Large batch size to maximize GPU utilization.
            device="cuda",
        ).tolist()

        return list(zip(text_batch, embeddings))

# Use `map_batches` since we want to specify a batch size to maximize GPU utilization.
ds = ds.map_batches(
    Embed,
    # Large batch size to maximize GPU utilization.
    # Too large a batch size may result in GPU running out of memory.
    # If the chunk size is increased, then decrease batch size.
    # If the chunk size is decreased, then increase batch size.
    batch_size=100,
    compute=ray.data.ActorPoolStrategy(min_size=20, max_size=20),  # I have 20 GPUs in my cluster
    num_gpus=1,  # 1 GPU for each actor.
)
```

We use the `sentence_transformers` library directly so that we can provide a specific batch size. Once https://github.com/hwchase17/langchain/pull/3914 is merged, LangChain’s `HuggingfaceEmbeddings` can be used instead.
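For a sense of scale, the sketch below works out how many batches of 100 the 144,411 chunks produce and how many batches each of the 20 replicas would handle if the work were spread evenly. The even split is an assumption made for the arithmetic, not a guarantee about scheduling, and `iter_batches` is a hypothetical helper that only shows what fixed-size batching looks like.

```python
import math
from typing import Iterator, List, Sequence


def iter_batches(items: Sequence[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive fixed-size batches; the last one may be smaller."""
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


num_chunks = 144_411   # Chunks after the splitting stage (from the post).
batch_size = 100       # Batch size passed to map_batches (from the post).
num_replicas = 20      # One model replica per GPU (from the post).

num_batches = math.ceil(num_chunks / batch_size)
# Assumes an even spread of batches across replicas.
max_batches_per_replica = math.ceil(num_batches / num_replicas)
print(num_batches, max_batches_per_replica)

# Small demonstration of the batching helper itself.
demo_batches = list(iter_batches(["a", "b", "c", "d", "e"], 2))
print(demo_batches)
```

Under those assumptions, each replica loads its model once and then reuses it for roughly 70 batches, which is why initializing the model per actor rather than per batch matters.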

Stage 5 - Storing

Finally, we can execute this Data Pipeline by iterating through it, and we store the results in a persisted FAISS vector database for future querying.

```python
from langchain.embeddings import HuggingFaceEmbeddings
from langchain.vectorstores import FAISS

# Iterating over the dataset triggers execution of the full pipeline.
text_and_embeddings = []
for output in ds.iter_rows():
    text_and_embeddings.append(output)

vector_store = FAISS.from_embeddings(
    text_and_embeddings,
    # Provide the embedding model to embed the query.
    # The documents are already embedded.
    embedding=HuggingFaceEmbeddings(model_name=model_name)
)

# Persist the vector store.
vector_store.save_local("faiss_index")
```

Execution

Executing this code, we see that all 20 GPUs run at near 100% utilization. What would normally take over an hour to run can now be done in under 4 minutes! If you use AWS spot instances, this would only cost $0.95 total.
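As a back-of-the-envelope check on the headline number, the arithmetic below compares the 75-minute for loop against the 4-minute upper bound for the distributed run. Treating the baseline as a single-GPU run is an assumption; the post does not state the baseline hardware. Because the distributed run finished in under 4 minutes, both results are lower bounds.

```python
baseline_minutes = 75      # For-loop runtime quoted earlier in the post.
distributed_minutes = 4    # Upper bound: the run finished in under 4 minutes.
num_gpus = 20              # GPUs in the cluster.

# Lower bound on the speedup, since the actual runtime was below 4 minutes.
speedup = baseline_minutes / distributed_minutes

# Fraction of ideal linear scaling, assuming the baseline used one GPU.
scaling_efficiency = speedup / num_gpus

print(f"speedup >= {speedup:.2f}x, efficiency >= {scaling_efficiency:.1%}")
```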

Querying the Vector Database

We can now load in our persisted LangChain FAISS database, and query it for similarity search. Let’s see the top document that’s most relevant to the “prompt engineering” query:

```python
from langchain.embeddings import HuggingFaceEmbeddings
from langchain.vectorstores import FAISS

model_name = "sentence-transformers/all-mpnet-base-v2"
query_embedding = HuggingFaceEmbeddings(model_name=model_name)
db = FAISS.load_local("faiss_index", query_embedding)
documents = db.similarity_search(query="prompt engineering", k=1)
[doc.page_content for doc in documents]
```

```
['Prompt Engineering for Job Classification 7 5 LLMs & Prompt Engineering Table '
 '3. Overview of the various prompt modifications explored in thi s study. '
 'Short name Description Baseline Provide a a job posting and asking if it is '
 'fit for a graduate. CoT Give a few examples of accurate classification before '
 'queryi ng. Zero-CoT Ask the model to reason step-by-step before providing '
 'its an swer. rawinst Give instructions about its role and the task by adding '
 'to the user msg. sysinst Give instructions about its role and the task as a '
 'system msg. bothinst Split instructions with role as a system msg and task '
 'as a user msg. mock Give task instructions by mocking a discussion where it '
 'ackn owledges them. reit Reinforce key elements in the instructions by '
 'repeating the m. strict Ask the model to answer by strictly following a '
 'given templat e. loose Ask for just the final answer to be given following a '
 'given temp late. right Asking the model to reach the right conclusion.']
```

Next Steps

See part 3 here.

Review the code and data used in this blog in the following Github repo.

See our earlier blog series on solving Generative AI infrastructure with Ray.

If you are interested in learning more about Ray, see Ray.io and Docs.Ray.io.

To connect with the Ray community join #LLM on the Ray Slack or our Discuss forum.

If you are interested in our Ray hosted service for ML Training and Serving, see Anyscale.com/Platform and click the 'Try it now' button.

Ray Summit 2023: If you are interested in learning much more about how Ray can be used to build performant and scalable LLM applications and to fine-tune, train, and serve LLMs on Ray, join Ray Summit on September 18-20th! We have a set of great keynote speakers including John Schulman from OpenAI and Aidan Gomez from Cohere, community and tech talks about Ray, as well as practical training focused on LLMs.
