Introducing Elastic Distributed Training on Anyscale

As data and models grow in scale, running ML training jobs on a cluster of GPU nodes has become essential. Distributed ML training lets practitioners train models in reasonable time frames, but it also introduces more opportunities for failures during training.

Furthermore, practitioners who use spot instances to reduce cost face a higher failure rate because of spot preemptions. Combined with low spot availability for popular GPU node types, this can delay jobs for long periods, which defeats the original cost-saving objective.

The Solution: Elastic Training with Ray on Anyscale

Anyscale now supports elastic training, enabling jobs to seamlessly adapt to changes in resource availability. This ensures continuous execution despite hardware failures or node preemptions, avoiding idle or wasted time. As more nodes become available, the cluster dynamically scales up to speed up training with more worker processes.

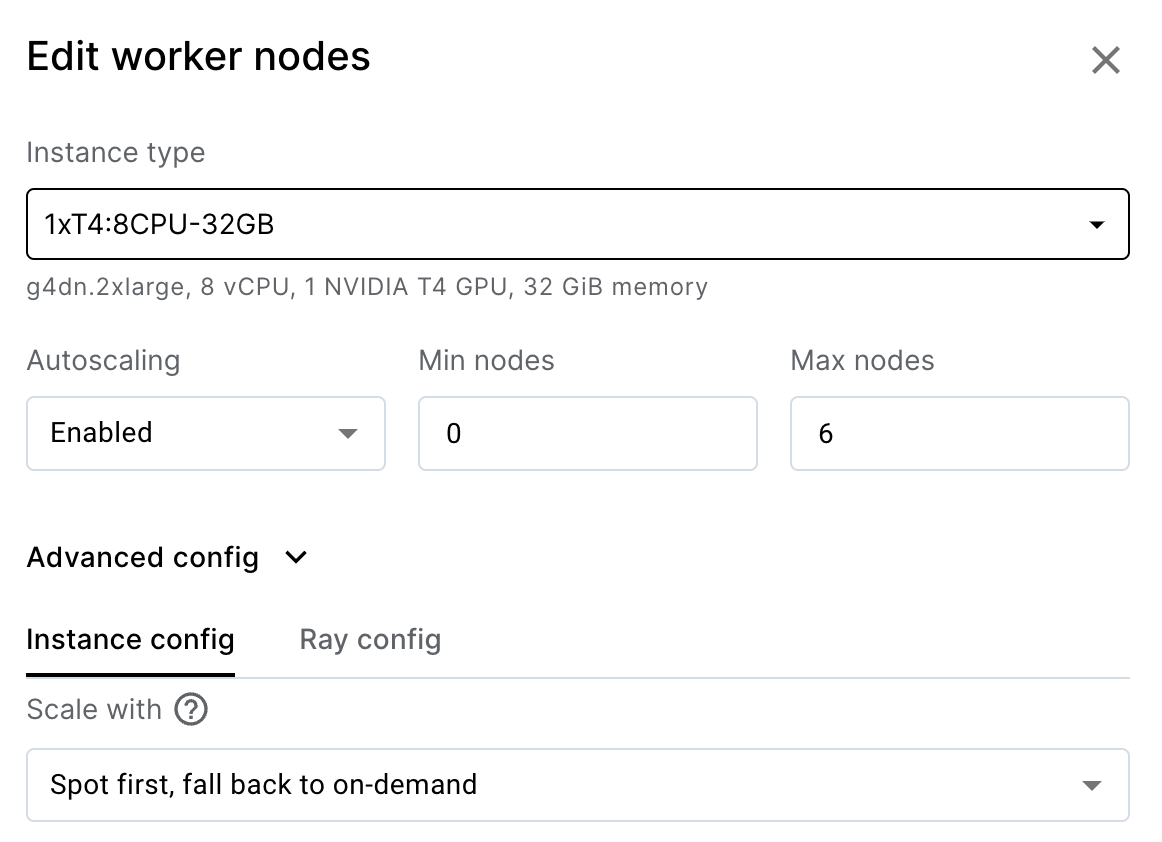

With Anyscale’s support for spot instances and configurable fallback to on-demand instances, users gain fine-grained control over job execution and cost efficiency. For example, a high-priority training job can be set to prefer spot instances but fall back to on-demand instances if needed. This approach maintains the largest possible cluster to ensure timely results while optimizing for cost savings.

For non-critical jobs or those with flexible timelines, users can configure the job to run exclusively on spot instances. If spot nodes are evicted, the cluster continues to progress with fewer nodes instead of halting altogether.

Example of how it works:

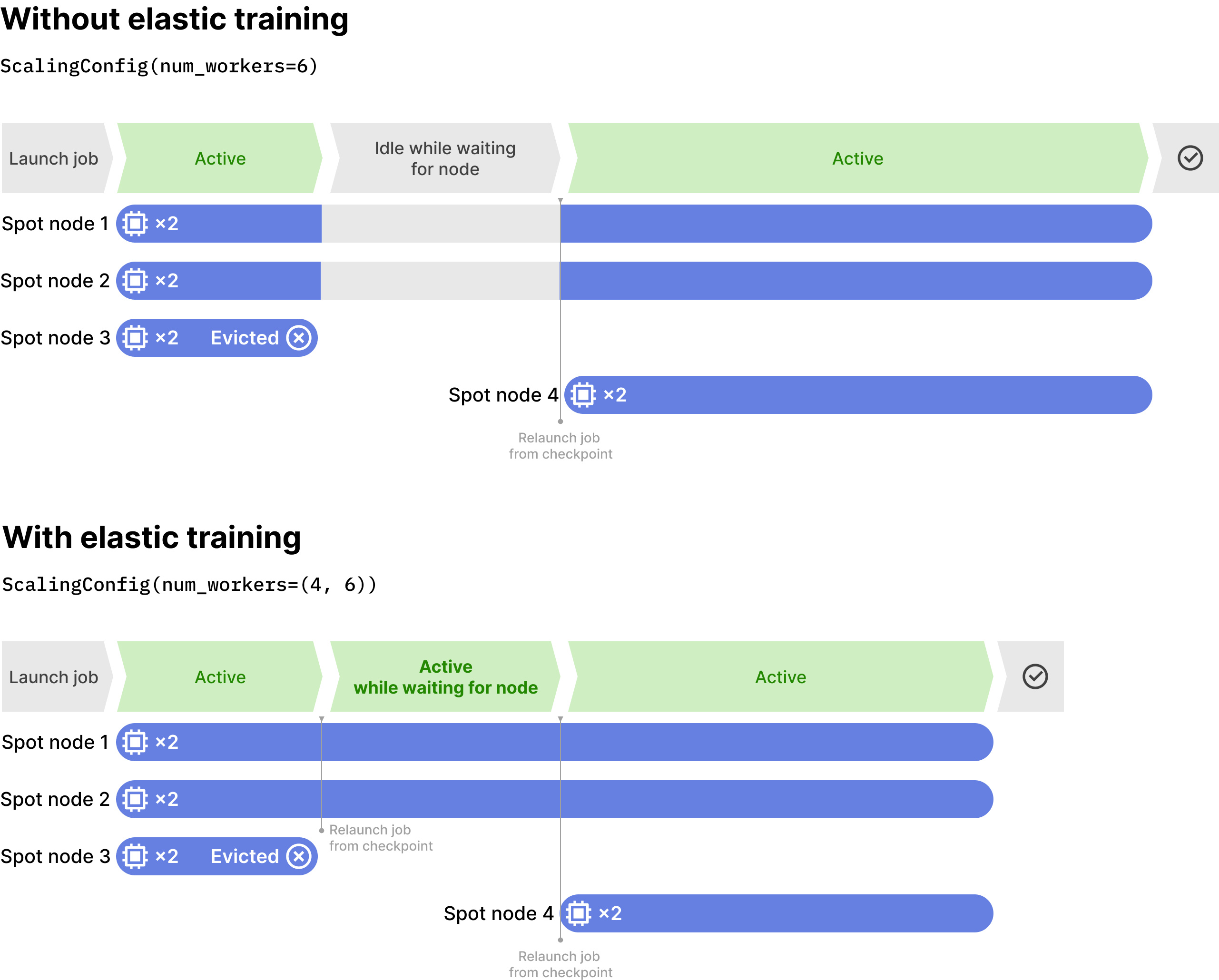

This example shows a training run configured so that the Ray Train worker group can scale elastically from 4 to 6 GPU workers. Each node has 2 GPUs, so the full cluster is 3 nodes.

The training starts with at least 4 workers.

If only 2 out of 3 nodes are available to start with, then training will begin with only 4 workers since the cluster only has 4 GPUs.

Let’s assume that training was able to start with all 6 workers. After some period, the spot node (Spot node 3) is evicted. The cluster will continue training on 4 GPU workers with elastic training.

If another node becomes available, the cluster autoscaler adds it and training scales back up, to at most 6 workers.

Note that without elastic training, the job could stall after the eviction for minutes or even hours while it waits for spot capacity to return, which is not unusual for high-demand GPU instances. All remaining GPUs would sit idle in the meantime, wasting money and compute that could otherwise be used to make forward training progress.
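The walkthrough above can be sketched as a small calculation. This is a simplified model for illustration only, not Anyscale's actual scheduling logic: it assumes one worker per GPU, and the function names `elastic_worker_count` and `fixed_worker_count` are hypothetical.

```python
from typing import Optional

GPUS_PER_NODE = 2  # from the example: each node has 2 GPUs
MIN_WORKERS = 4    # lower bound of the elastic worker group
MAX_WORKERS = 6    # upper bound of the elastic worker group


def elastic_worker_count(
    available_nodes: int,
    gpus_per_node: int = GPUS_PER_NODE,
    min_workers: int = MIN_WORKERS,
    max_workers: int = MAX_WORKERS,
) -> Optional[int]:
    """Workers an elastic job can run with, or None if below the minimum."""
    available_gpus = available_nodes * gpus_per_node
    if available_gpus < min_workers:
        return None  # not enough GPUs yet; the job waits for capacity
    return min(available_gpus, max_workers)


def fixed_worker_count(
    available_nodes: int,
    gpus_per_node: int = GPUS_PER_NODE,
    num_workers: int = MAX_WORKERS,
) -> Optional[int]:
    """Workers a fixed-size job can run with, or None if it must wait."""
    available_gpus = available_nodes * gpus_per_node
    return num_workers if available_gpus >= num_workers else None


# Node availability over time: full cluster, spot node evicted, node returns.
timeline = [3, 2, 3]
elastic = [elastic_worker_count(n) for n in timeline]
fixed = [fixed_worker_count(n) for n in timeline]
print("elastic:", elastic)
print("fixed:  ", fixed)
```

In this model the elastic job keeps training on 4 workers while one node is missing, whereas the fixed-size job has no valid worker count during that period and its remaining GPUs sit idle.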

Taking advantage of elastic training on Anyscale requires only a small change. Developers and practitioners only need to change ScalingConfig(num_workers) to specify (min_workers, max_workers) as a tuple instead of a fixed worker group size.
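A minimal sketch of that change follows, using the 4-to-6 worker range from the example. The tuple form of `num_workers` is taken from the description above and is assumed to be accepted on Anyscale; open-source Ray releases may only accept an integer. The helpers `elastic_scaling_kwargs` and `build_trainer` are hypothetical names for illustration, and `TorchTrainer` is just one possible trainer choice.

```python
from typing import Any, Callable, Dict


def elastic_scaling_kwargs(
    min_workers: int, max_workers: int, use_gpu: bool = True
) -> Dict[str, Any]:
    """Build ScalingConfig keyword arguments for an elastic worker group."""
    if not 1 <= min_workers <= max_workers:
        raise ValueError("expected 1 <= min_workers <= max_workers")
    # Elastic form described in the article: a (min, max) tuple
    # instead of a fixed integer worker count.
    return {"num_workers": (min_workers, max_workers), "use_gpu": use_gpu}


def build_trainer(train_loop_per_worker: Callable[..., None]):
    """Create a trainer whose worker group may scale from 4 to 6 GPU workers."""
    # Imported lazily so the helper above can be used without Ray installed.
    from ray.train import ScalingConfig
    from ray.train.torch import TorchTrainer

    # Assumption: the tuple value is supported by the Anyscale runtime.
    scaling_config = ScalingConfig(**elastic_scaling_kwargs(4, 6))
    return TorchTrainer(train_loop_per_worker, scaling_config=scaling_config)
```

With an existing training function, `build_trainer(train_loop_per_worker).fit()` would then start the run. The fixed-size equivalent would pass a single integer such as `num_workers=6`.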

Summary and Key Benefits:

Lower costs: run training on lower-cost spot instances and save as much as 60% on cloud costs.

Faster training: keep making progress when nodes are preempted or fail, and deliver models in less time.

Minimal code changes: implementing elastic training on Anyscale requires only minimal modifications to your existing code.