Fine-tuning LLMs for longer context and better RAG systems

One of the primary limitations of transformers is their limited ability to operate on long sequences of tokens. For many applications of LLMs, overcoming this limitation is powerful. In Retrieval Augmented Generation (RAG), a longer context augments our model with more information. For LLMs that power agents, such as chatbots, longer context means more tools and capabilities. When summarizing, longer context means more comprehensive summaries. Plenty of use-cases for LLMs are unlocked by longer context lengths. This post explains the fundamentals of context extension and serves as a practical guide to creating tailored, synthetic datasets and solving problems with long context.

If a model has been natively trained on a given context length, it will have little to no inherent ability to generalize to longer sequences. This limits the applicability of many models. Some closed-source models such as GPT 4 perform well on longer contexts, but are prohibitively expensive in production. Motivated by the pain of these limitations, a number of solutions have been proposed to extend the native context length by altering the model. Note that for brevity, when using the term “long context”, we refer to any context that is longer than the original context length of any given model.

In this post, we refine the popular “Needle In a Haystack” benchmark and compare our best fine-tuned models against closed-source alternatives. We also explain our process of creating a problem-specific dataset in a cost-effective and generalizable way by using Anyscale Endpoints with JSON mode. By the end of this post, you should know how to create synthetic long-context datasets for specific use cases and how to use Anyscale’s APIs to train and deploy your model. For this particular example, we show that you can get very close to the performance of a fine-tuned GPT-3.5-turbo and GPT-4 by fine-tuning a Llama-2-13B model and serving it at 10x cheaper inference and training cost. You can later refer to our code as a starting point for creating your own synthetic datasets for long-context fine-tuning.

We expect techniques and principles presented here to be relevant to a multitude of use-cases. For instance, in RAG, we compile snippets perceived as most relevant into a context to augment our model’s internal knowledge. If many snippets are relevant or if reranking is expensive, we want to extend the context of the model. Similarly, when using LLMs to power an agent, long context is a powerful tool that facilitates long-term memory and more descriptive instructions and tools for the agent.

We begin by explaining the intricacies of benchmarking long context and of creating a synthetic fine-tuning dataset to improve on the benchmark. We then briefly present how we fine-tuned our open-source models, benchmark them, and compare cost and performance against several alternatives.

Dataset: Retrieval of structured biographical information

Following OpenAI’s announcement of context lengths of 128k for their GPT-4-based models, Greg Kamradt tweeted about a novel benchmark called “Needle In A Haystack”. Later, he open-sourced the benchmarking code. The original benchmark takes a series of Paul Graham’s essays (the haystack) and inserts a piece of information about what to do in San Francisco (the needle). It then asks the model under test to extract that exact piece of information from the haystack. The model’s predictions are then evaluated and mapped to an accuracy score at each depth level and context length of the haystack. This benchmark gives us an idea of how well the model can attend to relevant information from a large corpus and base its answer on it. As in RAG, we chain together bits of information and ask the model to base its answer on the presented information.

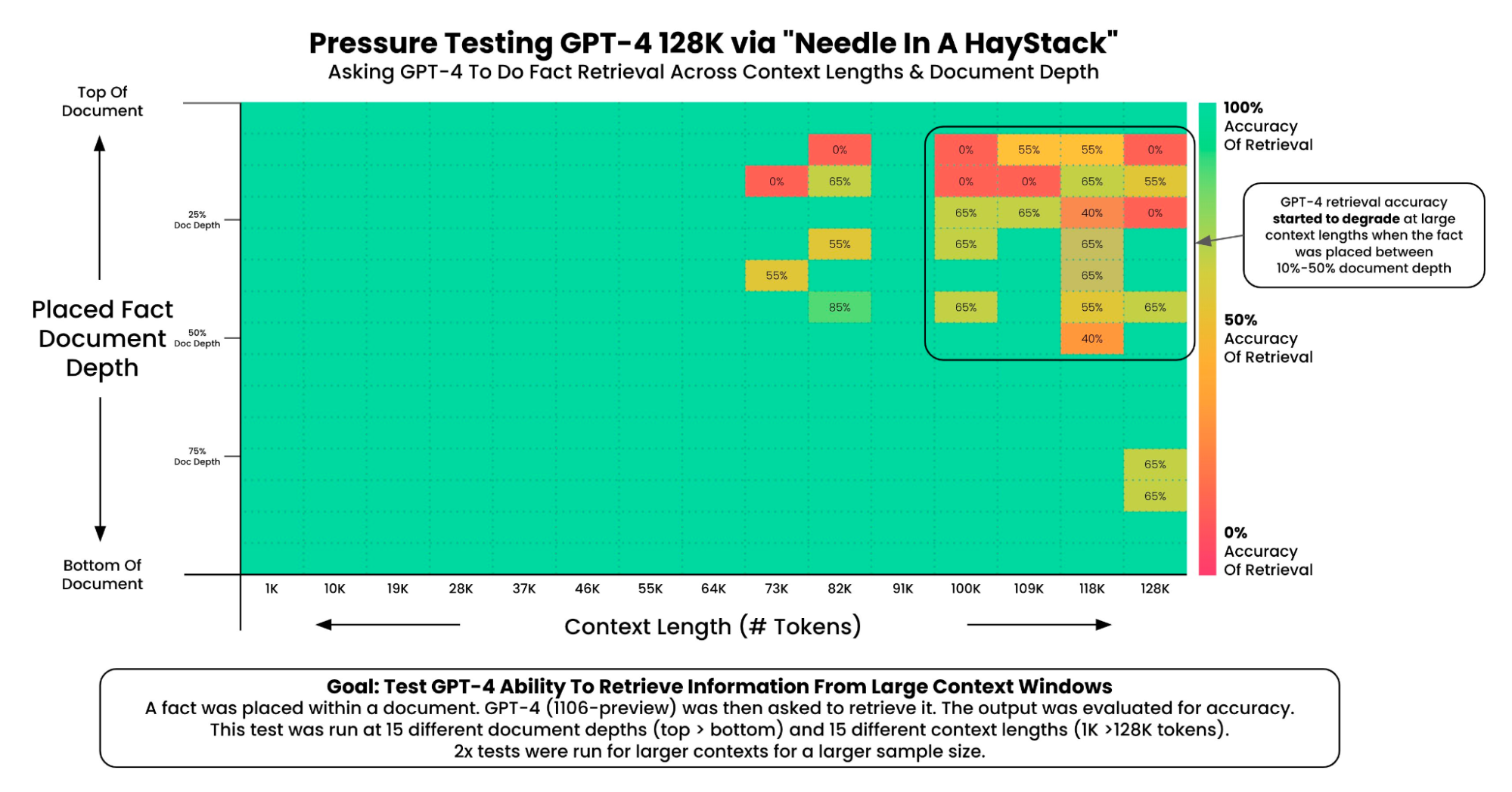

The following is the figure from Greg Kamradt’s original post:

We can see how lower context lengths yield successful retrievals wherever we put the needle. But Greg Kamradt found that “GPT-4’s recall performance started to degrade above 73K tokens” and that “Low recall performance was correlated when the fact to be recalled was placed at 7%-50% document depth”.

The benchmark is similar to the pass-key-retrieval task (Chen et al.), where a key is hidden inside unrelated information and subsequently retrieved by an LLM. Although “Needle In a Haystack” clearly illustrates a model’s capability to extract information from long context, we identify the following issues with it:

1. The response “The best thing to do in San Francisco is eat a sandwich and sit in Dolores Park on a sunny day.” is an answer that models may already be biased towards, even without grounding.
2. The information to be retrieved is semantically distinct from the corpus in which it is embedded. Information may “stand out” a lot to the model.
3. To solve these benchmarks, the model can simply “copy and paste” the needle without reasoning about it.
4. The benchmark involves a judge model to score the answer. This can lead to “self-enhancement” bias during evaluation and is costly.

We propose the following changes:

To solve (1), we first change the domain and base our task on the wiki_bio dataset, which is a collection of biographies. We swap out all names from the original wiki_bio dataset with randomly chosen unique names, so that models cannot be preconditioned towards the right answer. To solve (2), we use biographies as the “haystack”, but also as the “needle”, making it harder to retrieve the needle among similar information. To solve (3), we ask the model for information such as whether a person is a sportsperson or politician. This requires the model to reason about the biography to a certain extent. To solve (4), we create all labels in JSON as detailed below.

Creating the labels in JSON lets us evaluate efficiently, and it lets us compile the biographies into a fine-tuning dataset. This process can be adapted to a multitude of applications and is scalable and straightforward. We select a number of biographies that are going to be our needles. We then use Anyscale Endpoints in JSON mode to extract information in JSON. Note that creating 5000 labels this way costs less than $1 with the Anyscale Endpoints API.
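To make the idea of a structured label more concrete, here is a minimal sketch of a schema and a standard-library check for it. The field names follow the example label shown later in this post. The schema itself and the helper names (`LABEL_SCHEMA`, `conforms`, `is_valid_label`) are assumptions for illustration, not the schema actually passed to JSON mode.

```python
import json

# Hypothetical schema: field names mirror the example label in this post,
# but the exact schema used for the benchmark is not shown in the source.
DATE_SCHEMA = {
    "type": "object",
    "properties": {
        "day": {"type": "integer"},
        "month": {"type": "integer"},
        "year": {"type": "integer"},
    },
    "required": ["day", "month", "year"],
}
LABEL_SCHEMA = {
    "type": "object",
    "properties": {
        "nationality": {"type": "string"},
        "date_of_birth": DATE_SCHEMA,
        "date_of_death": DATE_SCHEMA,
        "sportsperson": {"type": "boolean"},
        "politician": {"type": "boolean"},
    },
    "required": [
        "nationality", "date_of_birth", "date_of_death",
        "sportsperson", "politician",
    ],
}

_TYPES = {"object": dict, "string": str, "integer": int, "boolean": bool}


def conforms(value, schema):
    """Check a value against the small JSON Schema subset used above."""
    expected = _TYPES[schema["type"]]
    # bool is a subclass of int in Python, so reject it for "integer".
    if not isinstance(value, expected) or (
        expected is int and isinstance(value, bool)
    ):
        return False
    if expected is dict:
        if any(key not in value for key in schema.get("required", [])):
            return False
        return all(
            conforms(value[key], sub_schema)
            for key, sub_schema in schema.get("properties", {}).items()
            if key in value
        )
    return True


def is_valid_label(raw):
    """Return True if `raw` parses as JSON and matches LABEL_SCHEMA."""
    try:
        return conforms(json.loads(raw), LABEL_SCHEMA)
    except json.JSONDecodeError:
        return False
```

A check like this can be run over every generated label before it enters the dataset, so malformed labels are dropped early.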

We create the training dataset by forming haystacks of concatenated biographies and inserting a needle biography at a random position in each. Evaluation follows the regime of the original “Needle In A Haystack” benchmark: We assemble a haystack for each context length and sweep the position of the needle through the haystack to test how well the model operates on information at each position.
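The haystack construction described above can be sketched as follows. This is a simplified illustration rather than the open-sourced script: the function names are hypothetical, biographies are plain strings, and trimming the haystack to a target context length is left out.

```python
import random


def build_haystack(distractors, needle, depth):
    """Insert `needle` among `distractors` at a relative depth in [0, 1]."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    position = round(depth * len(distractors))
    biographies = distractors[:position] + [needle] + distractors[position:]
    return " ".join(biographies)


def training_example(distractors, needle, rng):
    """Training: the needle goes to a random position in the haystack."""
    return build_haystack(distractors, needle, rng.random())


def evaluation_sweep(distractors, needle, num_depths=5):
    """Evaluation: sweep the needle from the start to the end of the haystack."""
    depths = [i / (num_depths - 1) for i in range(num_depths)]
    return {depth: build_haystack(distractors, needle, depth) for depth in depths}
```

For evaluation, one such sweep would be built per context length, which gives the same depth-by-length grid as the original benchmark.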

We have open-sourced the scripts for constructing the dataset and the benchmark.

Here is a shortened datapoint consisting of many biographies:

    albert mayer ( born november 29 , 1973 , yerevan ) , is an armenian artist .
    tammy thompson [...] andrew dullea ( ; march 1 , 1848 -- august 3 , 1907 )
    was an american sculptor of the beaux-arts generation who most embodied
    the ideals of the . raised in new york city , he traveled to europe for
    further training and artistic study , and then returned to new york ,
    where he achieved major critical success for his monuments commemorating
    heroes of the american civil war , many of which still stand . in addition
    to his famous works such as the robert gould shaw memorial on boston common
    and the outstanding grand equestrian monuments to civil war generals ,
    john a. logan atop a pedestal done by the architectural firm of mckim ,
    mead & white , assisted by the chicago architect daniel h. burnham in
    chicago , 1894 -- 97 , and , at the corner of new york 's central park ,
    1892 -- 1903 , dullea also maintained an interest in numismatics . he
    designed the $ 20 gold piece , for the us mint in 1905 -- 1907 , considered
    one of the most beautiful american coins ever issued as well as the $ 10
    gold eagle , both of which were minted from 1907 until 1933 . in his later
    years he founded the , an artistic colony that included notable painters ,
    sculptors , writers , and architects . his brother louis dullea was also a
    well-known sculptor with whom he occasionally collaborated [...] the
    orpheum theatre in los angeles . frank lloyd wright 's son j

One of these biographies is the one attributed to the random name “andrew dullea”. This is our needle.

The model’s task is to extract information on “andrew dullea”. The following is the label created with Mixtral 8x7B on Anyscale Endpoints in JSON mode:

    {"nationality": "American", "date_of_birth": {"day": 1, "month": 3,
    "year": 1848}, "date_of_death": {"day": 3, "month": 8, "year":
    1907}, "sportsperson": false, "politician": false}

Unlike previous benchmarks, this task requires a higher level of reasoning: the model must work out whether the person is a sportsperson or politician, and the other fields are also a little more involved (e.g. “March” maps to “3” in the month field).
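Because every label is a JSON object, a response can be scored without a judge model. The sketch below shows one plausible scoring rule, the fraction of label fields reproduced exactly. The source does not spell out the exact metric, so this rule and the helper names are assumptions; it also assumes a non-empty label and does no normalization (for example of letter case in `nationality`).

```python
import json


def flatten(obj, prefix=""):
    """Flatten nested dicts into paths such as "date_of_birth.day"."""
    flat = {}
    for key, value in obj.items():
        path = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{path}."))
        else:
            flat[path] = value
    return flat


def _same(a, b):
    # Compare type as well as value so that `1` does not count as `True`.
    return type(a) is type(b) and a == b


def score_response(response_text, label):
    """Fraction of label fields matched exactly; 0.0 for unparseable output."""
    try:
        predicted = json.loads(response_text)
    except json.JSONDecodeError:
        return 0.0
    if not isinstance(predicted, dict):
        return 0.0
    expected = flatten(label)
    got = flatten(predicted)
    hits = sum(
        1
        for path, value in expected.items()
        if path in got and _same(got[path], value)
    )
    return hits / len(expected)
```

Scoring per field rather than per response gives partial credit when, say, only the month of a date is wrong.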

Dataset quality is crucial for the model to learn the statistical dependencies of the extracted data. After creating the training dataset, we manually inspect several data points to filter out bad ones. For example, in our case, many biographies belonged to bands rather than persons, so we filtered these from our needles. During evaluation, we also found it crucial to manually inspect many evaluated responses to find failure modes and look for a cause in the dataset. For example, it turned out that some biographies didn’t clearly have a nationality associated with them, which created noise, so we added a label for an unknown nationality. Creating a small tool that makes analysis of evaluation results accessible can go a long way here. In our case, we simply assembled all scores, labels and responses in a tabular HTML file to inspect them.
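A tabular HTML report of this kind needs very little code. The following is a minimal sketch using only the standard library. The function name and the row layout (one dict per evaluated response, all with the same keys) are assumptions, not the tool we actually used.

```python
import html


def results_to_html(rows):
    """Render evaluation rows (dicts sharing the same keys) as an HTML table."""
    if not rows:
        return "<table></table>"
    columns = list(rows[0])
    header = "".join(f"<th>{html.escape(str(col))}</th>" for col in columns)
    body = ""
    for row in rows:
        # Escape cell contents so model output cannot break the markup.
        cells = "".join(f"<td>{html.escape(str(row[col]))}</td>" for col in columns)
        body += f"<tr>{cells}</tr>"
    return f"<table><tr>{header}</tr>{body}</table>"
```

Writing the returned string to a `.html` file and opening it in a browser is enough to scan scores, labels and responses side by side.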

Let’s recap: We have added spice to the problem of the “Needle in a Haystack” by using anonymized biographies as needle and haystack. We have also created labels in JSON and assembled everything into a fine-tuning dataset. This process generalizes well to other applications that do or don’t require structured outputs, is highly scalable and inexpensive. Before we dive into the results of our fine-tuned models and compare them against closed source alternatives, we briefly demonstrate how we fine-tuned and served our open source models.

Fine-Tuning and Serving

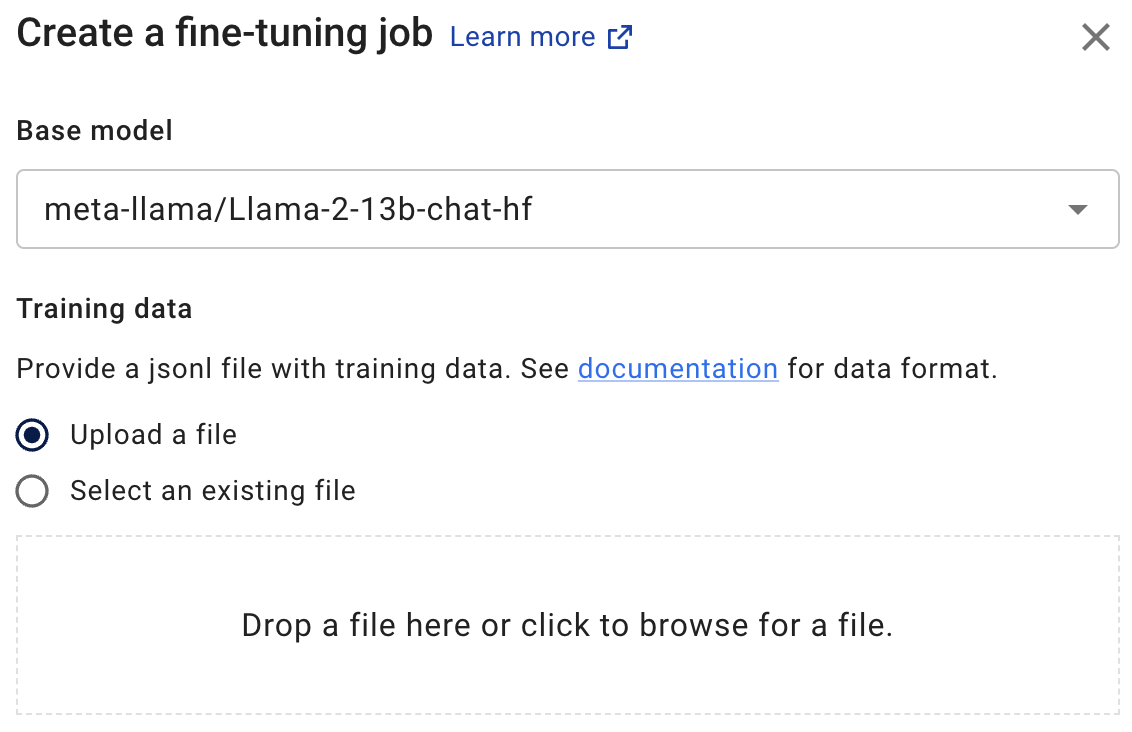

Setting up a fine-tuning stack is hard. When fine-tuning for "long context", we should not extend the context length of the LLM beyond what we need. The Anyscale Endpoints Fine-Tuning API automatically handles context length extension up to some maximum and lets you focus on your application logic (this feature will be released soon). You select the model that you want to fine-tune and upload a dataset such as the one created above. If needed, the Anyscale fine-tuning service sets the context length according to what the dataset requires, which can be higher than the native context length. You can use the UI to initiate a fine-tuning job on your data.

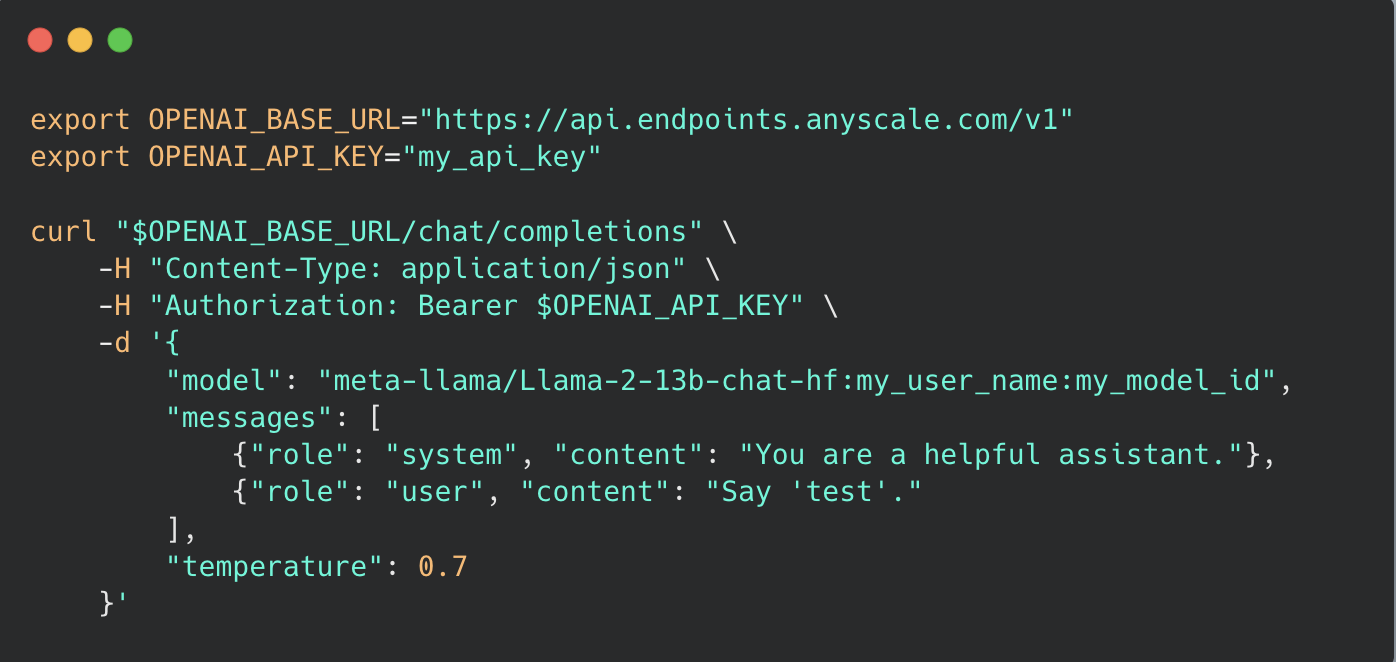

This removes the complexity of managing an LLM training stack and is cost-effective. Shortly after, we receive an email with instructions on how to query the fine-tuned model. There are no additional steps needed to serve the fine-tuned model and it will immediately be available through Anyscale Endpoints. We can then query it via OpenAI’s Python SDK.
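Querying the fine-tuned model through OpenAI’s Python SDK could look roughly like the sketch below. The base URL, the environment variable name, the model ID placeholder and the prompt wording are all assumptions for illustration; use the values from the email and your Anyscale account instead.

```python
import json
import os

# Assumed values for illustration only; replace them with the ones from
# your Anyscale Endpoints account and the fine-tuning email.
BASE_URL = "https://api.endpoints.anyscale.com/v1"
MODEL_ID = "your-fine-tuned-model-id"  # placeholder, not a real model name


def build_messages(haystack, name):
    """Build a chat prompt asking for structured information on `name`."""
    return [
        {"role": "system", "content": "Answer with a single JSON object."},
        {
            "role": "user",
            "content": f"{haystack}\n\nExtract the biographical information on {name}.",
        },
    ]


def query_model(haystack, name):
    """Send the prompt to the fine-tuned model and parse its JSON answer."""
    # Imported lazily so build_messages works without the `openai` package.
    from openai import OpenAI

    client = OpenAI(base_url=BASE_URL, api_key=os.environ["ANYSCALE_API_KEY"])
    completion = client.chat.completions.create(
        model=MODEL_ID,
        messages=build_messages(haystack, name),
        temperature=0.0,
    )
    return json.loads(completion.choices[0].message.content)
```

Because the endpoint is OpenAI-compatible, switching between an OpenAI model and the fine-tuned model only changes the base URL, API key and model ID.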

Benchmark Results

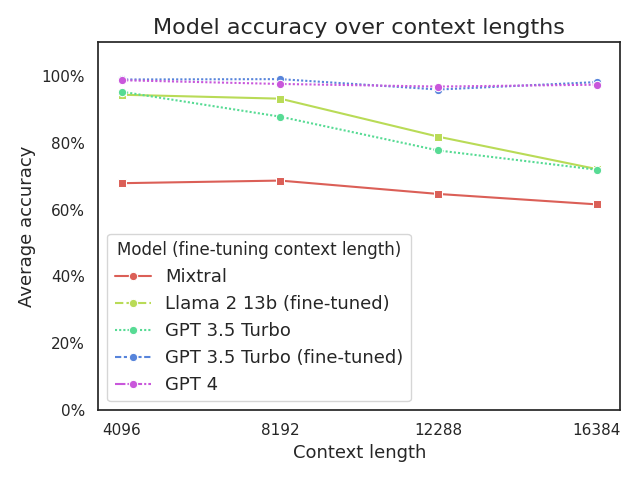

Before going into the results in detail, we present an overview of the accuracy of some off-the-shelf models and fine-tuned models on the data detailed above. In order to compare models across benchmarks, we focus on the average accuracy per context length. More specifically, the following graph quantifies the models’ ability to extract structured biographical information from a large corpus of concatenated biographies at different context lengths.

Our fine-tuned Llama 2 13B ends up more accurate than non-fine-tuned GPT 3.5. Fine-tuning GPT 3.5 gives us even better results, but at ~10x the cost during inference.

Some observations from this graph:

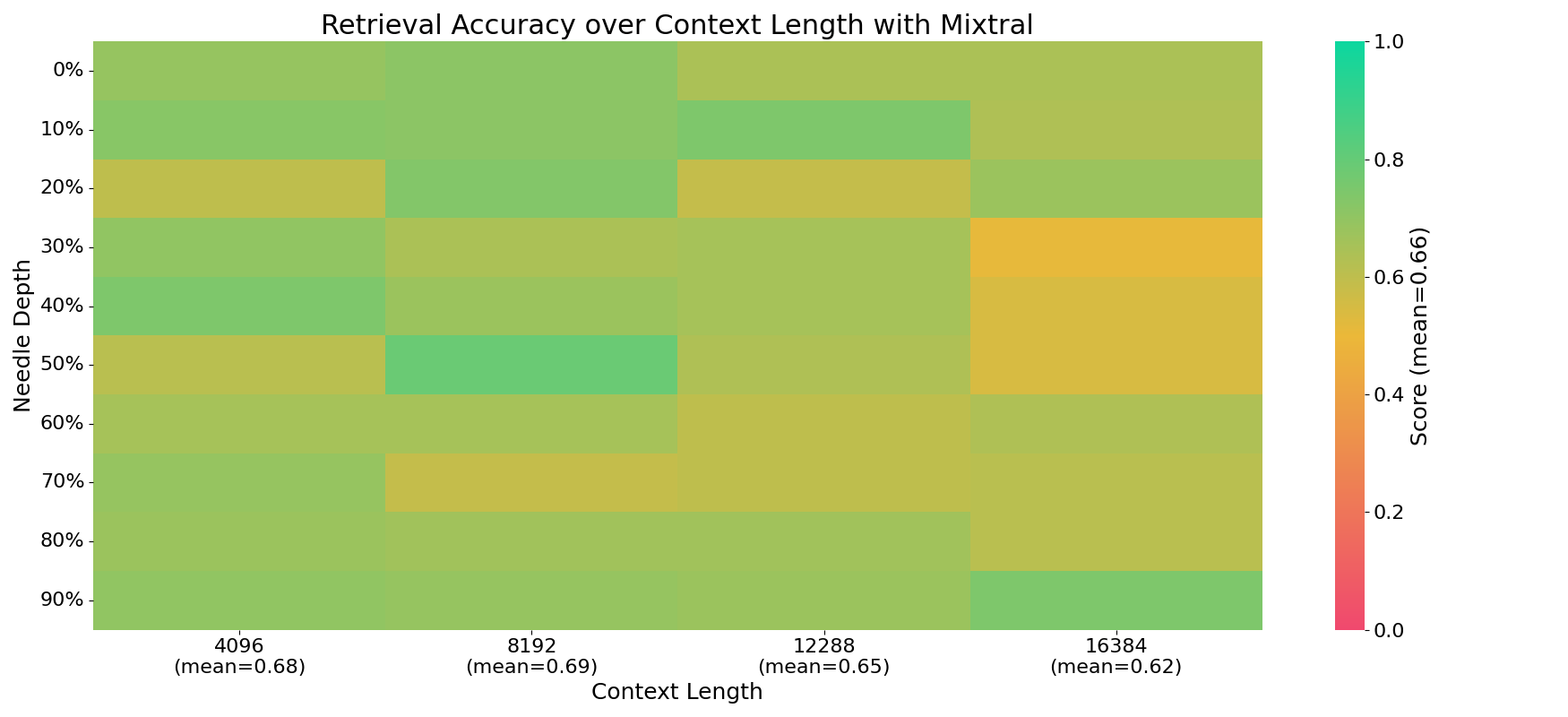

The Mixtral model, designed to handle a context length of 32k, maintains a consistent accuracy rate of approximately 67% across all tested context lengths.

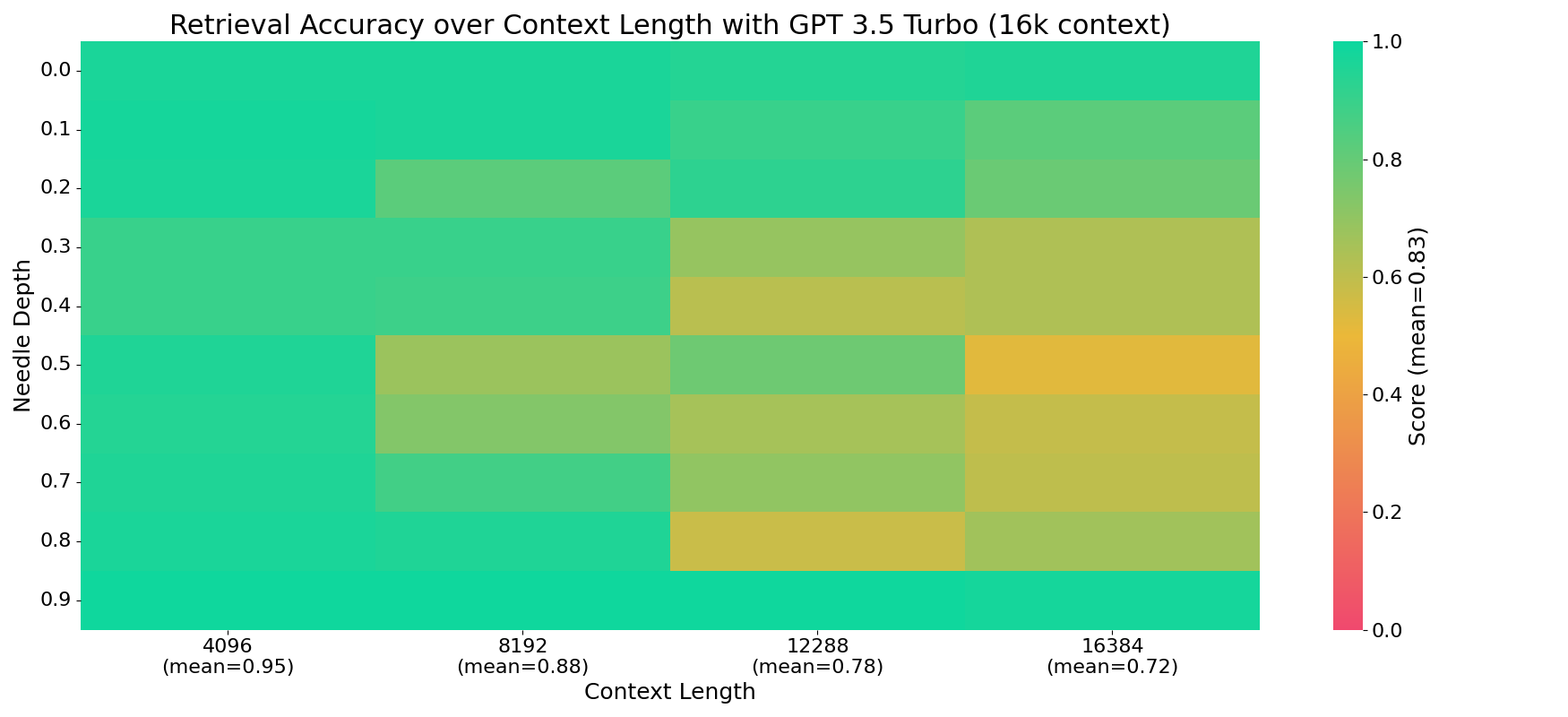

GPT-3.5, despite being trained for 16k context length, shows a decline in performance as the context length approaches 16k.

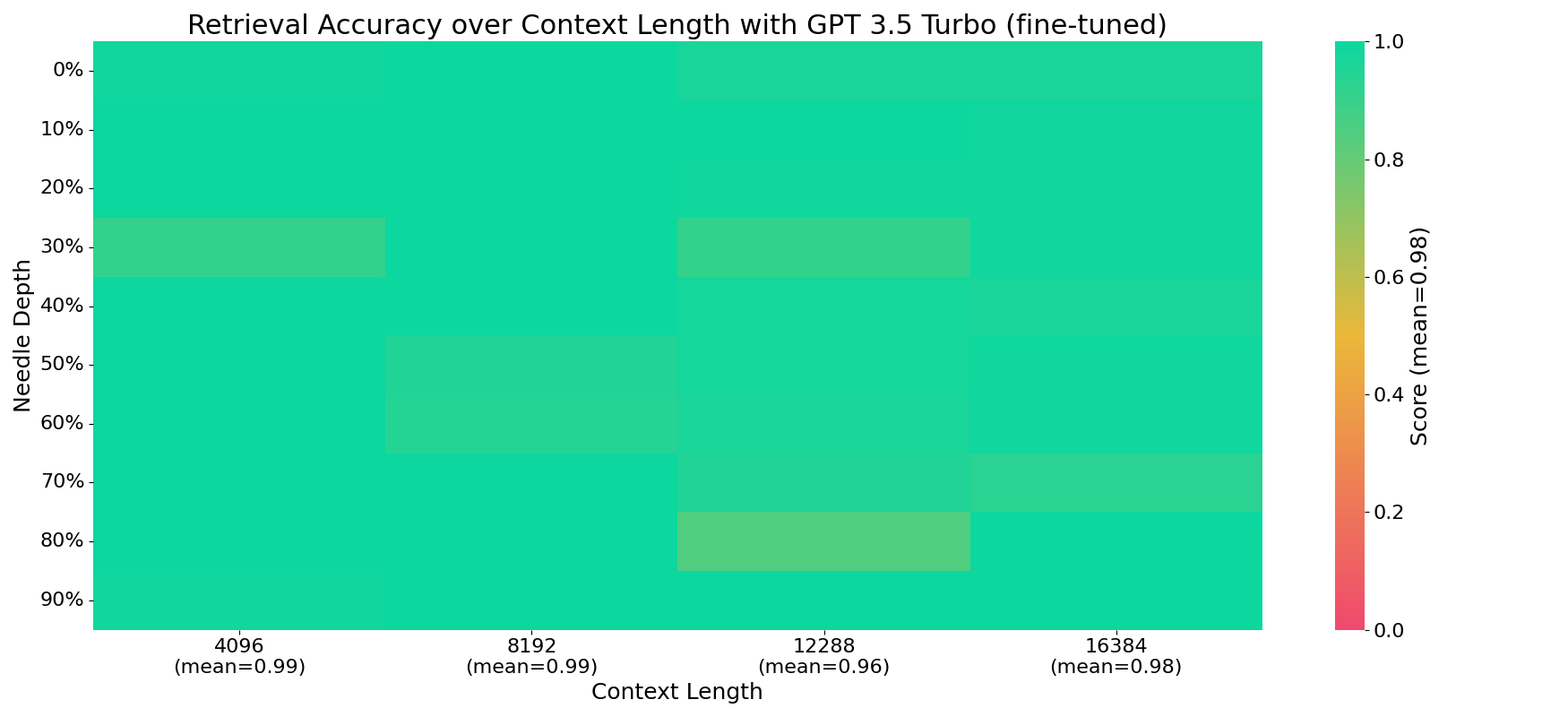

By fine-tuning GPT-3.5 for 16k context lengths, the performance discrepancy with GPT-4’s capabilities at larger context lengths is significantly reduced.

GPT-4, which can support context lengths up to 128k, achieves optimal performance across all context lengths tested up to 16k.

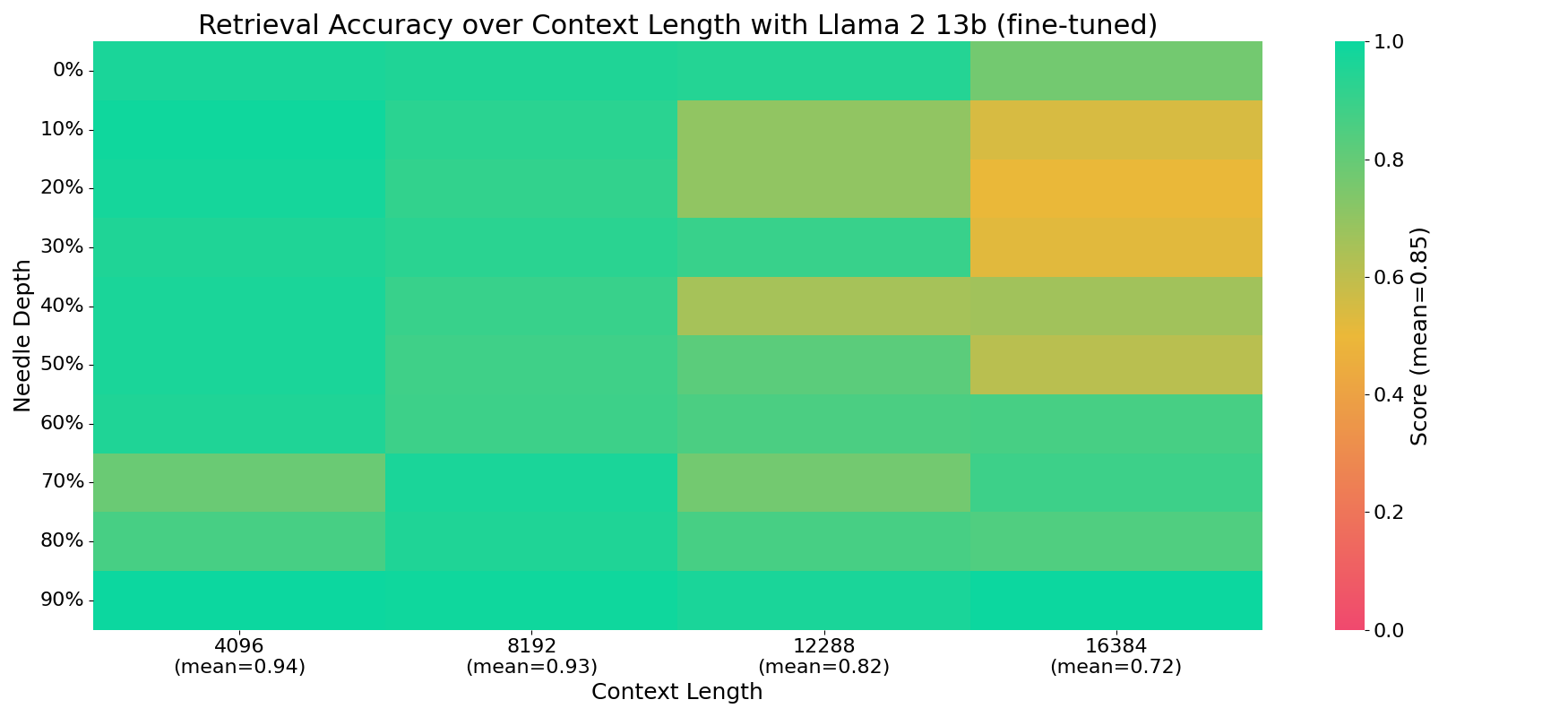

Fine-tuning the Llama 2 13B model, which has an original context length of 4k tokens, on data with context lengths of up to 16k tokens significantly improves its quality, surpassing the performance of an unmodified GPT-3.5. This improvement is particularly notable because the model is also a cost-effective option for inference.
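The average accuracy per context length used in this comparison is a simple aggregation over needle positions. A minimal sketch, assuming each evaluation result is stored as a `(context_length, depth, score)` tuple (a hypothetical layout):

```python
from collections import defaultdict
from statistics import mean


def accuracy_by_context_length(results):
    """Average scores over all needle depths for each context length.

    `results` is an iterable of (context_length, depth, score) tuples.
    """
    grouped = defaultdict(list)
    for context_length, _depth, score in results:
        grouped[context_length].append(score)
    return {length: mean(scores) for length, scores in sorted(grouped.items())}
```

Averaging over depth hides effects such as “Lost in the Middle”, so the per-depth scores are still worth keeping for the detailed plots.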

To make an informed decision about how to trade off cost and accuracy, we can estimate the cost of a single query at ~16k Llama 2 tokens (roughly 13.5k GPT tokens). To do so, we tokenize the same prompt with the tokenizers of all respective models and use the pricing information published by OpenAI and Anyscale to estimate the cost of the prompt. Since we only predict JSON, answers are brief, so even though output tokens are usually more expensive, we don’t need to account for them here.

| Models (hosted by Anyscale / OpenAI) | ~ cost / 1000 queries | ~ training cost / 5k examples |
| --- | --- | --- |
| Mixtral | $7.5 | N/A |
| Llama 2 13B (fine-tuned) | $3.8 | $88 |
| GPT 3.5 Turbo | $6.8 | N/A |
| GPT 3.5 Turbo (fine-tuned) | $40.9 | $856 |
| GPT 4 | $136.21 | N/A |
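The arithmetic behind such an estimate is short enough to sketch. The per-1000-query costs below are copied from the table above. The helper names are hypothetical, and any per-token price passed to `cost_per_1000_queries` is an input you would look up yourself, not a figure from this post.

```python
# Approximate cost per 1000 queries in USD, copied from the table above.
COST_PER_1000_QUERIES = {
    "Mixtral": 7.5,
    "Llama 2 13B (fine-tuned)": 3.8,
    "GPT 3.5 Turbo": 6.8,
    "GPT 3.5 Turbo (fine-tuned)": 40.9,
    "GPT 4": 136.21,
}


def cost_per_1000_queries(prompt_tokens, usd_per_million_tokens):
    """Input-token cost of 1000 identical prompts; output tokens are ignored."""
    return 1000 * prompt_tokens * usd_per_million_tokens / 1_000_000


def relative_cost(model, baseline):
    """How many times more expensive `model` is per query than `baseline`."""
    return COST_PER_1000_QUERIES[model] / COST_PER_1000_QUERIES[baseline]
```

With the table’s numbers, `relative_cost("GPT 3.5 Turbo (fine-tuned)", "Llama 2 13B (fine-tuned)")` comes out at roughly 10.8, in line with the ~10x figure quoted earlier.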

We can learn from this that, for the given problem, we can explore the problem cheaply during prototyping with Mixtral while getting reasonable results. If we want to get a sense of what the upper bound accuracy is or to test our evaluation procedure, we can query GPT 4.

In production, we likely want to fine-tune to maximize cost-efficiency. The choice of the fine-tuned model will then depend on our target accuracy.

Baselines

We first set two baselines to get a feeling for how hard our refined benchmark is.

First, we use Mixtral 8x7B in JSON mode without fine-tuning as an inexpensive and open-source choice.

Mixtral has a native context length of 32k tokens and through JSON mode we can force the proper output structure on our results and get a quick feeling for how hard the task is.

This gives us a baseline of a mean accuracy of 66%.

OpenAI’s models are a popular choice for exploring problems. At the time of writing, we were unable to force the OpenAI API to guarantee a specific output format. Nevertheless, GPT-3.5-turbo, asked for JSON output with minimal prompt engineering, gave us the following accuracies:

GPT 3.5 Turbo clearly suffers from the “Lost in the Middle” problem but does considerably better than Mixtral 8x7B.

Fine-tuned Models

With the process of creating a synthetic fine-tuning dataset that we detailed above, we can programmatically create datasets of arbitrary size for any context length. This makes the process of optimizing for a given application straightforward. Although there are plenty of context-lengths to choose from, we choose 16k Llama 2 tokens to ensure comparability across different models and model sizes. We fine-tune all models on 5000 examples. Easier problems probably won’t require as many examples.
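Assembling haystacks and labels into a fine-tuning file can be sketched as below. It assumes the chat-style `messages` format that OpenAI’s fine-tuning API accepts, written as one JSON object per line. The prompt wording and function names are hypothetical and not taken from our scripts.

```python
import json

# Hypothetical system prompt; the wording used for the benchmark is not shown.
SYSTEM_PROMPT = "Extract the requested biographical information as a JSON object."


def to_chat_example(haystack, name, label):
    """Turn one (haystack, needle name, label) triple into a chat example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"{haystack}\n\nExtract information on {name}."},
            {"role": "assistant", "content": json.dumps(label)},
        ]
    }


def to_jsonl(examples):
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(example) + "\n" for example in examples)
```

Since the same file format works for both providers under this assumption, one dataset can be reused to fine-tune each model in the comparison.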

Fine-tuning GPT-3.5-Turbo with this many tokens cost us $856 for three epochs without any further adjustments and we were able to obtain the following results.

The fine-tuned GPT-3.5-Turbo gives us almost perfect results. Note that this shows two things: First, our synthetic training dataset is of sufficient quality to solve this complex problem. Second, our evaluation procedure seems to work well without a judge model.

Finally, we fine-tune Llama 2 13B with Anyscale Endpoints on the same dataset we used before to fine-tune GPT-3.5-Turbo. The cost of this was $88 for one epoch.

Llama 2 13B ends up in the same ballpark as the fine-tuned GPT 3.5 Turbo at lower context lengths. At higher context lengths, accuracy degrades but remains higher than both non-fine-tuned baselines. Such a tradeoff between cost and performance for production use-cases has to be analyzed on a per-case basis.

Conclusion

In this blogpost we have created a custom fine-tuning dataset for long context and benchmarked popular options.

More specifically, we have:

- Analyzed the popular “Needle In A Haystack” benchmark and added some spice to it to make it more challenging and more relevant to RAG applications.
- Shown a generalizable and scalable procedure for creating synthetic fine-tuning datasets for structured data with Anyscale Endpoints.
- Benchmarked popular models and shown the effectiveness of the dataset and our fine-tuning procedure.
- Compared cost and performance and learned when to fine-tune and how to choose the right analysis framework.

You can start fine-tuning your own long-context models with Anyscale Endpoints or read the documentation. This feature will be available soon. Join the waitlist now!
